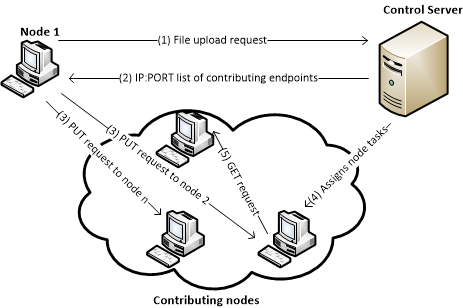

A cloud storage service such as Microsoft SkyDrive requires building data centers and incurs ongoing operational and maintenance costs. An alternative approach is based on a distributed computing model that uses a portion of the storage and processing resources of consumer-level computers and SME NAS devices to form a peer-to-peer storage system. Members contribute some of their local storage space to the system and in return receive an “online backup and data sharing” service. Providing data confidentiality, integrity and availability in such a decentralised storage system is a major challenge. As the cost of data storage devices declines, it is debatable whether P2P storage really saves costs. I leave this debate to the critics and instead look into a peer-to-peer storage system to study its security measures and possible issues. An overview of this system’s architecture is shown in the following picture:

Each node in the storage cloud receives an amount of free online storage space, which the control server can increase if the node agrees to “contribute” some of its local hard drive space to the system. File synchronisation and contribution agents running on every node interact with the cloud control server and other nodes as shown in the above picture. Folder/file synchronisation is performed in the following steps:

1) The node authenticates itself to the control server and, over an HTTPS connection, sends a file upload request with the file metadata, including its SHA1 hash value, size, number of fragments and file name.

2) The control server replies with the AES encryption key for the relevant file/folder, a file ID and a list of contributing nodes in [IP Address]:[Port number] form, called the “endpoints list”.

3) The file is split into blocks, each of which is encrypted with the above AES encryption key. The encrypted blocks are then split into 64 fragments, and redundancy information is added to them.

4) The node then connects to the contribution agent at each endpoint address received in step 2 and uploads one fragment to each of them.
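The client-side preparation in steps 1, 3 and 4 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the agent's actual code: the metadata field names, the block size parameter and the helper names `file_metadata`, `split_block` and `assign_fragments` are hypothetical, each block is assumed to yield 64 fragments, and both the AES encryption (which would use the key from step 2) and the unknown redundancy scheme are deliberately left out.

```python
import hashlib
import math

FRAGMENTS_PER_BLOCK = 64  # from step 3; assumed here to apply to each block


def file_metadata(name: str, data: bytes, block_size: int) -> dict:
    """Step 1: metadata for the upload request (field names are hypothetical)."""
    blocks = max(1, math.ceil(len(data) / block_size))
    return {
        "name": name,
        "size": len(data),
        "sha1": hashlib.sha1(data).hexdigest(),
        "fragments": blocks * FRAGMENTS_PER_BLOCK,
    }


def split_block(block: bytes, n: int = FRAGMENTS_PER_BLOCK) -> list[bytes]:
    """Step 3: split one (already AES-encrypted) block into n equal-size fragments.

    The block is zero-padded so it divides evenly. Encryption and the real
    redundancy scheme are not modelled here.
    """
    frag_len = max(1, math.ceil(len(block) / n))
    padded = block.ljust(frag_len * n, b"\0")
    return [padded[i * frag_len:(i + 1) * frag_len] for i in range(n)]


def assign_fragments(fragments: list[bytes], endpoints: list[str]) -> list[tuple[str, bytes]]:
    """Step 4: pair each fragment with one "IP:port" endpoint from step 2."""
    if len(endpoints) < len(fragments):
        raise ValueError("not enough endpoints for one fragment each")
    return list(zip(endpoints, fragments))
```

Keeping one fragment per endpoint mirrors step 4: no single contributing node holds more than one piece of a block.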

Since the system nodes are not under the full control of the control server, they may go offline at any time, or the stored file fragments may be damaged or intentionally modified. As such, the control server needs to monitor node and fragment health regularly so that it can move lost or damaged fragments to alternate nodes if needed. For this purpose, the contribution agent on each node maintains an HTTPS connection to the control server, over which it receives the following “tasks”:

a) Adjust settings: instructs the node to modify its upload/download limits, contribution size, etc.

b) Block check: asks the node to connect to another contribution node and verify a fragment's existence and hash value.

c) Block recovery: asks the node to assist the control server in recovering a number of fragments.
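A “Block check” task (b) essentially comes down to fetching a fragment from an endpoint and comparing its SHA1 hash with the expected value. The sketch below assumes a caller-supplied `fetch` function that stands in for the HTTP(S) request to the remote contribution agent; `block_check` and `BlockCheckResult` are hypothetical names, not taken from the agent. Note that the control server only ever sees whatever result the checking node chooses to report.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical transport: fetch(endpoint, fragment_name) returns the fragment
# bytes, or None if the endpoint does not have it.
Fetch = Callable[[str, str], Optional[bytes]]


@dataclass
class BlockCheckResult:
    endpoint: str
    fragment_name: str
    present: bool
    hash_ok: bool


def block_check(endpoint: str, fragment_name: str,
                expected_sha1: str, fetch: Fetch) -> BlockCheckResult:
    """Fetch one fragment from an endpoint and verify its SHA1 hash."""
    data = fetch(endpoint, fragment_name)
    if data is None:
        return BlockCheckResult(endpoint, fragment_name, False, False)
    ok = hashlib.sha1(data).hexdigest() == expected_sha1.lower()
    return BlockCheckResult(endpoint, fragment_name, True, ok)
```

With an in-memory dictionary as `fetch`, a stored fragment whose hash matches yields `present=True` and `hash_ok=True`.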

By delegating the above tasks, the control system places some degree of “trust”, or at least “assumptions”, on the availability and integrity of the agent software running on the storage cloud nodes. However, malicious nodes can manipulate those agents in order to disrupt cloud operations, attack other nodes or even gain unauthorised access to the distributed data. I limited the scope of my research to the synchronisation and contribution agent software of two storage nodes under my control, one of which was acting as a contribution node. I didn’t include an analysis of the system’s encryption or redundancy in this preliminary research because it could affect the live system and should only be performed in a test environment, which was not possible to set up because the target system’s control server was not publicly available. Within the contribution agent alone, I found that I could not only store (and download) files on the cloud’s nodes without authorisation, but also gain unauthorised access to the folder encryption keys.

a) Unauthorised file storage and download

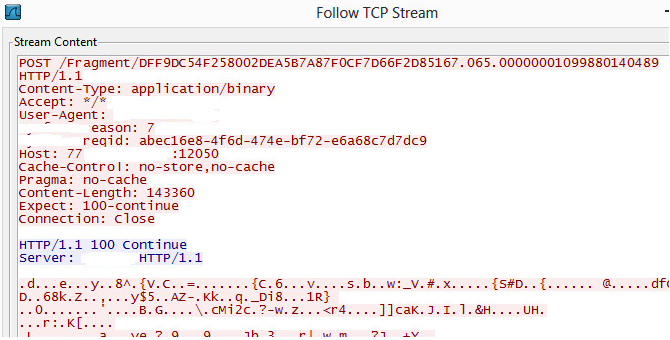

The contribution agent created a TCP network listener that processed commands from the control server as well as requests from other nodes. The agent communicated with the control server and other nodes in the cloud over HTTP(S). An example file fragment upload request from a remote node is shown below:

Uploading fragments using a path name in a similar format to the above resulted in a “bad request” error from the agent. This indicated that the fragment name must be related to its content and that the contribution agent checks this condition before accepting the PUT request. Decompiling the agent software showed that the fragment name must have the following format to pass this validation:

<SHA1(uploaded content)>.<Fragment number>.<Global Folder Id>
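The naming rule can be expressed as a short validation function. This is a reconstruction from the format above, not the decompiled code itself; the helper names and the assumption that the fragment number and folder ID are decimal integers are illustrative. It also shows why the check does not authenticate the uploader: anyone can compute the SHA1 of arbitrary content and build a name that passes.

```python
import hashlib
import re

# <SHA1(uploaded content)>.<Fragment number>.<Global Folder Id>
FRAGMENT_NAME = re.compile(r"([0-9a-fA-F]{40})\.(\d+)\.(\d+)")


def is_valid_fragment_name(name: str, content: bytes) -> bool:
    """Accept the name only if its SHA1 part matches the uploaded content."""
    m = FRAGMENT_NAME.fullmatch(name)
    if m is None:
        return False
    return m.group(1).lower() == hashlib.sha1(content).hexdigest()


def make_fragment_name(content: bytes, fragment_no: int, folder_id: int) -> str:
    """Build a name that passes the check for any content (hypothetical helper)."""
    return f"{hashlib.sha1(content).hexdigest()}.{fragment_no}.{folder_id}"
```

Because the fragment number and folder ID are only checked for shape, a content-derived name is all an uploader needs.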

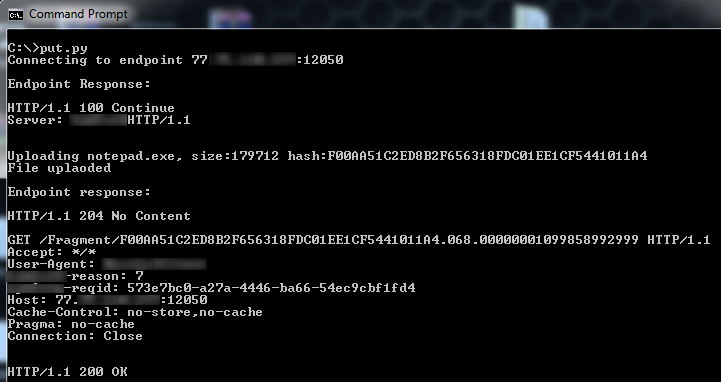

I used the above fragment name format to upload notepad.exe to the remote node successfully, as you can see in the following figure:

The download request (GET request) also succeeded regardless of the validity of the “Global Folder Id” and “Fragment Number”. The uploaded file remained accessible for about 24 hours until the contribution agent purged it automatically, probably because it did not receive any “Block Check” requests from the control server for this fragment. Twenty-four hours is still enough time for malicious users to abuse storage cloud nodes’ bandwidth and storage to serve their content over the internet without the victims’ knowledge.
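The observed 24-hour lifetime is consistent with a retention rule along the following lines. This only models the hypothesis above (that fragments never referenced by a “Block Check” are purged), not the agent's confirmed logic; `StoredFragment`, `purge_unchecked` and `RETENTION_SECONDS` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

RETENTION_SECONDS = 24 * 60 * 60  # lifetime observed for the unsolicited upload


@dataclass
class StoredFragment:
    name: str
    stored_at: float                          # Unix timestamp of the PUT
    last_block_check: Optional[float] = None  # None: never checked


def purge_unchecked(fragments: list[StoredFragment], now: float) -> list[StoredFragment]:
    """Return the fragments to keep under the hypothesised rule: a fragment that
    has never been the subject of a Block Check is dropped once it is older
    than RETENTION_SECONDS."""
    return [
        f for f in fragments
        if f.last_block_check is not None or now - f.stored_at < RETENTION_SECONDS
    ]
```

Under such a rule, every unsolicited upload gets the full retention window before removal, which is the abuse window described above.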

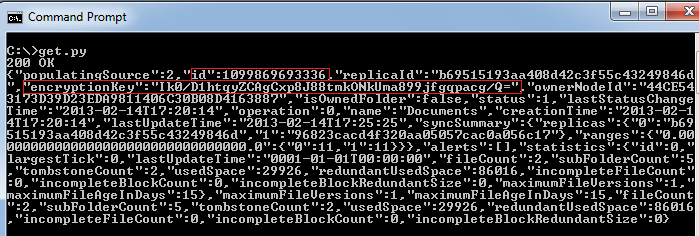

b) Unauthorised access to folder encryption keys

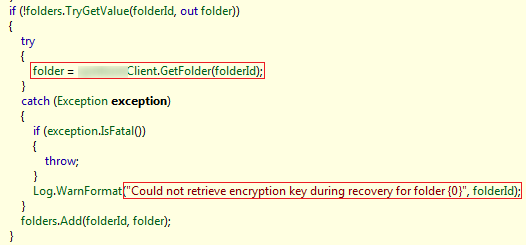

The network listener responded to GET requests from any remote node, as mentioned above. This was intended to serve “Block Check” commands from the control server, which instruct a node to fetch a number of fragments from other nodes (referred to as “endpoints”), verify their integrity by re-calculating the SHA1 hash and report back to the control server. This could be part of the cloud’s “health check” process to ensure that the distributed file fragments are accessible and have not been tampered with. The agent could also process “File Recovery” tasks from the control server, but I didn’t observe any such command during the dynamic analysis of the contribution agent. I therefore searched the decompiled code for clues about the file recovery process and found the following code snippet, which suggests that the agent is capable of retrieving encryption keys from the control server. This was odd, considering that each node should only have access to its own folders’ encryption keys, while it stores encrypted file fragments belonging to other nodes.

One possible explanation for the above file recovery code is that the node first downloads its own file fragments from remote endpoints (using an endpoint list received from the control server) and then retrieves the required folder encryption key from the control server in order to decrypt and re-assemble its own files. To test whether the file recovery operation could be abused to obtain the encryption keys of folders belonging to other nodes, I extracted the folderInfo request format from the agent code and set up another storage node as a target. The test was successful, as shown in the following figure: it was possible to retrieve the AES-256 encryption key for Folder Id “1099869693336”. This could enable an attacker who has set up a contributing storage node to decrypt the fragments that belong to other cloud users.
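The folderInfo result suggests that the control server returned a folder key without checking that the requesting node owns that folder. A minimal sketch of such an ownership check might look like this, assuming the node identity comes from the authenticated HTTPS session in step 1 and using hypothetical names (`Folder`, `folder_info`, `Forbidden`) rather than anything from the real server:

```python
from dataclasses import dataclass


class Forbidden(Exception):
    """Raised when a node asks for a key it is not entitled to."""


@dataclass(frozen=True)
class Folder:
    folder_id: str
    owner_node: str  # node identity taken from the authenticated session
    aes_key: bytes


def folder_info(folders: dict[str, Folder], requesting_node: str, folder_id: str) -> bytes:
    """Return a folder's AES key only to the node that owns the folder."""
    folder = folders.get(folder_id)
    # Same error for unknown and foreign folders, so the response does not
    # reveal which folder IDs exist.
    if folder is None or folder.owner_node != requesting_node:
        raise Forbidden(folder_id)
    return folder.aes_key
```

Since the service also offers data sharing, a real server would likely need an access list per folder rather than a single owner; the single-owner model here is a simplification.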


Conclusion:

While peer-to-peer storage systems have lower setup/maintenance costs, they face security threats from storage nodes that are not under the direct physical or remote control of the cloud controller system. As demonstrated in this post, examples of such threats relate to the cloud’s client agent software and the cloud server’s authorisation controls. While analysis of the data encryption and redundancy in the peer-to-peer storage system would be an interesting topic for future research, we hope that the findings from this research can be used to improve the security of distributed storage systems in general.