-1 – Pre-Intro

When looking at heap exploit tutorials most of the time I found myself lacking knowledge on the actual implementation and, soon, had the urge of knowing how it’s allocated and freed and why it’s done that way, memory wise.

-0.9 – ptmalloc2

The best source of knowledge with regards to the implementation of the heap is itself, the source code. Do not fear it, thankfully it is widely commented!

You can get (and I totally encourage you to do so) the source code from here. Having the file glibc-2.25/malloc/malloc.c open while reading this intro will get you into all the nifty details that I will skip here for the sake of simplicity and keeping in line with an “introductory” post.

1 – Intro

You will see that most old resources based on heap exploitation are based on Doug Lea’s Malloc. For this “painless” intro we are going to focus on the most commonly used ptmalloc2 implementation, which implements threads and is also based on the notorious dlmalloc (Doug Lea Malloc).

1.1 – ptmalloc2 Memory Management

This section makes use of human-readable, l33t-graph style, on memory structures and how they take place into managing the memory on the heap.

The heap is managed through different subsets of in-memory structures that help keep the memory in the heap as tidy as possible. One thing to keep in mind is that the heap grows in the opposite direction to the stack (the heap grows from smaller to bigger addresses – e.g. From 0x00000000 to 0xFFFFFFFF.)

The following is a simple representation of a program’s memory layout in Linux.

+--- Heap grows to bigger positions | | +-+-+-+-+-+-+ 0x00000000 V | CODE | +-----------+ | HEAP | | | +-----------+ | | A | | | +-----------+ +---- Stack grows to lower positions | STACK | +-----------+ 0xFFFFFFFF

1.1.1 – Arena

In ptmalloc2’s implementation, an Arena is the place for an application to store per-thread heaps. Ideally, one would think that each thread should have it’s own Arena but we move away from this idea as soon as we think of programs, such as the Apache Web Server, which has heavy multi-threading. This requires an insane amount of Arenas with each Arena having several heaps. This is why the implementation limits the number of Arenas by taking into account the cores present and the memory addresses the OS can map (32/64 bits):

malloc/malloc.c:1750

#define NARENAS_FROM_NCORES(n) ((n) * (sizeof (long) == 4 ? 2 : 8))

malloc/arena.c:906

... int n = __get_nprocs (); if (n >= 1) narenas_limit = NARENAS_FROM_NCORES (n); else /* We have no information about the system. Assume two cores. */ narenas_limit = NARENAS_FROM_NCORES (2); ...

1.1.2 – Top

When you allocate new chunks, top (the term given to free memory waiting to be allocated) always stays on top. Anything that goes beyond the top is called “the wilderness”. Top can grow, or shrink, depending on how much space is needed in the Arena. This is why on the first call to an allocation, the size of Top will be 0, which will force the extension to allocate memory on the first call to malloc.

So after three mallocations we should have the memory that looks like:

+---HEAP GROWS UPWARDS | | +-+-+-+-+-+-+ | | MALLOC 1 | | +-----------+ | | MALLOC 2 | | +-----------+ | | MALLOC 3 | | +-----------+ | | TOP | | | | V | | +-----------+ --- WILDERNESS ---

Easy, right?

1.1.3 – Bins

Bins are in-memory double linked structures that keep track of all the freed chunks. When a chunk is set free, its address gets added to a Bin array structure.

There are 128 bins: A single unsorted chunks bin and the remaining 127 bins. These are made of up 96 bins for small chunks and 21 for larger chunks: 1(Unsorted) + 96(Small chunks) + 31(Large chunks) = 128(Bins).

The main difference between the smaller and larger chunks is the following: When large chunks are being freed, they are stored by size, in their respective bins. Smaller chunks are stored in bins that hold freed chunks of the same size, sorted by when they were freed.

In the following scenario we are going to allocate 6 chunks of the same size (for example 256 bytes, so they are small but not a fastchunk) which will set the heap as follows:

+---HEAP GROWS UPWARDS | Bins | +-+-+-+-+-+-+ +------------------------+ | | CHUNK 1 | | | | +-----------+ +------------------------+ | | CHUNK 2 | | +-----------+ | | CHUNK 3 | | +-----------+ | | CHUNK 4 | | +-----------+ | | CHUNK 5 | | +-----------+ | | CHUNK 6 | | +-----------+ | | TOP | | | | V | | +-----------+ --- WILDERNESS ---

Now we free the chunk CHUNK 4 and then the chunk CHUNK 2:

+---HEAP GROWS UPWARDS | Bins | +-+-+-+-+-+-+ +------------------------+ | | CHUNK 1 | | ADDRESS OF FREESPACE 4 | (1)free | +-----------+ +------------------------+ | |FREESPACE 2 | (2)free | ADDRESS OF FREESPACE 2 | (2)free | +-----------+ +------------------------+ | | CHUNK 3 | | +-----------+ | |FREESPACE 4 | (1)free | +-----------+ | | CHUNK 5 | | +-----------+ | | CHUNK 6 | | +-----------+ | | TOP | | | | V | | +-----------+ --- WILDERNESS ---

As you can see, since both chunks are of the same size, they are stored according to when they were freed.

Now we are going to free CHUNK 3 from the previous scenario:

...

|FREESPACE 2 |

+-----------+

| CHUNK 3 | <-- going to be freed. kthxbye

+-----------+

|FREESPACE 4 |

...

Due to the implementation that states that there must be no adjacent free chunks, FREESPACE 2, FREESPACE 4 and the newly freed CHUNK 3 and FREESPACE 3 are going to be merged into one big chunk. Now the heap should look like:

+---HEAP GROWS UPWARDS | Bins | +-+-+-+-+-+-+ +------------------------+ | | CHUNK 1 | | ADDRESS OF FREECHUNK 2 | | +-----------+ +------------------------+ | |FREECHUNK 2| | | | | | | | | | | | | | +-----------+ | | CHUNK 5 | | +-----------+ | | CHUNK 6 | | +-----------+ | | TOP | | | | V | | +-----------+ --- WILDERNESS ---

This happens because when a chunk is freed, it sets its in-use bit to 0, then it will first look if the next or previous chunks are free and if so, it will merge them into one and will update the Bins array to match the current state of freed chunks. We will see programmaticaly how this is done later in this post.

1.1.4 – Fastbins

A Fastbin’s maximum size chunk is defined by:

#define MAX_FAST_SIZE (80 * SIZE_SZ / 4)

Where SIZE_SZ is 8 on 64bit systems and 4 on 32bit systems so, MAX_FAST_SIZE is 160 bytes and 80 bytes respectively. This means that any freed chunk smaller than MAX_FAST_SIZE will be first stored in the Fastbins structure.

Now this is a tricky one so read carefully: “[Fastbins] is an array of lists holding recently freed small chunks” single-linked and stored in “LIFO” .

Even trickier, when small chunks eligible for the Fastbin structure are freed, they still keep their in-use bit set. So basically, this Fastbin structure works like a cache for small chunks. This decision is made for performance reasons but adds to heap fragmentation if subsequent small chunks are allocated right after freeing into a Fastbin.

In the following scenario we allocated the following:

+---HEAP GROWS UPWARDS | Fastbins | +-+-+-+-+-+-+ +------------------------+ | | CHUNK 1 | | L1 | L2 | L4 | ...|L128| | +-----------+ +------------------------+ | | CHUNK 2 | | +-----------+ | |FASTCHUNK1| | +-----------+ | | CHUNK 3 | | +-----------+ | |FASTCHUNK2| | +-----------+ | | CHUNK 4 | | +-----------+ | | TOP | | | | V | | +-----------+ --- WILDERNESS ---

Now let’s cause some havoc, we are going to free FASTCHUNK 1 and FASTCHUNK 2:

+---HEAP GROWS UPWARDS | Fastbins | +-+-+-+-+-+-+ +------------------------+ | | CHUNK 1 | | L1 | L2 | L4 | ...|L128| | +-----------+ +------------------------+ | | CHUNK 2 | |FF2 | | +-----------+ +----+ | |FASTFREE 1 | |FF1 | | + + +----+ | | CHUNK 3 | | + + | |FASTFREE 2 | | +-----------+ | | CHUNK 4 | | +-----------+ | | TOP | | | | V | | +-----------+ --- WILDERNESS ---

And then let’s free CHUNK 3:

+---HEAP GROWS UPWARDS | Fastbins | +-+-+-+-+-+-+ +------------------------+ | | CHUNK 1 | | L1 | L2 | L4 | ...|L128| | +-----------+ +------------------------+ | | CHUNK 2 | |FF2 | | +-----------+ +----+ | |FASTFREE 1 | |FF1 | | + + +----+ | |FREECHUNK 3| | + + | |FASTFREE 2 | | +-----------+ | | CHUNK 4 | | +-----------+ | | TOP | | | | V | | +-----------+ --- WILDERNESS ---

If like me, right now you’d be asking ‘why are the three freed chunks not merged together?’

This happens because of the fastchunks keeping their in-use bit set because they are stored in the Fastbin structure and not in a normal Bin. The implementation won’t merge all the free spaces and will leave fragmentation. Also, as mentioned above, the implementation states that Fastbins are stored in LIFO (Last Input First Output), hence the FF2 stored on top of FF1 again for speed reasons. Now, for a deeper look and as a proof of concept let’s see all of this in a program:

As we can see, at the end, malloc5 gets allocated in the old malloc3 pointer instead of coalescing everything into a big free chunk and then allocating malloc5 which effectively messes with our memory (and possible future heap sprays/heap-fengshui).

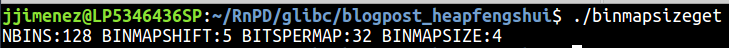

1.1.6 – Binmap

We will not go into detail with this structure as it only adds to the mess that by now, you can see the heap gets into (heap is heaps difficult, man!).

The Binmap is a bitvector one index array that stores if a Bin (of any type, Fast, Unorderd, Large, etc.) is empty or not so it can be skipped when searching for allocated or freed chunks. The size of this array is 16 bytes so it can hold four 32bit unsigned integers which effectively holds 128 (32*4) bits (the number of total Bins that the ptmalloc2 holds) – unsigned int binmap[BINMAPSIZE]; // BINMAPSIZE = 128/32=4; 4*4 = 16 (thanks to Andrey Igorevich for this clarification).

The following code (binmapsizeget.c in the tgz file) explains visually these last words and is just for the really nerdy out there that at this point haven’t quit and want to know more :) congrats!

1.1.7 – Unsorted chunks

Now take everything that you have read about free and allocations on this blogpost and add a queue to it. This is the Unsorted chunks bin (the bin at index 1 out of the 128 bins).

This queue is used (again) for speed purposes since, having a queue of recently freed chunks before storing these into their respective Bins saves a few instructions from being done. e.g. finding the right bin and then storing its address and then double linking on Bins or single linking on Fastbins. As you can see, it saves a lot of hassle for the implementation.

1.1.8 – A chunk and what revolves around

Why did I leave this for the end you might ask? Well, I wish I did the same when looking into memory management structures because it can get hard trying not to lose focus from the big picture.

1.1.8.1 – An allocated chunk

This is what an allocated chunk in the heap’s structure looks like:

+-+-+-+-+-+-+-+-+-+-+-+-+ <-- Chunk start | PREV_SIZE OR USER DATA| (1) +-----------------+-+-+-+ | CHUNK SIZE |A|M|P| (2) (3) +-----------------+-+-+-+ | USER DATA | (4) | | | - - - - - - - -| <-- End of chunk. Next chunk start (5) |PREV_SIZE ORUSER DATA| (6) +-----------------------+

An allocated chunk consists of 3 big parts. Two of them being 8 byte and 4 byte headers on 64bit/32bit systems respectively. The third chunk is composed of user data where the size is calculated by rounding it to the next 16 byte padded length on 64bit systems (next 8 byte padded size on 32 bit).

From now on in this section, we are going to reference everything on a 64bit architecture, translating it to 32bit in most of the cases a N/2 operation.

The first part (1) consists of the chunk start header which is 8 bytes. This is an overlapping part of the previous chunk. If the previous chunk is in use this part will hold the previous last 8 bytes of data and if the previous chunk is free, it will hold the previous’ chunk size:

+-+-+-+-+-+-+-+-+-+-+-+-+ <-- Chunk start | PREV_SIZE OR USER DATA| (1) +-----------------------+

The second part (2)(3) consists of another 8 bytes holding the size of the allocated chunk (2) padded to 16 bytes, as mentioned before. Thanks to all the sizes being 16 byte padded the last 3 bits are always unused regarding size (have no meaning for the size) and so these can be used as properties (3): Non-main Arena bit | MMAPed bit | Previous in-use bit. In our case the most important part and focused on exploiting these, is the Previous in-use bit, which depends on, as the name says, the previous chunk being used or not (not used = free):

+-----------------+-+-+-+ | CHUNK SIZE |A|M|P| (2) (3) +-----------------+-+-+-+

The last and third part (4)(5)(6) contains the user data. Since the chunk is allocated and in use, the user data (4) overlaps with the next chunk’s (6) start header but, the end of this chunk is actually at (5):

+-----------------------+ | USER DATA | (4) | | | - - - - - - - -| <-- End of chunk. Next chunk start (5) |PREV_SIZE ORUSER DATA| (6) +-----------------------+

1.1.8.2 – A FREE CHUNK

A freed chunk consists of 4 parts. Two of them stay the same which are the first 16 bytes (8 bytes for chunk start and next 8 bytes for chunk size). Now that this chunk is free, two new parts (1)(2) will be written into this chunk and one into the next chunk (3). The forward pointer (1) stores the address of the next free chunk in the list and the back pointer (2) saves the address of the previous free chunk in the list, if any. Lastly the PREV_SIZE of the next chunk will be set to this chunk’s CHUNK SIZE.

+-+-+-+-+-+-+-+-+-+-+-+-+ <-- Chunk start | PREV_SIZE OR USER DATA| +-----------------+-+-+-+ | CHUNK SIZE |A|M|P| +-----------------+-+-+-+ | FORWARD POINTER(FD) | (1) | BACK POINTER(BK) | (2) | - - - - - - - -| <-- End of this chunk. Next chunk start. | PREV_SIZEOR USER DATA| (3) +-----------------------+

Remember the part where two or more chunks were merged because of being adjacent in memory? These headers are the ones used for this task:

+---HEAP GROWS UPWARDS | Bins | +-+-+-+-+-+-+ +------------------------+ | | CHUNK 1 | | ADDRESS OF FREESPACE 4 | | +-----------+ +------------------------+ | |FREESPACE 2| | ADDRESS OF FREESPACE 2 | | +-----------+ +------------------------+ | | CHUNK 3 | <-- about to be set free | +-----------+ | |FREESPACE 4 | | +-----------+ | | CHUNK 5 | | +-----------+ | | CHUNK 6 | | +-----------+ | | TOP | | | | V | | +-----------+ --- WILDERNESS ---

At the moment of freeing CHUNK 3, the implementation checks that CHUNK’s 3 previous in-use bit is not set, meaning that the chunk right behind CHUNK 3 is free so it goes to FREESPACE’s 2 Forward Pointer and sees that there is another free chunk at:

FREESPACE2->FD == FREESPACE4

And as we know, there can be no two adjacent freed normal chunks, so it proceeds to merge all of them into one big chunk. During merging operations the unlink macro is called several times and it is one of the most abused parts of code when exploiting the heap.

1.1.8.3 – the unlink macro

Breakups were never easy, but they set you free and so does the unlink macro by helping to take a normal chunk out of a Bin (remember, an array of freed chunks) and merge these in their coalescing free space:

malloc/malloc.c:1378

/* Take a chunk off a bin list */

#define unlink(AV, P, BK, FD) { \

FD = P->fd; \

BK = P->bk; \

if (__builtin_expect (FD->bk != P || BK->fd != P, 0)) \

malloc_printerr (check_action, "corrupted double-linked list", P, AV);\

else { \

FD->bk = BK; \

BK->fd = FD; \

if (!in_smallbin_range (chunksize_nomask (P)) \

&& __builtin_expect (P->fd_nextsize != NULL, 0)) { \

if (__builtin_expect (P->fd_nextsize->bk_nextsize != P, 0)\

|| __builtin_expect (P->bk_nextsize->fd_nextsize != P, 0))\

malloc_printerr (check_action, \

"corrupted double-linked list (not small)", \

P, AV); \

if (FD->fd_nextsize == NULL) { \

if (P->fd_nextsize == P) \

FD->fd_nextsize = FD->bk_nextsize = FD; \

else { \

FD->fd_nextsize = P->fd_nextsize; \

FD->bk_nextsize = P->bk_nextsize; \

P->fd_nextsize->bk_nextsize = FD; \

P->bk_nextsize->fd_nextsize = FD; \

} \

} else { \

P->fd_nextsize->bk_nextsize = P->bk_nextsize; \

P->bk_nextsize->fd_nextsize = P->fd_nextsize; \

} \

} \

} \

}

Frightening? First focus on the following code, this is one of the most abused parts from the old dlmalloc implementation as it had not as many security checks as ptmalloc2 implementation:

malloc/malloc.c:1378

...

#define unlink(AV, P, BK, FD) { \

FD = P->fd; \

BK = P->bk; \

...

FD->bk = BK; \

BK->fd = FD; \

...

It is setting the next chunk’s (FD = P->fd) Back Pointer (FD->bk) to this chunk’s (P) Back Pointer (FD->bk = P->bk). Then, it does the symmetric operation with the previous chunk’s Forward Pointer by setting it to this chunk’s forward pointer (BK->fd = FD). Note that the following graphs are structures in a Bin (id est, already free chunks). So, graphicl33tly

+-------+ +-------+ +-------+ |CHUNK 1| |CHUNK P| |CHUNK 3| +-------+ +-------+ +-------+ | FD |-->| FD |-->| FD | +-------+ +-------+ +-------+ | BK |<--| BK |<--| BK | +-------+ +-------+ +-------+

And this is how unlink finally leave the Bins array structure.

,----------. ,>+-------+ | +\-----/+ `>+-------+ | |CHUNK 1| | |C\UNK/P| |CHUNK 3| | +-------+ | +--\-/--+ +-------+ | | FD |-´ | FX | | FD | | +-------+ +--/-\--+ +-------+ | | BK | | /BK \ | ,>| BK | | +-------+ +/-----\+ | +-------+ `----------------------+

Now before following on with the rest of the code, we are going to quickly introduce…

1.1.8.4 – A LARGE FREE CHUNK

As mentioned in the beginning of the Bins section, larger chunks are sorted by size as well. This is done by double linking them by size with two new headers: fd_nextsize and bk_nextsize. These names are highly misleading because these aren’t size values, these are actually pointers to the next bigger(1) free chunk and to the previous smaller(2) free chunk.

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ <-- Chunk start | PREV_SIZE OR USER DATA | +---------------------------------+-+-+-+ | CHUNK SIZE |A|M|P| +---------------------------------+-+-+-+ | FORWARD POINTER(FD) | | BACK POINTER(BK) | | NEXT BIGGER POINTER (fd_nextsize) | (1) | PREVIOUS SMALLER PTR(bk_nextsize) | (2) | - - - - - - - - - - - - - | <-- End of this chunk. | PREV_SIZEOR USER DATA| +---------------------------------------+

1.1.8.5 – THE UNLINK MACRO FOR BIG BOYS

Coming back to the unlink macro, the following code is checking if the currently freed chunk is a large chunk (1) and that there are no larger chunks than this by checking that P->fd_nextsize is null (2). If this is the case it will do nothing as there is nothing to sort out regarding size since this was the biggest one in the list. Otherwise, it will first check that the double linked list is not corrupted (3) by checking that it is redundantly correct and, if it is secure to follow, it will get into the routine of unlinking taking into account the size(A).

malloc/malloc.c:1386

...

(1) if (!in_smallbin_range (chunksize_nomask (P)) \

(2) && __builtin_expect (P->fd_nextsize != NULL, 0)) { \

(3) if (__builtin_expect (P->fd_nextsize->bk_nextsize != P, 0)\

|| __builtin_expect (P->bk_nextsize->fd_nextsize != P, 0))\

malloc_printerr (check_action, \

"corrupted double-linked list (not small)", \

P, AV); \

...(A)

Now if the next chunk’s next bigger free chunk is the last one (PD->fd->fd_nextsize == NULL) (1), another check is done to see if the chunk we are freeing is the last one, (2) in which case the bk_nextsize and fd_nextsize pointers will point to itself (3). If the previous if condition(2) failed, it means that the next chunk is the biggest one and so it will proceed to set all pointers to the next free chunk (4). If the first check (1) failed, it means that the chunk to be freed has a chunk bigger ahead and a chunk smaller behind so it will just proceed with the normal unlink procedure (5) like we have seen before for setting the FD and BK chunks’ fd and bk pointers.

malloc/malloc.c:1393

(A)...

(1) if (FD->fd_nextsize == NULL) { \

(2) if (P->fd_nextsize == P) \

(3) FD->fd_nextsize = FD->bk_nextsize = FD; \

else { \

(4) FD->fd_nextsize = P->fd_nextsize; \

(4) FD->bk_nextsize = P->bk_nextsize; \

(4) P->fd_nextsize->bk_nextsize = FD; \

(4) P->bk_nextsize->fd_nextsize = FD; \

} \

} else { \

(5) P->fd_nextsize->bk_nextsize = P->bk_nextsize; \

(5) P->bk_nextsize->fd_nextsize = P->fd_nextsize; \

} \

...

If you watch closely at the unlink macro at (3) or (5) you could think: What will happen if the chunk I am about to free is the smallest large chunk? It would happen that it will not have a bk_nextsize set and would do a NULL pointer reference or even use uninitialised memory… This little problem made me go deeper into the malloc.c code and found this little piece.

malloc/malloc.c:3622

/* Avoid removing the first entry for a size so that the

skip list does not have to be rerouted. */

if (victim != last (bin)

&& chunksize_nomask (victim)

== chunksize_nomask (victim->fd))

victim = victim->fd;

remainder_size = size - nb;

unlink (av, victim, bck, fwd);

...

So again, for performance reasons, freeing the first chunk of the list, (hopefully) will not happen because of these lines of code.

2 – Conclusion and PoC’s

The heap is hard to maintain, especially in this implementation due to being threaded. Hopefully this article helps you understand the process of making a chunk free from its allocation and which structures play in the game of freeing chunks.

For this post there was an exploit scenarios section that I deleted due to lengthiness and “denseness” of it and so, will be kept for a future entry. But it’s good to mention that most of the time and as per my research while writing this, exploits in the heap happen to either a buffer overflow which will overwrite another chunk in memory (being careful to bypass the redundancy checks, which is possible), by calling free twice (double free bugs) on the same chunk of memory where lots of preconditions are to be met or, by 1 byte out of bound writes which would overwrite and change the next chunk’s PREV_INUSE bit and then tamper with the fake empty chunk. More on that for the next part!

Click here to obtain several PoC that show how the heap works in Linux: Github Repo.

The heap is a beautiful mess :)

If you find this useful, or notice i’ve introduced a glaring mistake, reach out to me. Javier at the domain name.