A few weeks ago, my friend Zblurx pushed a PR to Impacket implementing the Channel Binding Token (CBT) computation, based on code that ly4k had developed for the ldap3 library. This PR allowed any tool relying on Impacket’s LDAP library to connect to LDAP servers even when LDAP signing and LDAPS channel binding are enforced. Looking at the code, I thought it would be easy to implement the same mechanism for other protocols such as MSSQL, which I was already working on through PRs to NetExec.

So I started implementing CBT for MSSQL, thinking it would only take a couple of hours… but it ended up taking two whole weeks! Along the way, I learned a bunch of interesting things, so I figured I’d write a short blog post to share the journey.

1/ About MSSQL Server installation and configuration

First things first: how do you install an MSSQL server? Well, Microsoft offers multiple installers that you can get from the following page:

Some installers are for enterprises (and thus require a licence), while others, such as the free “Express” edition, are suited for testing purposes. Once the installer has run, you’ll have a fully working MSSQL database along with the SQLCMD.exe binary used to manage it. Yup, by default you won’t have a GUI tool, so if you need one you can either install your favourite GUI tool (hello there DBeaver) or Microsoft’s official one, SSMS.exe.

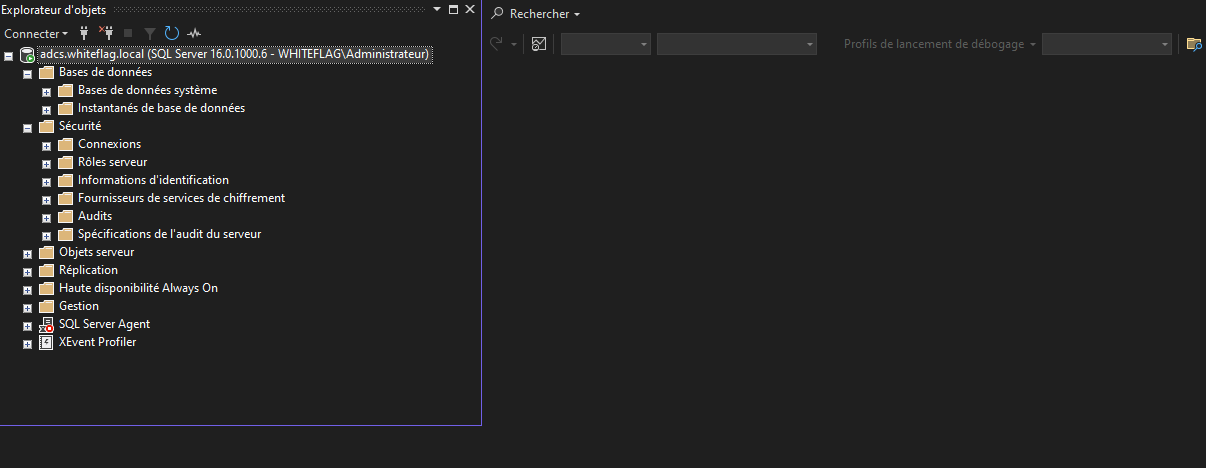

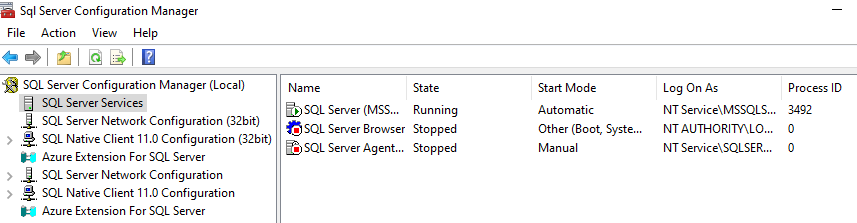

Since MSSQL runs as a service, it also comes with a dedicated MMC console:

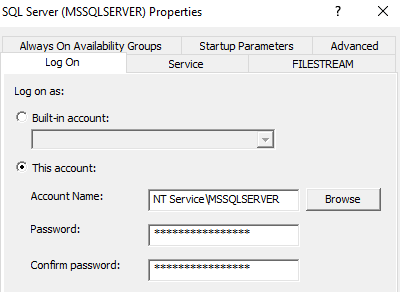

Multiple panels let you configure pretty much whatever you want. For example, you can choose which account runs the database:

This account can be a local account (in my lab, it’s the MSSQLSERVER virtual account) or a domain account (only needed if your database has to query information from your Active Directory). Keep in mind that whether it is a local or a domain account, you must restrict its privileges as much as you can to prevent lateral movement if the MSSQL server is compromised.

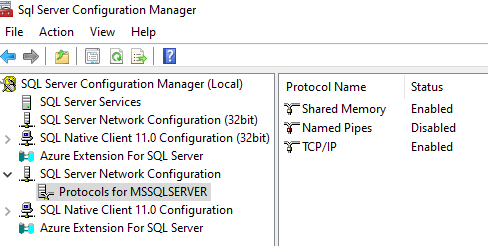

Other options let you configure whether your database is exposed on a TCP/IP port, via a named pipe, or both:

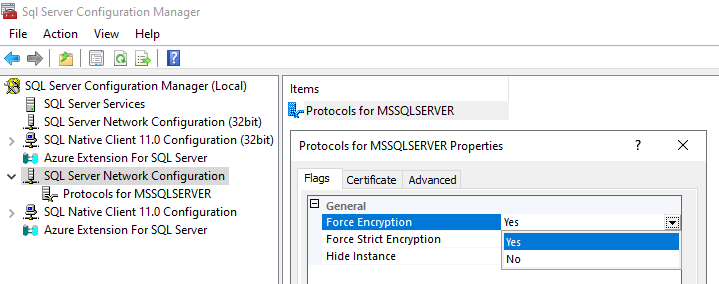

Whether your server supports TLS encryption:

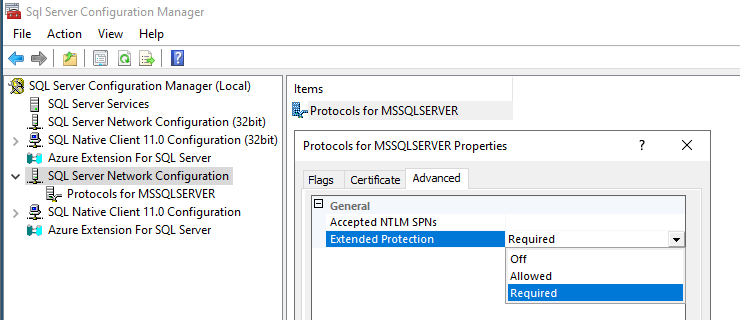

And whether it should also support Extended Protection for Authentication (EPA), which, under the hood, means supporting Channel Binding Tokens:

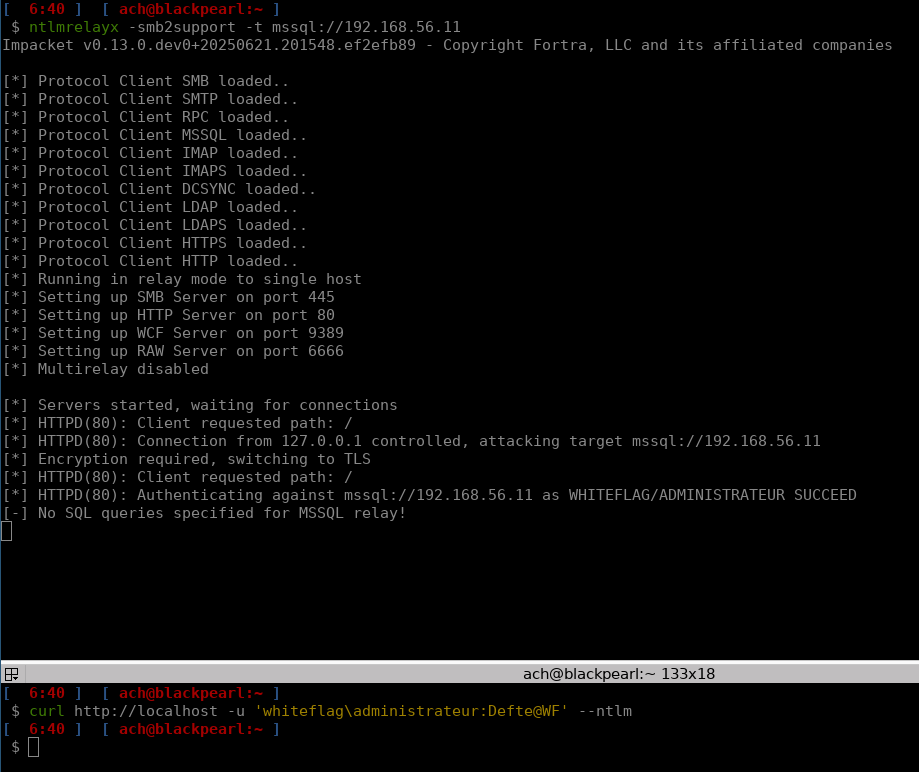

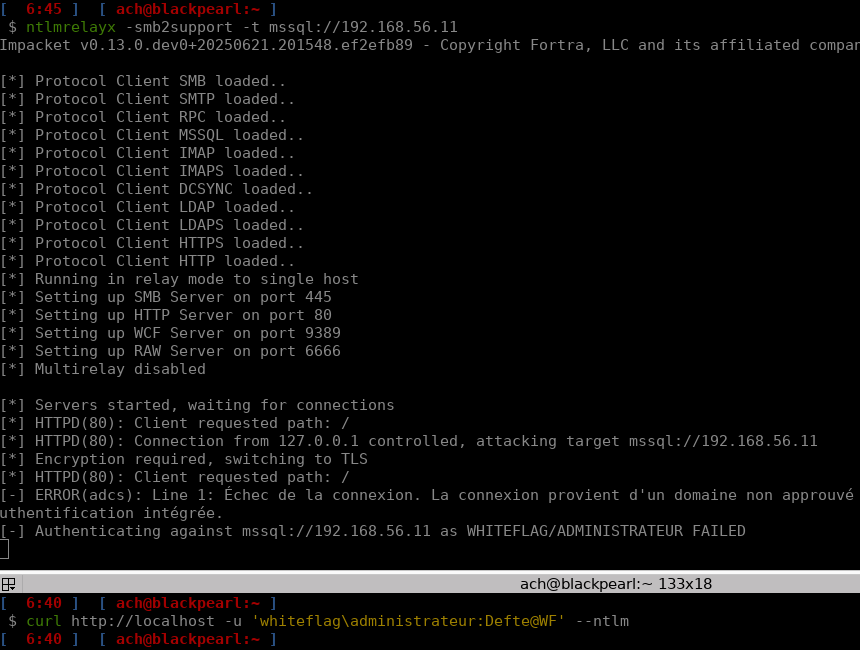

By default, a freshly installed MSSQL server is vulnerable to NTLM relay attacks, as you can see below:

You probably already know that there are two mechanisms that can prevent these attacks:

- NTLM signing (on unencrypted communications), which signs the messages exchanged after authentication with a key derived from the NTLM session key, so that a relaying attacker, who never learns that key, cannot produce valid traffic (not to be confused with the Message Integrity Code (MIC) field, which protects the NTLM authentication messages themselves);

- Channel Binding (on encrypted communications), which computes a binding token from the TLS session established between the client and the server and stores it in the Channel Bindings AV pair (MsvAvChannelBindings) of the NTLM response.

That being said, MSSQL doesn’t support signing on unencrypted communications. It is therefore recommended to enforce both encryption and Extended Protection, which together effectively prevent NTLM relay attacks against the server:

But keep in mind that if you do, you’ll need tooling that supports Channel Binding as well… And that’s not the case for the mssqlclient.py script from the Impacket toolkit. Using it against a server with EPA enabled gets you this message:

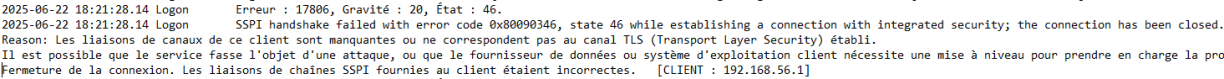

Connection error. The connection comes from a non-approved domain and cannot be used with integrated authentication.

That is a generic error: it basically says that authentication failed without telling us what is actually wrong… Is it the username? The password? Is the channel binding token missing? Fortunately, the MSSQL server’s logs are much more detailed and can be found at the following path (adapt `<VERSION>` to your MSSQL instance):

`C:\Program Files\Microsoft SQL Server\MSSQL<VERSION>.MSSQLSERVER\MSSQL\Log`

There, you’ll see the following line:

Which, translated, says something like:

The binding token for that client is missing or does not match the established TLS link.

It is possible that the MSSQL service is under attack or that the client does not support extended protection.

Closing the connection. The SSPI binding token sent by the client does not match.

So we know that the Channel Binding Token is missing or invalid and that we’ll have to compute it. The question is, how?

2/ Integrating CBT into TDS.py

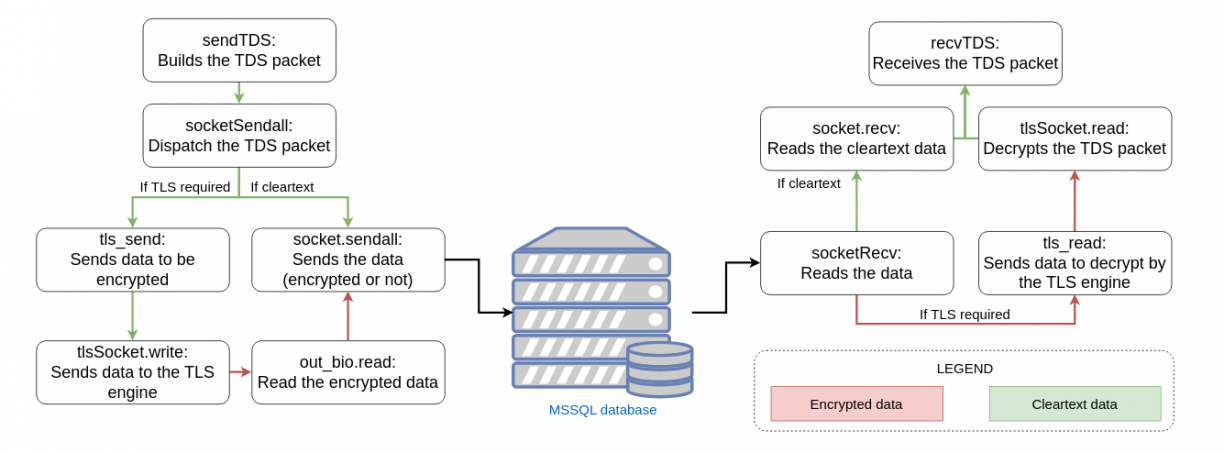

In the Impacket library, MSSQL communication is handled by the TDS.py script. It’s huge, but looking at it, we understand that talking to MSSQL is not just about sending some credentials or commands to a server. Instead, it relies on structured packets belonging to an application-layer protocol called TDS (Tabular Data Stream). This protocol was initially designed and developed by Sybase for its own SQL database engine and was later adopted by Microsoft for MSSQL. Looking at the code, we can see that two functions are used to:

- Send TDS packets (sendTDS):

```python
def sendTDS(self, packetType, data, packetID = 1):
    if (len(data)-8) > self.packetSize:
        remaining = data[self.packetSize-8:]
        tds = TDSPacket()
        tds['Type'] = packetType
        tds['Status'] = TDS_STATUS_NORMAL
        tds['PacketID'] = packetID
        tds['Data'] = data[:self.packetSize-8]
        self.socketSendall(tds.getData())

        while len(remaining) > (self.packetSize-8):
            packetID += 1
            tds['PacketID'] = packetID
            tds['Data'] = remaining[:self.packetSize-8]
            self.socketSendall(tds.getData())
            remaining = remaining[self.packetSize-8:]
        data = remaining
        packetID+=1

    tds = TDSPacket()
    tds['Type'] = packetType
    tds['Status'] = TDS_STATUS_EOM
    tds['PacketID'] = packetID
    tds['Data'] = data
    self.socketSendall(tds.getData())
```

- Receive TDS packets sent by the MSSQL server (recvTDS):

```python
def recvTDS(self, packetSize = None):
    if packetSize is None:
        packetSize = self.packetSize

    data = b""
    while data == b"":
        data = self.socketRecv(packetSize)

    packet = TDSPacket(data)
    status = packet["Status"]
    packetLen = packet["Length"]-8
    while packetLen > len(packet["Data"]):
        data = self.socketRecv(packetSize)
        packet["Data"] += data

    remaining = None
    if packetLen < len(packet["Data"]):
        remaining = packet["Data"][packetLen:]
        packet["Data"] = packet["Data"][:packetLen]

    while status != TDS_STATUS_EOM:
        if remaining is not None:
            tmpPacket = TDSPacket(remaining)
        else:
            tmpPacket = TDSPacket(self.socketRecv(packetSize))

        packetLen = tmpPacket["Length"] - 8
        while packetLen > len(tmpPacket["Data"]):
            data = self.socketRecv(packetSize)
            tmpPacket["Data"] += data

        remaining = None
        if packetLen < len(tmpPacket["Data"]):
            remaining = tmpPacket["Data"][packetLen:]
            tmpPacket["Data"] = tmpPacket["Data"][:packetLen]

        status = tmpPacket["Status"]
        packet["Data"] += tmpPacket["Data"]
        packet["Length"] += tmpPacket["Length"] - 8

    return packet
```

Both functions rely on the TDSPacket structure, defined as:

```python
class TDSPacket(Structure):
    structure = (
        ('Type', '<B'),                # The type of data that is going to be sent
        ('Status', '<B=1'),            # The status (a simple data packet or, for example, a reset connection one)
        ('Length', '>H=8+len(Data)'),  # The size of the packet: 8-byte header + data
        ('SPID', '>H=0'),              # This value is not used
        ('PacketID', '<B=0'),          # The packet ID, used to rebuild the entire data if multiple TDS packets are sent
        ('Window', '<B=0'),            # This one is not used either
        ('Data', ':'),                 # The actual data that is sent to the MSSQL server
    )
```

This TDS structure makes sending data to an MSSQL server quite easy: all you need to do is build the data you want to send, and the Length field defaults to the size of that data plus the 8-byte header.

Another interesting part of the TDS.py script is the preLogin function. As you can see, it builds a PRE_LOGIN packet in which the client specifies some information, such as its version and the Encryption field, which defines whether it supports TLS encryption:

```python
def preLogin(self):
    prelogin = TDS_PRELOGIN()
    prelogin['Version'] = b"\x08\x00\x01\x55\x00\x00"
    prelogin['Encryption'] = TDS_ENCRYPT_OFF
    prelogin['ThreadID'] = struct.pack('<L',random.randint(0,65535))
    prelogin['Instance'] = b'MSSQLServer\x00'

    self.sendTDS(TDS_PRE_LOGIN, prelogin.getData(), 0)
    tds = self.recvTDS()

    return TDS_PRELOGIN(tds['Data'])
```

The Encryption field can take the following values:

```python
# Encryption is available but off.
TDS_ENCRYPT_OFF = 0
# Encryption is available and on.
TDS_ENCRYPT_ON = 1
# Encryption is not available.
TDS_ENCRYPT_NOT_SUP = 2
# Encryption is required.
TDS_ENCRYPT_REQ = 3
# Certificate-based authentication (a bit flag: 0x80 in MS-TDS, not decimal 80)
TDS_ENCRYPT_CLIENT_CERT = 0x80
```

These values imply that TLS is not necessarily required at all, which is quite interesting. Scrolling down, you’ll find the login function:

```python
def login(self, database, username, password='', domain='', hashes = None, useWindowsAuth = False):
    if hashes is not None:
        lmhash, nthash = hashes.split(':')
        lmhash = binascii.a2b_hex(lmhash)
        nthash = binascii.a2b_hex(nthash)
    else:
        lmhash = ''
        nthash = ''

    resp = self.preLogin()
    # Test this!
    if resp['Encryption'] == TDS_ENCRYPT_REQ or resp['Encryption'] == TDS_ENCRYPT_OFF:
        LOG.info("Encryption required, switching to TLS")

        # Switching to TLS now
        ctx = SSL.Context(SSL.TLS_METHOD)
        ctx.set_cipher_list('ALL:@SECLEVEL=0'.encode('utf-8'))
        tls = SSL.Connection(ctx,None)
        tls.set_connect_state()
        while True:
            try:
                tls.do_handshake()
            except SSL.WantReadError:
                data = tls.bio_read(4096)
                self.sendTDS(TDS_PRE_LOGIN, data,0)
                tds = self.recvTDS()
                tls.bio_write(tds['Data'])
            else:
                break
```

As you can see, the pre-login packet is sent before any login occurs. Based on the server’s response (specifically the Encryption field), the client then creates a TLS context and drives the TLS handshake on its own, wrapping each handshake record in a TDS PRE_LOGIN packet. I had never seen such behaviour before this research, until I realised that this mechanism, the one responsible for switching from a non-TLS communication to a TLS-protected one, is essentially a STARTTLS mechanism, like the one supported by protocols such as SMTP and LDAP. For now we’ll ignore it, but later in this blog post you’ll see it will be a pain in the ass!
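To make the pre-login negotiation a bit more concrete, here is a minimal standalone sketch that parses a PRELOGIN payload and reports what the server’s Encryption value implies when the client announced ENCRYPT_OFF (as preLogin does). The option-table layout comes from the MS-TDS specification; the mapping in `encryption_scope` is my reading of its encryption table rather than Impacket code, so treat it as an assumption, and both function names are hypothetical.

```python
import struct

# PRELOGIN option tokens (MS-TDS); only the ones this sketch needs
PL_OPTION_VERSION = 0x00
PL_OPTION_ENCRYPTION = 0x01
PL_OPTION_TERMINATOR = 0xFF

# Encryption field values, as defined in tds.py
TDS_ENCRYPT_OFF, TDS_ENCRYPT_ON, TDS_ENCRYPT_NOT_SUP, TDS_ENCRYPT_REQ = 0, 1, 2, 3
TDS_ENCRYPT_CLIENT_CERT = 0x80


def parse_prelogin(payload: bytes) -> dict[int, bytes]:
    """Parse the PRELOGIN option table.

    Each entry is a token (1 byte), an offset and a length (2 bytes each,
    big-endian); offsets are relative to the start of the payload.
    """
    options = {}
    pos = 0
    while pos < len(payload) and payload[pos] != PL_OPTION_TERMINATOR:
        token, offset, length = struct.unpack(">BHH", payload[pos:pos + 5])
        options[token] = payload[offset:offset + length]
        pos += 5
    return options


def encryption_scope(server_encryption: int) -> str:
    """Assumed outcome when the client sent ENCRYPT_OFF (hypothetical helper)."""
    server_encryption &= ~TDS_ENCRYPT_CLIENT_CERT  # ignore the client-certificate bit
    if server_encryption == TDS_ENCRYPT_OFF:
        return "login packet only"
    if server_encryption in (TDS_ENCRYPT_ON, TDS_ENCRYPT_REQ):
        return "entire connection"
    if server_encryption == TDS_ENCRYPT_NOT_SUP:
        return "nothing"
    raise ValueError(f"unexpected Encryption value: {server_encryption:#x}")
```

If this reading is right, a server answering ENCRYPT_OFF still gets the LOGIN7 packet (and therefore the credentials) wrapped in TLS, which would explain why the login function switches to TLS for both TDS_ENCRYPT_REQ and TDS_ENCRYPT_OFF.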

Looking at the rest of the login function, we see the following:

```python
# First a TDS authentication packet is instantiated
login = TDS_LOGIN()
# [...] A bunch of variables are set

# If Windows Active Directory authentication is used then
if useWindowsAuth is True:
    login['OptionFlags2'] |= TDS_INTEGRATED_SECURITY_ON
    # We obtain an NTLMSSP_NEGOTIATE message
    auth = ntlm.getNTLMSSPType1('','')
    login['SSPI'] = auth.getData()

login['Length'] = len(login.getData())

# We send the NTLMSSP_NEGOTIATE message to the SQL server
self.sendTDS(TDS_LOGIN7, login.getData())

# We receive the response (the NTLMSSP_CHALLENGE message)
tds = self.recvTDS()

# From which we extract the server challenge (skipping the 1-byte SSPI token type and its 2-byte length)
serverChallenge = tds['Data'][3:]

# That we use to compute the NTLMSSP_AUTHENTICATE message that we'll use to actually authenticate
type3, exportedSessionKey = ntlm.getNTLMSSPType3(auth, serverChallenge, username, password, domain, lmhash, nthash)

# We send the packet
self.sendTDS(TDS_SSPI, type3.getData())

# Receive the response from the server
tds = self.recvTDS()
self.replies = self.parseReply(tds['Data'])

# And if we get a TDS_LOGINACK_TOKEN response then we are authenticated and get access
if TDS_LOGINACK_TOKEN in self.replies:
    return True
# Else we don't
else:
    return False
```

Nothing fancy, that’s standard NTLM authentication. Now, looking at Zblurx’s code for the LDAP protocol, we see that the following function, which computes the Channel Binding Token, was added:

```python
def generateChannelBindingValue(self):
    # From: https://github.com/ly4k/ldap3/commit/87f5760e5a68c2f91eac8ba375f4ea3928e2b9e0#diff-c782b790cfa0a948362bf47d72df8ddd6daac12e5757afd9d371d89385b27ef6R1383
    from hashlib import md5
    # Ugly but effective, to get the digest of the X509 DER in bytes
    peer_cert_digest_str = self._socket.get_peer_certificate().digest('sha256').decode()
    peer_cert_digest_bytes = bytes.fromhex(peer_cert_digest_str.replace(':', ''))

    channel_binding_struct = b''
    initiator_address = b'\x00'*8
    acceptor_address = b'\x00'*8

    # https://datatracker.ietf.org/doc/html/rfc5929#section-4
    application_data_raw = b'tls-server-end-point:' + peer_cert_digest_bytes
    len_application_data = len(application_data_raw).to_bytes(4, byteorder='little', signed = False)
    application_data = len_application_data
    application_data += application_data_raw
    channel_binding_struct += initiator_address
    channel_binding_struct += acceptor_address
    channel_binding_struct += application_data
    return md5(channel_binding_struct).digest()
```

Then the NTLM authentication code was modified so that the CBT is passed to getNTLMSSPType3 and the MIC is computed over the three messages:

# channel binding

channel_binding_value = b''

if self._SSL:

channel_binding_value = self.generateChannelBindingValue()

# NTLM Auth

type3, exportedSessionKey = getNTLMSSPType3(

negotiate,

type2,

user,

password,

domain,

lmhash,

nthash,

service='ldap',

version=self.version,

use_ntlmv2=True,

channel_binding_value=channel_binding_value

)

# calculate MIC

newmic = hmac_md5(exportedSessionKey, negotiate.getData() + NTLMAuthChallenge(type2).getData() + type3.getData())

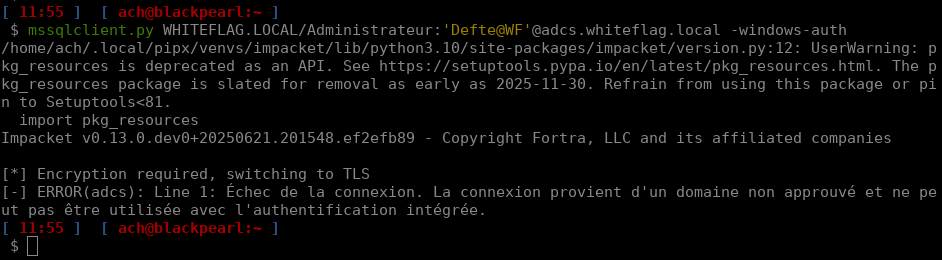

type3['MIC'] = newmicNaively enough, I thought copy/pasting these two snippets would be enough but on launching mssqlclient.py I realised that…

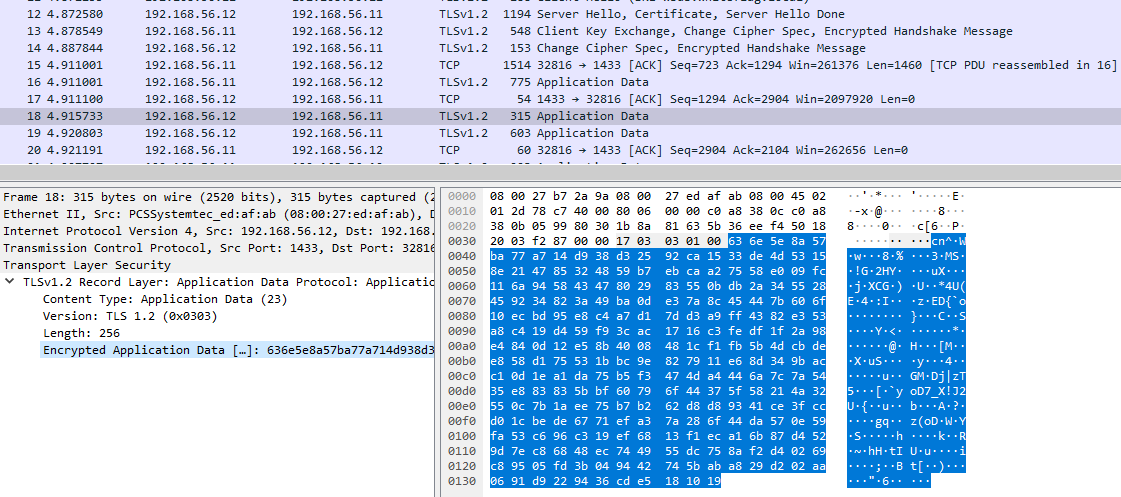

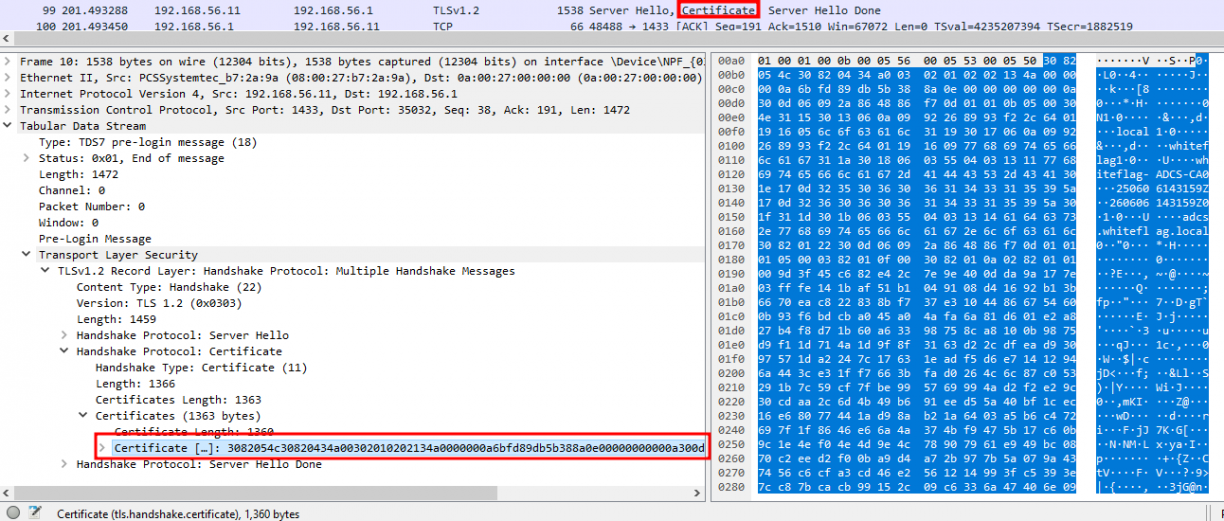

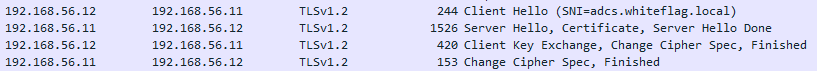

It was not working. I guess we’ll have to get our hands dirty, right? The question is, how do we find out what’s going wrong? Well, we know for sure that at least one official tool from the MSSQL toolkit supports authentication with Channel Binding: SQLCMD.exe. But it communicates over an encrypted TLS tunnel:

So the first thing we’ll need to do is to downgrade our Windows Server TLS configuration so that we can actually see what’s going through it.

3/ Downgrading Windows security

There are two things that prevent us from inspecting the TLS traffic in which the NTLM authentication occurs:

- By default, MSSQL servers rely on a self-signed certificate whose private key we can’t extract;

- MSSQL servers prefer TLS 1.3, which relies on ephemeral Diffie-Hellman key exchange, meaning that even if we extract the private key, we won’t be able to decrypt the TLS traffic.

So let’s get rid of both obstacles first!

- Configuring a real TLS certificate with an exportable private key:

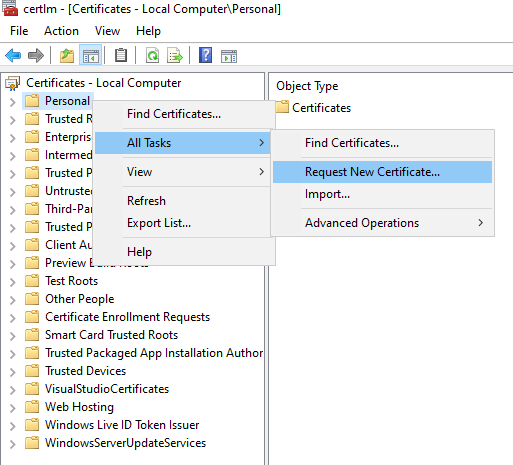

When dealing with certificates in an Active Directory environment, the easiest way to make sure everything works correctly is to rely on the AD CS service. You’ll therefore need to install and configure it with a valid Certificate Authority. Once done, using certlm (the local machine certificate console, as opposed to certmgr, which manages your current user’s certificates), you’ll be able to request the certificate by right-clicking on “Personal” > “Request New Certificate”:

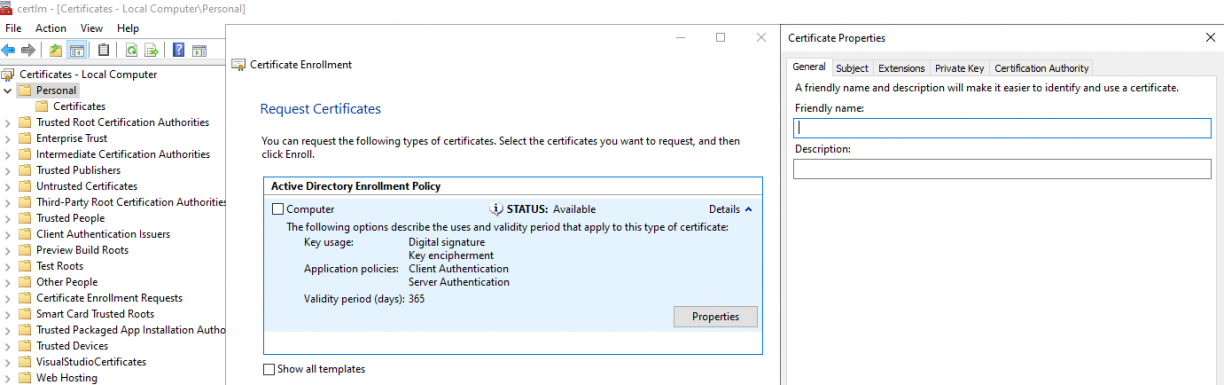

Click Next until you reach the following panel, which lets you set specific options for the private key:

This one is very important because that’s where you state that the private key bound to that certificate can be exported:

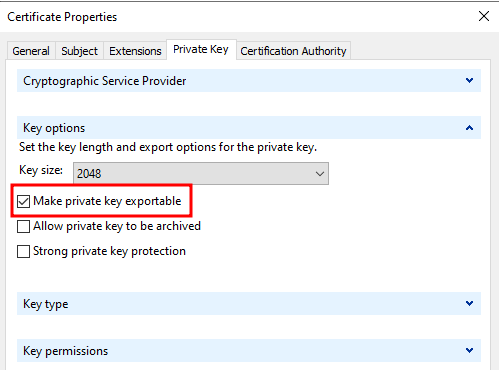

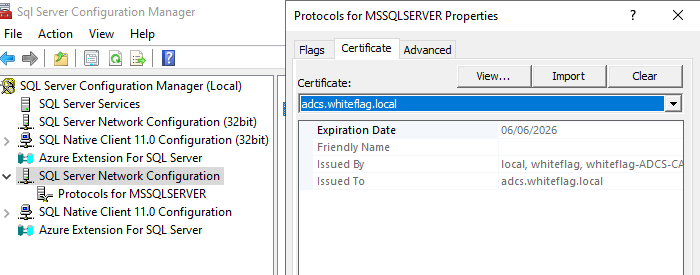

Once the certificate is obtained, you can configure your MSSQL server to use it via the import button on the MSSQL MMC:

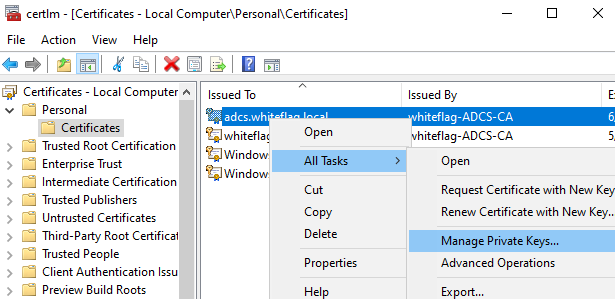

Finally, you’ll have to make sure that the private key can be read by the account running the MSSQL server. To do so, open certlm again, right click on the certificate we just requested, click on “All Tasks” then “Manage Private Keys”:

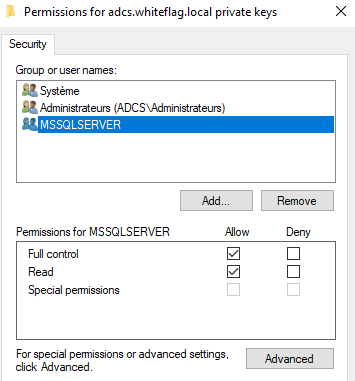

And now make sure the user that is running the MSSQL database can read it:

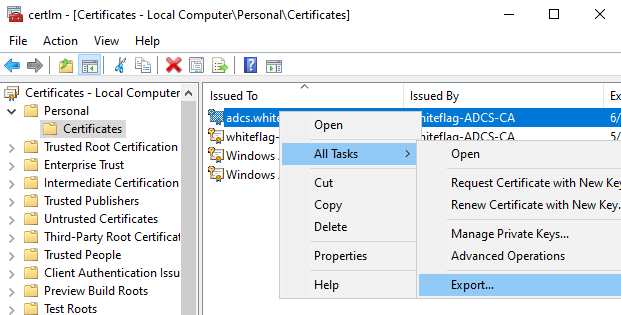

Once done, restart your MSSQL server so that it starts using the certificate. You’ll also be able to export the private key by right-clicking on the certificate, then “All Tasks” and finally “Export”:

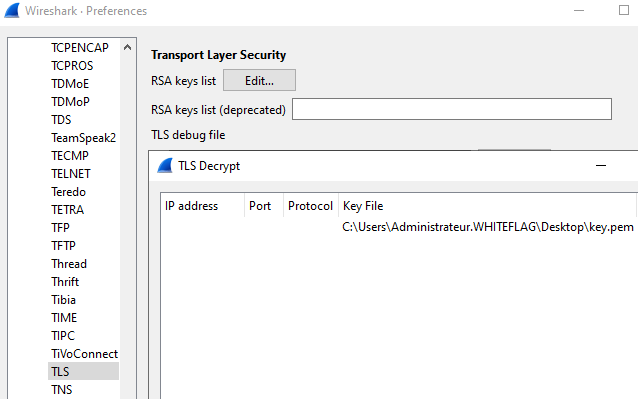

The last step is to configure Wireshark to use that key to decrypt TLS traffic (Edit > Preferences > Protocols > TLS):

Wireshark is now ready to decrypt the traffic. Next, let’s disable TLS 1.3.

- Disabling TLS 1.3

TLS 1.3 is much more secure than previous TLS versions, and one reason matters here: it only allows ephemeral (Elliptic Curve) Diffie-Hellman key exchange. Unlike the classic RSA key exchange, in which the client encrypts a secret with the server’s public key, the keys used to encrypt and decrypt the communications are derived from ephemeral values generated during each TLS handshake and never sent over the wire. That means that even if an attacker gets hold of a certificate’s private key, they won’t be able to decrypt current or past sessions (a property known as forward secrecy).

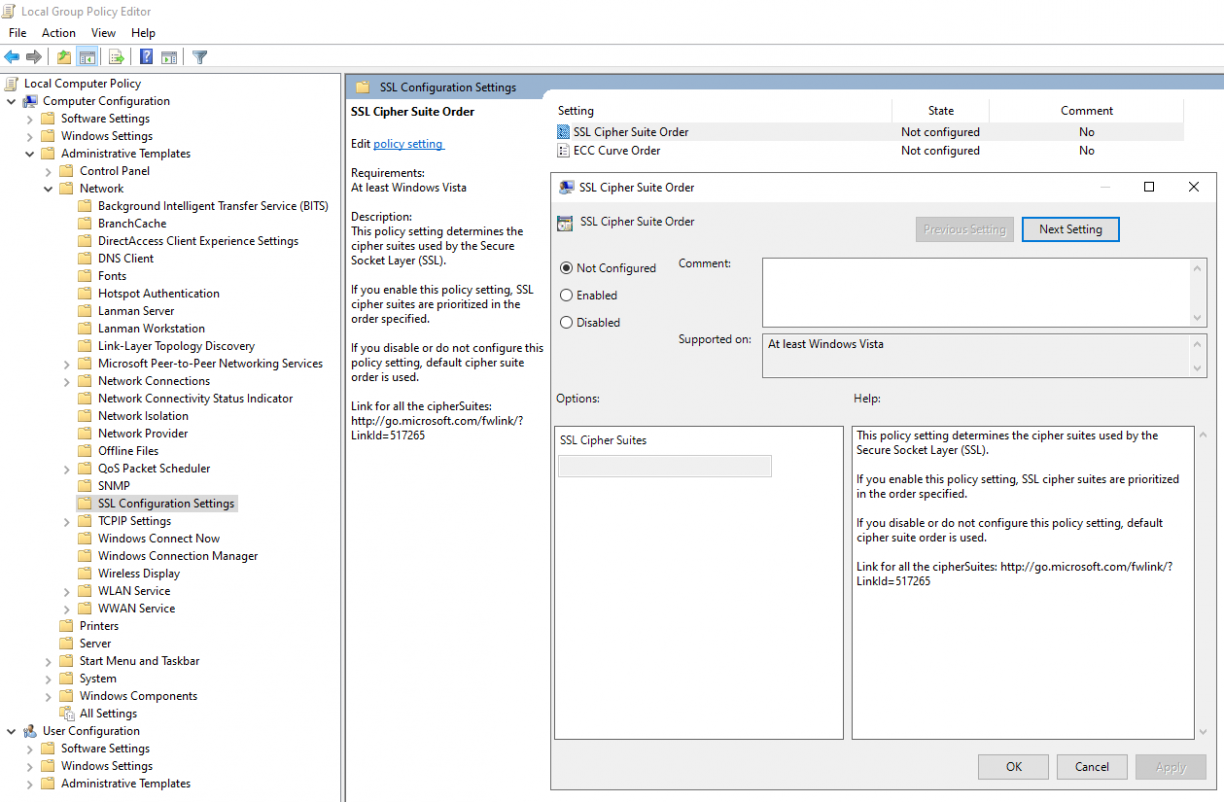
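To make the forward secrecy argument concrete, here is a toy comparison with deliberately tiny, insecure numbers: textbook RSA without padding stands in for the TLS_RSA_* key transport, and modular exponentiation in a toy group stands in for (EC)DHE. It only illustrates the principle; it is not how Schannel or OpenSSL implement either exchange, and all names are hypothetical.

```python
import secrets

# Toy textbook RSA (p = 61, q = 53), standing in for TLS_RSA_* key transport.
# NOT secure: tiny numbers and no padding.
N, E, D = 3233, 17, 2753  # D plays the role of the certificate's private key


def rsa_wrap_premaster(premaster: int) -> int:
    """Client side: encrypt the premaster secret with the server's public key."""
    return pow(premaster, E, N)  # this value travels on the wire


def rsa_unwrap_premaster(wire_value: int) -> int:
    """Anyone holding D (the server, or an attacker with the key) recovers it."""
    return pow(wire_value, D, N)


# Toy finite-field Diffie-Hellman, standing in for (EC)DHE.
# NOT secure: 2**127 - 1 is prime but nowhere near a real TLS group.
P, G = 2**127 - 1, 3


def ephemeral_share() -> tuple[int, int]:
    """Pick a fresh secret exponent and return it with the public value G^x mod P."""
    secret = secrets.randbelow(P - 3) + 2
    return secret, pow(G, secret, P)


def dh_shared_secret(my_secret: int, peer_public: int) -> int:
    return pow(peer_public, my_secret, P)


if __name__ == "__main__":
    wire = rsa_wrap_premaster(65)
    print("RSA: recorded", wire, "-> recovered with D:", rsa_unwrap_premaster(wire))
    client_secret, client_public = ephemeral_share()
    server_secret, server_public = ephemeral_share()
    # Only the two public values cross the wire; D is never involved
    assert dh_shared_secret(client_secret, server_public) == dh_shared_secret(server_secret, client_public)
```

With RSA key transport, anyone holding D can decrypt a recorded handshake. With ephemeral Diffie-Hellman, the certificate key only authenticates the handshake (for instance by signing the server’s ephemeral share) and the secret exponents never leave each side, which is why forcing RSA key exchange on the test server lets Wireshark decrypt the traffic.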

When it comes to privacy, that’s great, but when you want to inspect TLS messages it makes life much harder. That’s why we’ll simply disable TLS 1.3 on our test server. It may look complicated, but it’s actually quite easy: since TLS 1.3 only supports cipher suites based on Diffie-Hellman key exchange, configuring our server to offer only cipher suites that use RSA key exchange rules TLS 1.3 out (along with the forward-secret TLS 1.2 suites). This can be done by modifying the following GPO setting:

Computer Configuration > Administrative Templates > Network > SSL Configuration Settings > SSL Cipher Suite Order

Then set it to cipher suites that rely on RSA key exchange, such as the following ones:

`TLS_RSA_WITH_AES_256_CBC_SHA256,TLS_RSA_WITH_AES_128_CBC_SHA256`

Then apply the update with gpupdate:

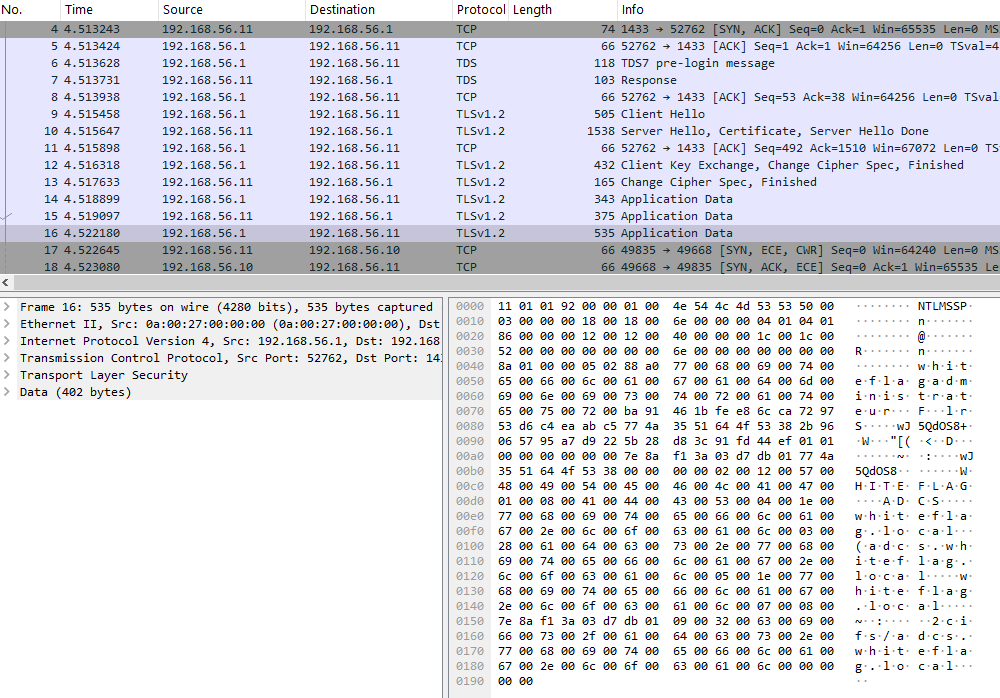

`gpupdate /force`

And here are our cleartext NTLM authentication messages:

4/ About NTLM, Channel Binding and MIC

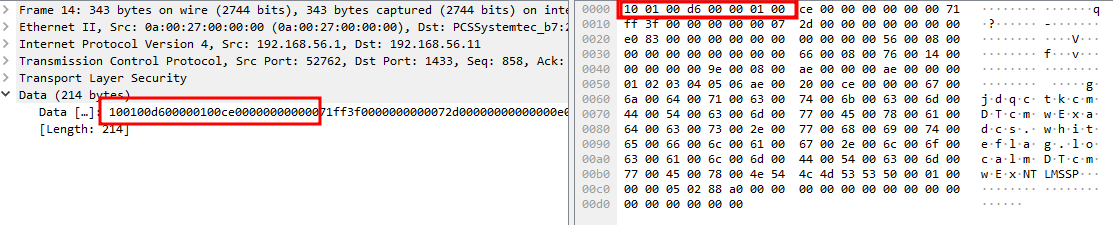

At this point, we can obtain the cleartext NTLM messages that a legitimate tool supporting Channel Binding (SQLCMD.exe) uses to authenticate. My idea was to inspect these packets to figure out what my implementation was missing. Normally, Wireshark can dissect an NTLM message and display all its fields, but that doesn’t work here since the NTLM data is embedded inside the “Data” field of the TDS packet:

That led me to develop an NTLM parser that can extract all the values from a hexadecimal string passed as an argument, which I’ve included in the Appendix at the end of this post.

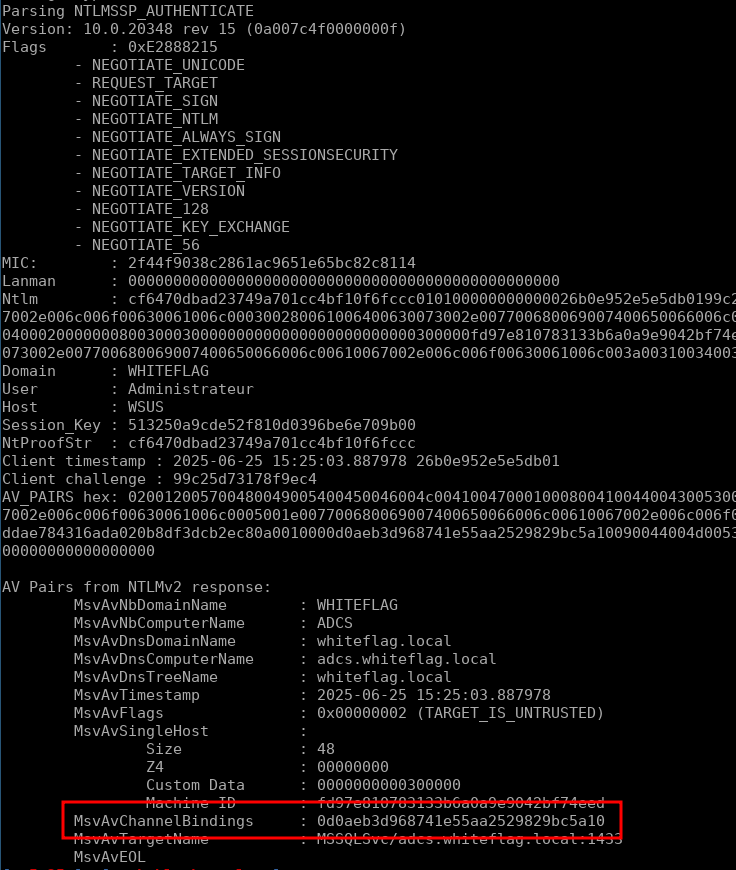

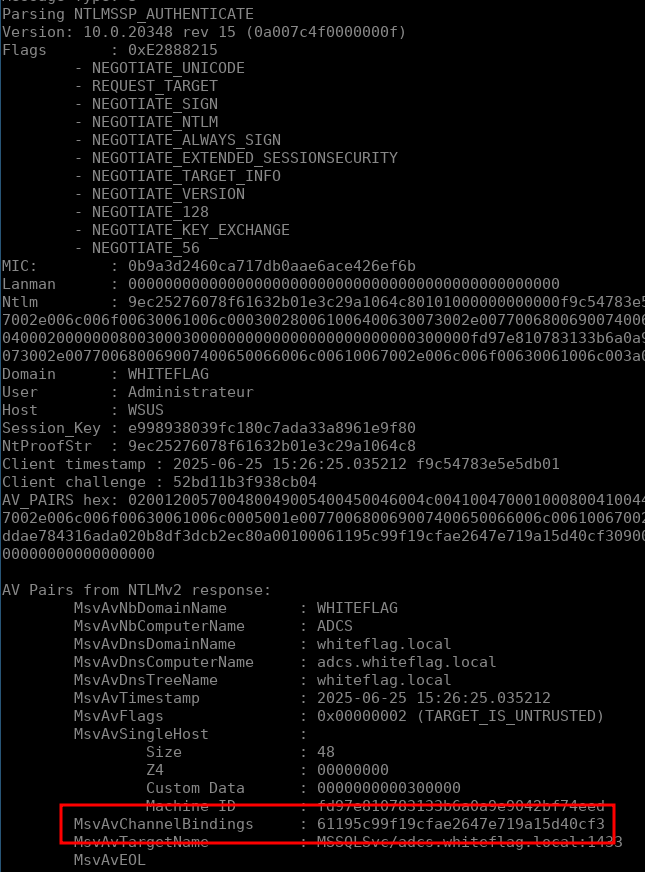

Using this script, we can dissect the three messages SQLCMD.exe uses to authenticate to the remote MSSQL server with Channel Binding. Diving into NTLM internals would make this blog post far too long, and it has already been explained very well in multiple other posts, so I’ll skip that part and jump directly to the most important one: the NTLMSSP_AUTHENTICATE message. Running our NTLM parser on an NTLMSSP_AUTHENTICATE message sent by SQLCMD.exe, we get the following output:

As you can see, this message contains quite a lot of information. Most importantly, it contains:

- The NTLM response (ntlm field), in which we’ll find the NtProofStr, an HMAC-MD5 of the server challenge and the client’s blob, keyed with a value derived from the user’s NT hash;

- The session key that will be used to sign packets if signing is activated;

- The MIC, an HMAC-MD5 computed over the three NTLM messages (NEGOTIATE, CHALLENGE and AUTHENTICATE) using the exported session key.
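Here is a minimal sketch of the MIC computation described above, mirroring the `hmac_md5(exportedSessionKey, ...)` line from the LDAP snippet: HMAC-MD5 over the concatenated NEGOTIATE, CHALLENGE and AUTHENTICATE messages, with the AUTHENTICATE message’s MIC field zeroed. It assumes the AUTHENTICATE message carries the 8-byte VERSION field, which puts the MIC at offset 72; `compute_mic` and `mic_is_valid` are hypothetical names.

```python
import hashlib
import hmac

# Assumption: the AUTHENTICATE message carries the 8-byte VERSION field, so the
# MIC sits right after it: 8 (signature) + 4 (type) + 6 * 8 (security buffers)
# + 4 (flags) + 8 (version) = offset 72.
MIC_OFFSET = 72
MIC_LENGTH = 16


def compute_mic(session_key: bytes, negotiate: bytes, challenge: bytes, authenticate: bytes) -> bytes:
    """HMAC-MD5 over NEGOTIATE || CHALLENGE || AUTHENTICATE, with the MIC field zeroed."""
    zeroed = authenticate[:MIC_OFFSET] + b"\x00" * MIC_LENGTH + authenticate[MIC_OFFSET + MIC_LENGTH:]
    return hmac.new(session_key, negotiate + challenge + zeroed, hashlib.md5).digest()


def mic_is_valid(session_key: bytes, negotiate: bytes, challenge: bytes, authenticate: bytes) -> bool:
    """Recompute the MIC from the three messages and compare it in constant time."""
    received = authenticate[MIC_OFFSET:MIC_OFFSET + MIC_LENGTH]
    return hmac.compare_digest(received, compute_mic(session_key, negotiate, challenge, authenticate))
```

Since the MsvAvChannelBindings AV pair lives inside the NTLMv2 response, which is also an input to the NtProofStr, a relaying attacker can neither strip nor swap it without invalidating the response, and the MIC additionally ties the three messages together.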

Looking at the AV_PAIRS, we see that, as expected, the Channel Binding Token is supplied:

`MsvAvChannelBindings : 0c237bcdc07178a5c801f2446e7ce6b9`

For a couple of days, I struggled to understand what was missing, since the code we saw earlier should have computed the correct CBT value by hashing the server’s TLS certificate and appending the result to the “tls-server-end-point:” string. I wrote standalone Python scripts to check that value, even Bash scripts, but it wasn’t working… until I realised that something was really off. Let’s take a look at two different legitimate authentications from SQLCMD.exe:

- First authentication:

- Second authentication:

That’s weird: the CBT values are different, but they shouldn’t be if they were computed from the same certificate (the one we generated earlier). That means the CBT is not computed from the MSSQL server’s TLS certificate but from something else. So I kept digging until I realised that there isn’t a single way of computing a Channel Binding Token, but three:

- tls-server-end-point:

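This is the one used when connecting to LDAPS. The overall idea is simple: we extract the server’s TLS certificate:

We then hash it with SHA-256, prefix the result with the “tls-server-end-point:” string, and hash the whole structure with MD5. (Strictly speaking, RFC 5929 says to use the hash function of the certificate’s signature algorithm, upgrading MD5 and SHA-1 to SHA-256, which means SHA-256 for most certificates.) Since the certificate doesn’t change, this method always yields the exact same token.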

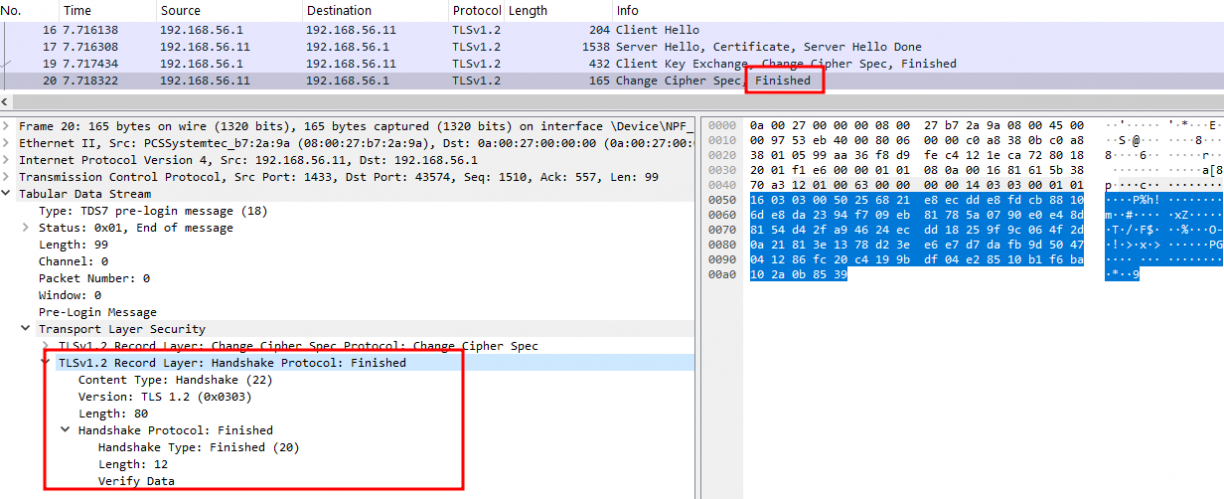

- tls-unique:

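This CBT is computed from the first “Finished” message of the most recent TLS handshake (in a full handshake, the one sent by the client). Since that message depends on random values exchanged during the handshake, it is different for every TLS session: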

- tls-exporter:

This one is actually much harder to explain as it is computed from:

- The master secret (or traffic secret);

- The handshake messages;

- The exporter label (“EXPORTER-Channel-Binding”);

- A context value (left empty for tls-exporter by RFC 9266).

From all of that, the CBT is derived with a pseudorandom function (PRF), roughly as follows (RFC 9266 asks for 32 bytes of output):

$$\text{tls-exporter} = \mathrm{PRF}(\text{master\_secret}, \text{label}, \text{context\_value}, \text{length})$$

As much as I’d love to tell you more about this, it goes beyond my own understanding, and we won’t need it, so let’s just skip it.
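To see why the tls-server-end-point value never changes while the tls-unique one does, here is a minimal sketch of both computations, reusing the channel bindings layout from the generateChannelBindingValue snippet (16 zero bytes for the empty initiator and acceptor addresses, then a little-endian length and the application data). The function names are hypothetical and, like that snippet, the sketch assumes a SHA-256 certificate hash.

```python
import hashlib


def gss_channel_bindings_md5(application_data: bytes) -> bytes:
    """MD5 of a channel bindings structure with empty initiator/acceptor addresses."""
    initiator = b"\x00" * 8  # initiator addrtype (4 bytes) + empty address length (4 bytes)
    acceptor = b"\x00" * 8   # acceptor addrtype (4 bytes) + empty address length (4 bytes)
    app_data = len(application_data).to_bytes(4, "little") + application_data
    return hashlib.md5(initiator + acceptor + app_data).digest()


def tls_server_end_point_cbt(server_cert_der: bytes) -> bytes:
    """Depends only on the certificate, so it is identical for every connection."""
    # Assumes a SHA-256 certificate hash, like the LDAP snippet
    return gss_channel_bindings_md5(b"tls-server-end-point:" + hashlib.sha256(server_cert_der).digest())


def tls_unique_cbt(first_finished: bytes) -> bytes:
    """Depends on the handshake's first Finished message, so it changes with every session."""
    return gss_channel_bindings_md5(b"tls-unique:" + first_finished)
```

With Python’s ssl module, the DER certificate would come from `getpeercert(binary_form=True)` and the Finished bytes from `get_channel_binding("tls-unique")`; two connections to the same server give the same tls-server-end-point value but different tls-unique values.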

At this point, we know for sure that SQLCMD.exe does not compute the CBT with the tls-server-end-point method, since the value changes after each authentication attempt.

That leaves us with two candidates: tls-exporter and tls-unique. The thing is, in the Microsoft world, the component that handles SSL/TLS is Schannel (which you might already have used when connecting to LDAP with a certificate). Although Schannel supports SSL 2.0 and 3.0 as well as TLS 1.0 through 1.3, it only partially supports tls-exporter, which strongly suggests that the CBT is computed with the tls-unique mechanism.

All we have to do now is find a way to get that value. Easy, right? Actually, not that easy. Before my PR, Impacket was using pyOpenSSL to handle the TLS layer, and that library does not expose the tls-unique value directly. Python’s standard ssl module, however, gives access to it with a single line of code. That means we have to rewrite the entire TLS stack of the TDS.py script, and especially its STARTTLS mechanism. Let’s dig a little into how that thing works. Here is the code:

```python
resp = self.preLogin()

if resp['Encryption'] == TDS_ENCRYPT_REQ or resp['Encryption'] == TDS_ENCRYPT_OFF:
    LOG.info("Encryption required, switching to TLS")

    ctx = SSL.Context(SSL.TLS_METHOD)
    ctx.set_cipher_list('ALL:@SECLEVEL=0'.encode('utf-8'))
    tls = SSL.Connection(ctx,None)
    tls.set_connect_state()
    while True:
        try:
            tls.do_handshake()
        except SSL.WantReadError:
            data = tls.bio_read(4096)
            self.sendTDS(TDS_PRE_LOGIN, data,0)
            tds = self.recvTDS()
            tls.bio_write(tds['Data'])
        else:
            break

    self.packetSize = 16*1024-1
    self.tlsSocket = tls
```

As I mentioned before, a pre-login packet is sent in which the client specifies whether it supports encryption. If the server supports it (or requires it), a TLS tunnel must be created. To do so, we first need to create a TLS context:

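```python
ctx = SSL.Context(SSL.TLS_METHOD)
```

Then we initialise the list of ciphers we want to use (all of them, actually, including weak ones, since `@SECLEVEL=0` lowers OpenSSL’s security level to its minimum):

```python
ctx.set_cipher_list('ALL:@SECLEVEL=0'.encode('utf-8'))
```

And finally we build the TLS engine. Passing `None` instead of a socket makes pyOpenSSL work on in-memory buffers, which is why the handshake loop feeds and reads the TLS records itself with bio_write and bio_read:

```python
tls = SSL.Connection(ctx, None)
tls.set_connect_state()
```

Once the engine is ready, we need to handle the handshake, during which the client and the server agree on the TLS version and the cipher suite they will use: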

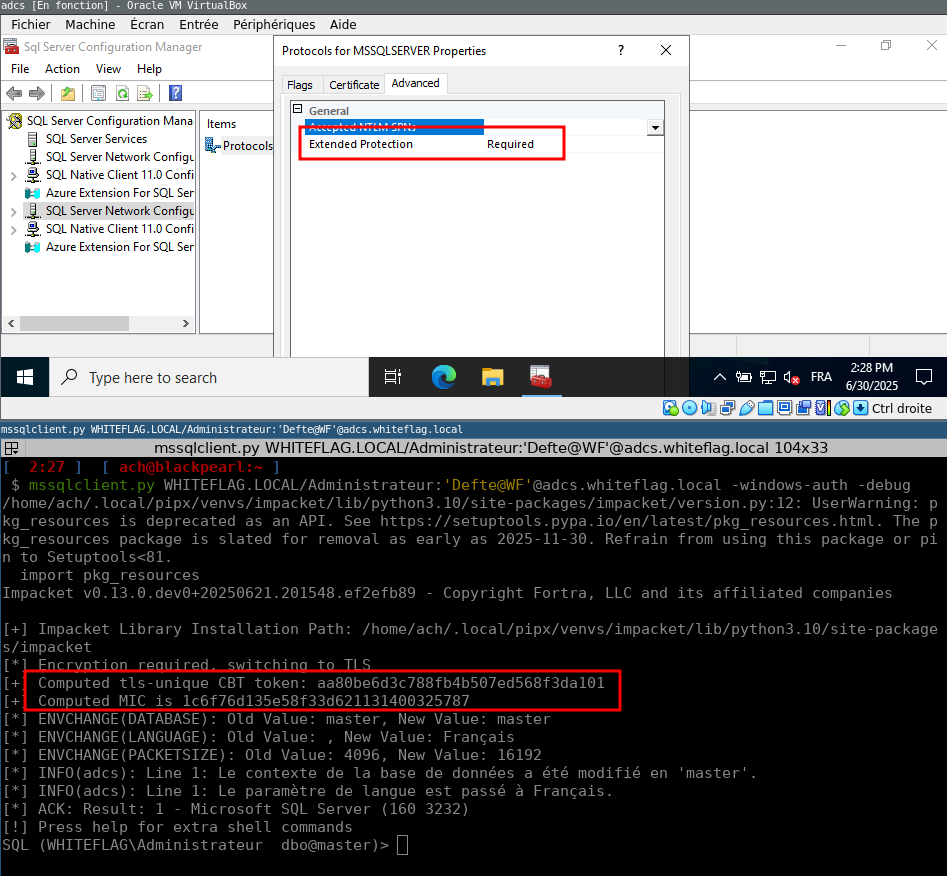
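Since the standard ssl module is what exposes tls-unique, here is a minimal sketch of the same in-memory handshake loop built on `ssl.MemoryBIO` instead of pyOpenSSL. It is not the code from my PR: `send_prelogin` and `recv_prelogin` are hypothetical callbacks standing in for `sendTDS(TDS_PRE_LOGIN, ...)` and `recvTDS()`, and the lab-only settings (no certificate validation, every cipher allowed) mirror Impacket’s permissive pyOpenSSL context.

```python
import ssl
from typing import Callable


def tls_handshake_in_memory(
    send_prelogin: Callable[[bytes], None],
    recv_prelogin: Callable[[], bytes],
) -> tuple[ssl.SSLObject, ssl.MemoryBIO, ssl.MemoryBIO]:
    """Drive a client TLS handshake whose records are carried by the caller.

    In TDS 7.x, send_prelogin/recv_prelogin (hypothetical callbacks) would
    wrap/unwrap the TLS records in TDS PRE_LOGIN packets.
    """
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # Lab-only settings, mirroring Impacket's permissive pyOpenSSL context
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    ctx.set_ciphers("ALL:@SECLEVEL=0")
    # tls-unique is not defined for TLS 1.3 (RFC 9266), so stay on TLS 1.2 or lower
    ctx.maximum_version = ssl.TLSVersion.TLSv1_2

    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ctx.wrap_bio(incoming, outgoing, server_side=False)
    while True:
        try:
            tls.do_handshake()
            break
        except ssl.SSLWantReadError:
            # Ship whatever OpenSSL produced, then wait for the server's answer
            pending = outgoing.read()
            if pending:
                send_prelogin(pending)
            data = recv_prelogin()
            if not data:
                raise ConnectionError("peer closed the connection during the TLS handshake")
            incoming.write(data)

    # Flush anything the final do_handshake() call produced
    pending = outgoing.read()
    if pending:
        send_prelogin(pending)
    return tls, incoming, outgoing
```

Once it returns, `tls.get_channel_binding("tls-unique")` gives the bytes fed to the CBT computation; application data goes out through `tls.write()` then `outgoing.read()`, and comes back in through `incoming.write()` then `tls.read()`.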

Once the handshake is complete, we have a fully working TLS engine that we use to encrypt the data we send to the server and decrypt the data we read from it. If you want a closer look at how I handled that, take a look at the PR. The logic itself is not that complicated, but keep in mind that since MSSQL servers do not always use TLS, I had to rewrite part of the socket handling so that it works both with and without a TLS context. Overall, the general idea can be summarized with the following schema:

And here is how we can get the tls-unique value:

```python
self.tls_unique = tls.get_channel_binding("tls-unique")
```

And finally, we compute the Channel Binding Token with almost exactly the same piece of code we saw earlier:

```python
from hashlib import md5


def generate_cbt_from_tls_unique(self) -> bytes:
    channel_binding_struct = b""
    initiator_address = b"\x00" * 8
    acceptor_address = b"\x00" * 8
    application_data_raw = b"tls-unique:" + self.tls_unique  # This was tls-server-end-point before
    len_application_data = len(application_data_raw).to_bytes(4, byteorder="little", signed=False)
    application_data = len_application_data
    application_data += application_data_raw
    channel_binding_struct += initiator_address
    channel_binding_struct += acceptor_address
    channel_binding_struct += application_data
    cbt_token = md5(channel_binding_struct).digest()
    LOG.debug(f"Computed tls-unique CBT token: {cbt_token.hex()}")
    return cbt_token
```

Enable TLS and Extended Protection on the MSSQL server, launch mssqlclient.py and…

Here is our shell!

5/ Conclusion

Although we can now connect to MSSQL databases that require CBT, we’re not done yet. While reviewing the PR, gabrielg5 (thanks for the review, mate, that was really fun) and I realised that even though tds.py now supports CBT, it still doesn’t handle the latest version of the TDS protocol (TDS 8.0), which differs from the one we worked on (TDS 7.x).

In fact, version 8 of TDS no longer relies on the STARTTLS mechanism. Instead, it establishes a direct TLS socket to the MSSQL server. This means that, moving forward, we’ll need to develop a tds.py script capable of handling cleartext communication, encrypted communication via in-memory TLS computations, and direct TLS sockets…

Yeah, there’s still a lot of work (and headaches) ahead, but that will be for later, considering that TDS 8 is only supported by MSSQL 2022 and 2025. That leaves me time to integrate all these changes into NetExec for easier internal pownage ;)!

Happy hacking, folks!

Appendix

The NTLM parser.

```python
import binascii
import datetime
import struct
from sys import argv

# NTLM negotiate flags
NTLM_FLAGS = {
    0x00000001: "NEGOTIATE_UNICODE",
    0x00000002: "NEGOTIATE_OEM",
    0x00000004: "REQUEST_TARGET",
    0x00000010: "NEGOTIATE_SIGN",
    0x00000020: "NEGOTIATE_SEAL",
    0x00000040: "NEGOTIATE_DATAGRAM",
    0x00000080: "NEGOTIATE_LM_KEY",
    0x00000200: "NEGOTIATE_NTLM",
    0x00000800: "NEGOTIATE_ANONYMOUS",
    0x00001000: "NEGOTIATE_OEM_DOMAIN_SUPPLIED",
    0x00002000: "NEGOTIATE_OEM_WORKSTATION_SUPPLIED",
    0x00008000: "NEGOTIATE_ALWAYS_SIGN",
    0x00010000: "TARGET_TYPE_DOMAIN",
    0x00020000: "TARGET_TYPE_SERVER",
    0x00040000: "TARGET_TYPE_SHARE",
    0x00080000: "NEGOTIATE_EXTENDED_SESSIONSECURITY",
    0x00100000: "NEGOTIATE_IDENTIFY",
    0x00400000: "REQUEST_NON_NT_SESSION_KEY",
    0x00800000: "NEGOTIATE_TARGET_INFO",
    0x02000000: "NEGOTIATE_VERSION",
    0x20000000: "NEGOTIATE_128",
    0x40000000: "NEGOTIATE_KEY_EXCHANGE",
    0x80000000: "NEGOTIATE_56",
}

# AV_PAIR identifiers
AV_IDS = {
    0x00: "MsvAvEOL",
    0x01: "MsvAvNbComputerName",
    0x02: "MsvAvNbDomainName",
    0x03: "MsvAvDnsComputerName",
    0x04: "MsvAvDnsDomainName",
    0x05: "MsvAvDnsTreeName",
    0x06: "MsvAvFlags",
    0x07: "MsvAvTimestamp",
    0x08: "MsvAvSingleHost",
    0x09: "MsvAvTargetName",
    0x0A: "MsvAvChannelBindings",
    0x0B: "MsvAvTargetFlags",
    0x0C: "MsvAvServicePrincipalName",
    0x0D: "MsvAvTicketHint",
    0x0E: "MsvAvTicketServerName",
    0x0F: "MsvAvTicketRealmName",
    0x10: "MsvAvTargetRestriction",
    0x11: "MsvAvTargetCapability",
    0x12: "MsvAvClientClaims",
    0x13: "MsvAvDeviceClaims",
    0x14: "MsvAvDeviceId",
    0x15: "MsvAvAccountId",
    0x16: "MsvAvIntegrity",
}


def decode_utf16le(value):
    try:
        return value.decode('utf-16le')
    except Exception:
        return value.hex()


def parse_av_pairs(blob):
    pos = 0
    print("\nAV Pairs:")
    while pos + 4 <= len(blob):
        av_id, av_len = struct.unpack("<HH", blob[pos:pos + 4])
        pos += 4
        if av_id == 0x00:
            print("\tMsvAvEOL")
            break
        value = blob[pos:pos + av_len]
        name = AV_IDS.get(av_id, f"Unknown_AV_ID_{av_id}")
        if av_id == 0x07 and len(value) == 8:  # Timestamp (FILETIME: 100 ns units since 1601-01-01)
            timestamp = struct.unpack("<Q", value)[0]
            display_value = str(datetime.datetime(1601, 1, 1) + datetime.timedelta(microseconds=timestamp // 10))
        elif av_id == 0x06 and len(value) == 4:  # MsvAvFlags
            flags = struct.unpack("<I", value)[0]
            flag_map = {
                0x00000001: "ACCOUNT_AUTHENTICATION_CONSTRAINED",
                0x00000002: "MIC_PRESENT",
                0x00000004: "SPN_FROM_UNTRUSTED_SOURCE",
                0x00000010: "CLAIMS_SUPPORTED",
                0x00000020: "SPN_CHECK_REQUIRED",
                0x00000100: "CUSTOM_AV_PAIRS_PRESENT",
            }
            active_flags = [desc for bit, desc in flag_map.items() if flags & bit]
            display_value = f"0x{flags:08X} ({', '.join(active_flags) if active_flags else 'None'})"
        elif av_id == 0x08 and len(value) >= 48:  # MsvAvSingleHost
            machine_id = value[16:48]  # MachineID is 32 bytes long
            display_value = (
                f"\n\t\tSize        : {struct.unpack('<I', value[0:4])[0]}\n"
                f"\t\tZ4          : {value[4:8].hex()}\n"
                f"\t\tCustom Data : {value[8:16].hex()}\n"
                f"\t\tMachine ID  : {machine_id.hex()}"
            )
        elif av_id == 0x0A:  # Channel Bindings (an MD5 hash, shown as hex)
            display_value = value.hex()
        else:
            display_value = decode_utf16le(value)
        print(f"\t{name:<25}: {display_value}")
        pos += av_len


def parse_ntlm_message(hex_data):
    data = binascii.unhexlify(hex_data)

    def unpack_field(fmt, offset):
        size = struct.calcsize(fmt)
        return struct.unpack(fmt, data[offset:offset + size])[0], offset + size

    print(f"\nHex NTLM Message: {hex_data}")
    offset = 8  # skip the "NTLMSSP\x00" signature
    message_type, offset = unpack_field('<L', offset)
    print(f"Message Type: {message_type}")

    if message_type == 1:
        print("Parsing NTLMSSP_NEGOTIATE")
        negotiate_flags = struct.unpack("<I", data[12:16])[0]
        for bit, meaning in NTLM_FLAGS.items():
            if negotiate_flags & bit:
                print(f"\t- {meaning}")
        domain_name_length, _, domain_buffer = struct.unpack("<HHI", data[16:24])
        print(f"\tDomain name: {decode_utf16le(data[domain_buffer:domain_buffer + domain_name_length])}")
        workstation_name_length, _, workstation_buffer = struct.unpack("<HHI", data[24:32])
        print(f"\tWorkstation: {decode_utf16le(data[workstation_buffer:workstation_buffer + workstation_name_length])}")
        if negotiate_flags & 0x02000000 and len(data) >= 40:
            version_major = data[32]
            version_minor = data[33]
            version_build = struct.unpack("<H", data[34:36])[0]
            ntlm_revision = data[39]
            print(f"\tVersion: {version_major}.{version_minor} {version_build} {ntlm_revision}")

    elif message_type == 2:
        print("Parsing NTLMSSP_CHALLENGE")
        target_name_len, _, target_name_offset = struct.unpack("<HHI", data[12:20])
        negotiate_flags = struct.unpack("<I", data[20:24])[0]
        print(f"\tNegotiate Flags: 0x{negotiate_flags:08x}:")
        for bit, meaning in NTLM_FLAGS.items():
            if negotiate_flags & bit:
                print(f"\t- {meaning}")
        print(f"\tServer Challenge: {data[24:32].hex()}")
        print(f"\tReserved: {data[32:40].hex()}")
        target_info_len, _, target_info_offset = struct.unpack("<HHI", data[40:48])
        print(f"\tTarget Name: {decode_utf16le(data[target_name_offset:target_name_offset + target_name_len])}")
        print("\tTarget Info (AV Pairs):")
        parse_av_pairs(data[target_info_offset:target_info_offset + target_info_len])

    elif message_type == 3:
        print("Parsing NTLMSSP_AUTHENTICATE")
        fields = {}
        names = ["lanman", "ntlm", "domain", "user", "host", "session_key"]
        for name in names:
            length, offset = unpack_field('<H', offset)
            max_length, offset = unpack_field('<H', offset)
            ptr, offset = unpack_field('<L', offset)
            fields[name] = {'length': length, 'offset': ptr}
        flags, offset = unpack_field('<L', offset)
        if flags & 0x02000000:
            version = data[offset:offset + 8]
            if len(version) >= 8:
                major, minor = version[0], version[1]
                build = struct.unpack("<H", version[2:4])[0]
                rev = version[7]
                print(f"Version: {major}.{minor}.{build} rev {rev} ({version.hex()})")
            offset += 8
        # The 16-byte MIC sits between the fixed header and the payload,
        # so it is present only if the first payload buffer starts after it
        payload_offsets = [f['offset'] for f in fields.values() if f['length']]
        payload_start = min(payload_offsets) if payload_offsets else len(data)
        mic = None
        if payload_start >= offset + 16:
            mic = data[offset:offset + 16]
            offset += 16
        for name in names:
            field = fields[name]
            field['value'] = data[field['offset']:field['offset'] + field['length']]
        print(f"Flags       : 0x{flags:08X}")
        for bit, meaning in NTLM_FLAGS.items():
            if flags & bit:
                print(f"\t- {meaning}")
        if mic:
            print(f"MIC         : {mic.hex()}")
        for name in names:
            raw = fields[name]['value']
            if name in ("lanman", "ntlm", "session_key"):  # binary fields are shown as hex
                print(f"{name.title():<12}: {raw.hex() if raw else '(not present)'}")
            else:
                print(f"{name.title():<12}: {decode_utf16le(raw)}")
        ntlm_value = fields['ntlm']['value']
        if len(ntlm_value) > 16:
            print(f"NtProofStr  : {ntlm_value[:16].hex()}")
        else:
            print("NtProofStr not present (NTLM challenge response too short).")
        if len(ntlm_value) > 40:
            timestamp_bytes = ntlm_value[24:32]
            timestamp = struct.unpack("<Q", timestamp_bytes)[0]
            timestamp_str = str(datetime.datetime(1601, 1, 1) + datetime.timedelta(microseconds=timestamp // 10))
            print(f"Client timestamp : {timestamp_str} {timestamp_bytes.hex()}")
            print(f"Client challenge : {ntlm_value[32:40].hex()}")
        else:
            print("Client challenge not present (NTLM challenge response too short).")
        if len(ntlm_value) > 44:
            # NTLMv2 blob header (RespType, HiRespType, reserved) right after the NtProofStr;
            # the AV_PAIRS start 28 bytes later (header 8 + timestamp 8 + challenge 8 + reserved 4)
            av_sig = b'\x01\x01\x00\x00\x00\x00\x00\x00'
            sig_index = ntlm_value.find(av_sig, 16)
            if sig_index == -1:
                print("\nAV_PAIRS signature not found in NTLMv2 blob.")
                return
            blob = ntlm_value[sig_index:]
            if len(blob) < 28:
                print("\nNTLMv2 blob too short to contain timestamp and challenge.")
                return
            av_pairs_data = blob[28:]
            print(f"AV_PAIRS hex: {av_pairs_data.hex()}")
            parse_av_pairs(av_pairs_data)
        else:
            print("\nAV_PAIRS not found or NTLMv2 response too short.")


if __name__ == "__main__":
    if len(argv) < 2:
        print("Usage: python ntlm_message_parser.py <hex_ntlm_message>")
    else:
        parse_ntlm_message(argv[1])
```