SensePost Training in the Cloud

Picture this. Every year, a group of Plakkers (our nickname for those who work at SensePost) descended on Las Vegas with more luggage than Imelda Marcos on a shoe shopping spree. In recent years, our kit list was immense: 200+ laptops, 25 servers, screens, switches and more backup disks than one should ever carry past TSA. Often we got there days before BlackHat started and spent 24 hours making sure our networks and servers started (inevitably they didn’t, which meant late nights debugging).

The problem was, this wasn’t scalable and it was hard work. So after 13 years of putting ourselves through hell, we decided to make the huge leap into the cloud.

The reasons we’ve never done this before are numerous, with bandwidth limitations being the primary concern. Our complex training environments, such as full Windows Active Directory Domains, multiple Linux boxen, web-applications and wireless also added to the complexity of going fully cloud based. We want our targets to be as legitimate as you’d expect in any corporate environment, and as such going cloud meant this wasn’t often possible.

Until now.

After successful training events at BlackHat US 2015 (where we trained 10% of all training attendees), BlackHat EU 2015 and a number of in-house training events, it’s time to share some of the magic.

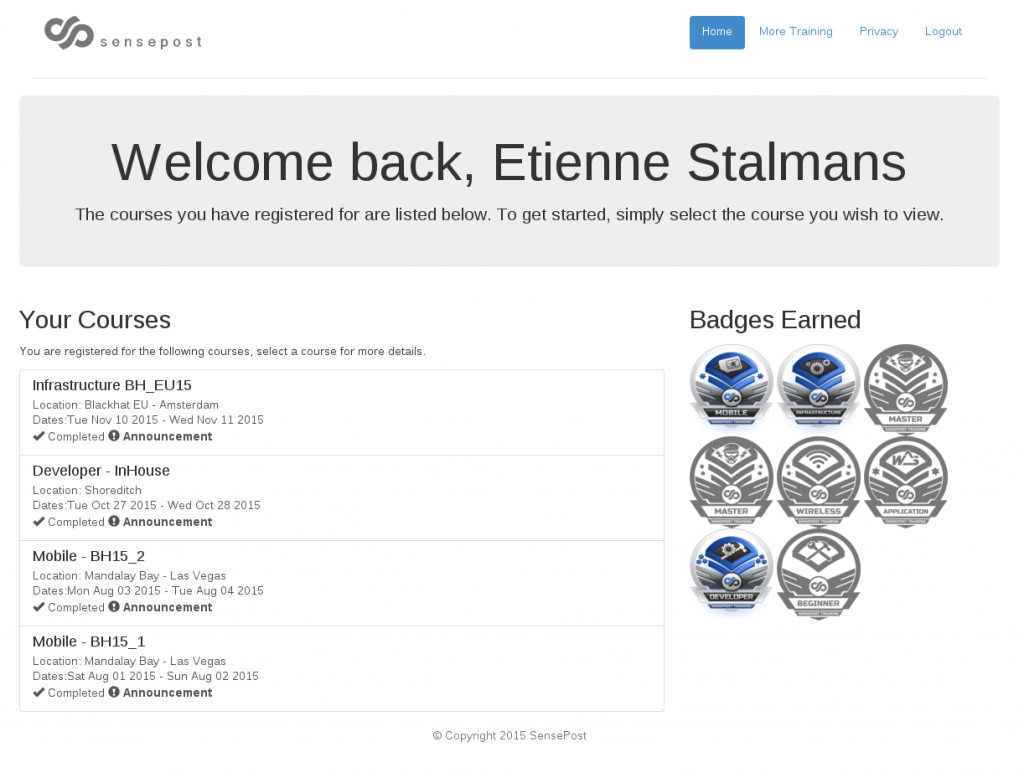

Training Portal

The first step we took to modernise the training, and facilitate the move into the Cloud, was to create a central training portal. Traditionally each course would host its own training server, with all course materials [slides, prac-sheets, tools]. This created both logistical and management nightmares whenever content needed updating or we used new hardware for a training event.

The training portal, still in its infancy, allowed trainers to host all course content in a single location, update this content on the fly and have new content pushed out to students automatically.

When a course was given multiple times, it was a simple process to clone the previous version of the course and update the necessary components. We’ve also adopted hipster languages and frameworks, because, well…

The new portal also allows students to register for multiple courses and track their progress through all the courses offered by SensePost. The content hosted in the training portal remains accessible to students long after a course has completed, further enhancing the training experience.

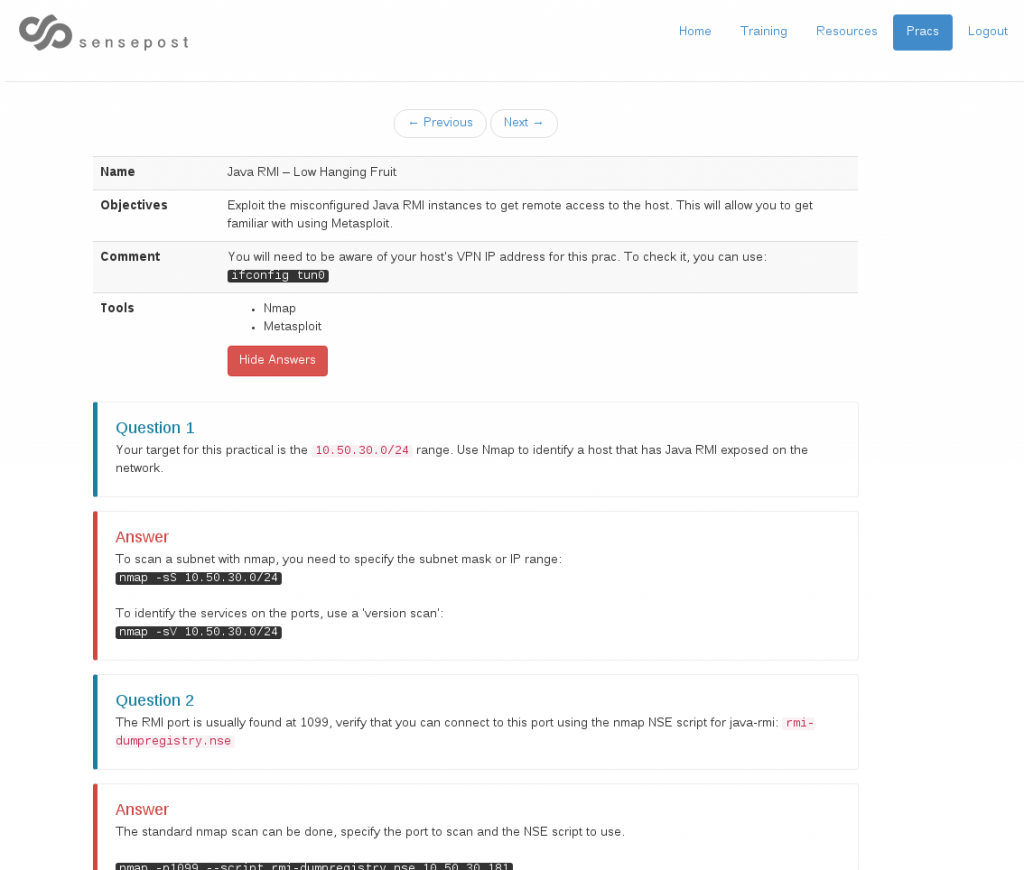

A major improvement to the training experience introduced by the portal is the new practical (laboratory exercise) sheets. Gone are the PDFs and DOCs that students need to download. No longer are there separate question and answer sheets. No longer do trainers have to update, upload and distribute prac sheets with each course. Students get to see all practical exercises available to them and, once the class has completed an exercise, a trainer can make the answers visible to students. This allows students to work through the pracs at their own pace, outside of the class-room environment.
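The answer-release behaviour described above can be sketched as a small data model. This is a minimal illustration only, not the portal's actual code; the class and its names (`Practical`, `answers_released`, `student_view`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Practical:
    """One practical exercise: the question is always visible, the answer is gated."""
    title: str
    question: str
    answer: str
    answers_released: bool = False  # flipped by the trainer once the class is done

    def release_answers(self) -> None:
        """Trainer action: make the answer visible to students."""
        self.answers_released = True

    def student_view(self) -> dict:
        """Return only the fields a student is allowed to see right now."""
        view = {"title": self.title, "question": self.question}
        if self.answers_released:
            view["answer"] = self.answer
        return view
```

Keeping the question and the answer in one record, with a single flag deciding what is shown, is what removes the need for separate question and answer sheets.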

Another added benefit is that students can get access to prerequisite content, or look at the slides, beforehand. We want people to come prepared and ready to learn, and this new portal allows for that.

Cloud Infrastructure

The next step in upgrading SensePost training was to move all our servers into the cloud. This meant taking practical exercises that existed in multiple forms, on multiple servers and recreating them on cloud infrastructure. No mean feat when you think we have seven courses, each with between 10 and 20 practicals.

Training 1.0:

To give you a small idea of how prac exercises used to run, here is a brief guide to running the old training environment:

1. Start Prac server(s)

   - Start Windows Server VM(s)

   - Start Linux Server VM(s)

2. Start Class firewall

   - Configure firewall

   - ./setfw.sh open

3. Start Prac 1

   - Run ./start-prac1.sh (on prac server)

   - Test that all is working. Something broke? cry, shout, go to step 2, test again.

4. Reconfigure firewall for Prac 1

   - ./setfw.sh prac1

5. Stop Prac 1

   - Run ./stop-prac1.sh (on prac server)

   - Test that everything has reverted

6. Repeat from step 3.

Now this may not seem overly complicated, but after 13 years of training we sat with multiple versions of each ‘start-pracX.sh’ script, and for each course these were named and configured differently. These scripts had numerous nuances that only the original authors understood, and making any modification typically resulted in 6 hours of troubleshooting.

The second issue was that each class, of say 40 students, would share a single target server. This meant that one person was able to ruin the experience for 39 other students, simply through a '; DROP TABLES -- or by launching the wrong exploit, etc.

Training 2.0:

With our proposed training infrastructure architecture, we aimed to create a ‘click-once-and-deploy’ solution that allowed a trainer to start the prac servers and get on to the important stuff: training.

We also wanted to give each student their own training environment, allowing for as much haxory and experimentation as they liked, without other students being impacted. Finally, we wanted this to be scalable. If we had 10 students, we would create 10 environments. 50 students? No problem!

To accomplish our first goal we turned to Amazon AWS and all of the awesomeness it offers. Existing pracs and new pracs were deployed into AWS, with a few auto-init scripts. A custom VPC allowed multiple servers to communicate. Save the server as an AMI and now we could move our pracs around the world, deploy in any region and train anyone, anywhere (given sufficient connectivity).

Next we needed to ensure that each student had their own environment. Again, this was simple to implement in AWS. We simply needed to host a VPN server for each student, create a DNS hostname for that server (using Route53) and ask students to connect to the VPN server. Once a VPN connection was established, students could access all the servers connected to that VPN. Goal 2 accomplished.
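As a rough illustration of the one-environment-per-student idea, the sketch below derives an environment label and a VPN hostname for each student. The naming scheme, the `training.example.com` zone and the function name are assumptions made up for this example; the real Route53 records and VPN setup are not shown in this post.

```python
def student_environments(course: str, count: int, zone: str = "training.example.com"):
    """Return one environment record per student, numbered from 1.

    Each record carries a unique label and the DNS name that the
    student's VPN server would be published under (hypothetical scheme).
    """
    if count < 1:
        raise ValueError("need at least one student")
    envs = []
    for n in range(1, count + 1):
        label = f"{course}-student{n:02d}"
        envs.append({"label": label, "vpn_host": f"{label}.{zone}"})
    return envs


if __name__ == "__main__":
    # Print the hostname each student would be asked to connect to.
    for env in student_environments("infra", 3):
        print(env["vpn_host"])
```

Because every student gets a distinct hostname, a broken environment can be replaced without touching anyone else's connection details.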

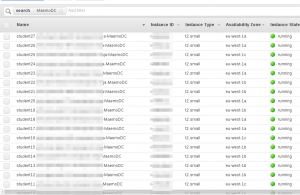

Finally, to make this scalable, we went with CloudFormation. Now CloudFormation is just unbelievably awesome. By configuring stacks in CloudFormation (a simple JSON document) we could define which servers and VPCs should be started for each course. Then it is as simple as requesting multiple stacks to be spawned. Launch the infrastructure training for 30 students? Awesome:

./aws-infrastructure deploy 30

Simple.
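For readers who have not seen a CloudFormation stack definition, here is a heavily trimmed sketch of what such a JSON document might contain, built in Python so the same template can be reused for every student. The resource names, CIDR ranges, instance type, stack naming and the `AmiId` parameter are placeholders invented for this example; this is not the template or logic behind `./aws-infrastructure`.

```python
import json


def build_template() -> dict:
    """Return a minimal CloudFormation template: one VPC, one subnet, one server."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "One student's training environment (illustrative only)",
        "Parameters": {
            # The AMI holding the saved prac server is supplied at launch time.
            "AmiId": {"Type": "AWS::EC2::Image::Id"},
        },
        "Resources": {
            "StudentVpc": {
                "Type": "AWS::EC2::VPC",
                "Properties": {"CidrBlock": "10.0.0.0/16"},
            },
            "StudentSubnet": {
                "Type": "AWS::EC2::Subnet",
                "Properties": {
                    "VpcId": {"Ref": "StudentVpc"},
                    "CidrBlock": "10.0.1.0/24",
                },
            },
            "PracServer": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "ImageId": {"Ref": "AmiId"},
                    "InstanceType": "t2.micro",
                    "SubnetId": {"Ref": "StudentSubnet"},
                },
            },
        },
    }


def stack_requests(template: dict, students: int, ami_id: str) -> list:
    """Build one stack request per student, all sharing the same template body."""
    body = json.dumps(template)
    return [
        {
            "StackName": f"training-student-{n}",
            "TemplateBody": body,
            "Parameters": [{"ParameterKey": "AmiId", "ParameterValue": ami_id}],
        }
        for n in range(1, students + 1)
    ]
```

Each dictionary uses the keyword names that boto3's CloudFormation `create_stack` call accepts (`StackName`, `TemplateBody`, `Parameters`), so under these assumptions launching a class would be a loop over that list.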

Case Study: Infrastructure Training

One of the more complex training environments we came up with was for our Enterprise Infrastructure Hacking Bootcamp. With this course we aim to introduce students to hacking infrastructure, starting with footprinting and ending in Domain Admin on a corporate network.

For this we needed vulnerable Linux targets and multiple Windows boxes, all connected to a corporate domain. This is where the cloud became really fun.

Because we were feeling particularly hipster, we even threw in a bit of Docker magic. Now we could run each Linux prac in a Docker container. This meant that a single server could host multiple pracs, without any of those practicals interfering with each other. For the Windows based exercises we needed to deploy multiple hosts. Again, using AWS made this super simple and we were able to create a Domain Controller, Exchange server, SQL server and a few others with minimal effort.

When spinning up 27 instances for BlackHat Europe, it took 8 minutes to have the full class set up. This is a massive change from the two days of onsite setup we needed to do for previous training events!

During the training we had a student crash the SQL Server box they were exploiting. Instead of this causing chaos and preventing everyone else from working, we simply rebooted the EC2 instance at fault and the student could continue exploiting it with only a 2 minute break in pwnage. Similarly, we had one VPC hit a wobble on day two of training. The fix? Just spin up a new instance! After 5 minutes of downtime, the student was back up and running and exploiting as if nothing had happened. In the meantime, everyone else got to carry on with their practical exercises.
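To make the one-container-per-prac idea concrete, the sketch below builds a `docker run` command line for each Linux prac, publishing each on its own host port so that several can share one server. The image names, port numbers and function name are invented for illustration; the post does not say how the real containers were started.

```python
def docker_run_commands(pracs: dict, first_host_port: int = 8001) -> list:
    """Build one `docker run` argument list per prac.

    `pracs` maps a container name to (image, container_port). Containers are
    processed in name order and given consecutive host ports.
    """
    commands = []
    for offset, (name, (image, container_port)) in enumerate(sorted(pracs.items())):
        host_port = first_host_port + offset
        commands.append([
            "docker", "run",
            "-d",                                   # run in the background
            "--name", name,                         # one named container per prac
            "-p", f"{host_port}:{container_port}",  # publish on a unique host port
            image,
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical pracs; print the commands rather than running them.
    example = {"prac1": ("example/prac1", 22), "prac2": ("example/prac2", 80)}
    for argv in docker_run_commands(example):
        print(" ".join(argv))
```

On a host with Docker installed, each argument list could be handed to `subprocess.run`. Building the commands separately from running them keeps the port assignment easy to check.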

Training Tactics

Training large groups of students can be challenging. You need to cater for a wide variety of skill levels, and we as trainers have to find the balance so that the course isn’t moving too fast or too slow. Having CTFs running throughout our courses, for those who are far ahead, has proven to be an absolute winner, especially at BlackHat EU, where the majority were pretty skilled.

While we’re giving courses there is often a lot of theory which we have to plough through. For some of us it may seem exciting because we know the stuff and we could be very enthusiastic about it, but take note of the dude sleeping on his desk in the back row. It’s called “Death By PowerPoint” and we don’t want it.

The best approach is to make the theory as practical as possible and break away from slides as much as you can to demonstrate. For example, if you’re talking about the different types of Nmap scans you could mumble on about SYN and full TCP or show the guys how it works. This will keep the flow of your training dynamic, which is key. It will also move our training away from having “theory sections” and “practical sections” to a full course of pure pwnage.

Going Forward

Now that we have got the training deployed in the cloud, and things just work, it’s time to move forward and improve. There are multiple projects in the pipeline, with the biggest being an upgrade to the training portal. The new training portal will be designed to allow registration outside of our usual training events: students sign up, pay and then have full access to the training course. The next bit of awesomeness will be to allow registered students to control their own training environment. Want to do training between 2am and 5am? Sure, log in to the training portal, click launch training, and wait for your instances to be launched. VPN credentials will be presented to the student, who then has full access to and control over their own training environment.

Conclusion

We learned a lot ourselves moving to the cloud and solved a bunch of really complex problems in the process. For example, we managed to port our wireless course (hello, Wi-Fi hacking!) into AWS, which will be the subject of its own blog post shortly.

What we did learn is that, no matter how much we make fun of the “cloud” buzzword, the Cloud is awesome.