This is an update on a previous post on foreign NT hashes, where I got things a little wrong by believing the source encoding matters for an NT hash. It doesn’t really; let me show you why.

I spent a bit of time exploring further and reduced the problem to a single test case. Jameel gave me his name as a password in Arabic:

Running this in a terminal will give it to you straight:

echo d8acd985d98ad9842031 | xxd -ps -r

That’s “Jameel 1” in Arabic (note the space before the digit). Most places encode it as UTF8, which gives these bytes:

d8 ac d9 85 d9 8a d9 84 20 31

The last two bytes are a space (0x20) and the digit 1 (0x31), encoded in one byte each, while the Arabic characters take two bytes each. So Jameel’s name is only 4 Arabic characters. That’s efficient!
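To make the byte counts concrete, here is a small Python sketch that decodes those UTF8 bytes and prints each character's code point and encoded length. The variable names are mine, purely for illustration.

```python
# UTF-8 bytes of the password, exactly as listed above
utf8_bytes = bytes.fromhex("d8acd985d98ad9842031")
password = utf8_bytes.decode("utf-8")

# For each character: its Unicode code point and how many UTF-8 bytes it needs
for ch in password:
    print(f"U+{ord(ch):04X} -> {len(ch.encode('utf-8'))} byte(s)")

# Count the characters that fall in the main Arabic block (U+0600 - U+06FF)
arabic_letters = [ch for ch in password if "\u0600" <= ch <= "\u06ff"]
print(len(password), "characters,", len(arabic_letters), "of them Arabic")
```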

Right, so if we encode it using code page 1256 aka the Windows Arabic code page, we get the following bytes:

cc e3 ed e1 20 31
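You can reproduce those bytes without a Windows box. This is a minimal sketch that assumes Python's built-in "cp1256" codec is an acceptable stand-in for the Windows Arabic code page:

```python
# Decode the UTF-8 bytes to text, then re-encode with the Windows Arabic code page
password = bytes.fromhex("d8acd985d98ad9842031").decode("utf-8")
cp1256_bytes = password.encode("cp1256")

# One byte per character this time, Arabic letters included
print(cp1256_bytes.hex(" "))
```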

If we set that as a password on a Windows 10 VM and check the resulting NT hash with Mimikatz, we get:

63123766334a1bf784d4f123e0f4ab71

Wanting to save myself the trouble of a VM, I checked if Python can produce the hash for me, and it can with the following command:

python3 -c 'import hashlib; print(hashlib.new("md4", bytes.fromhex("d8acd985d98ad9842031").decode("utf-8").encode("utf-16le")).hexdigest())'

(The code above takes the password as hex encoded UTF8 rather than the Arabic text itself, because of blog engine UTF8 problems)
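Some Python builds no longer expose MD4 through hashlib (for example when OpenSSL's legacy provider is disabled). As a fallback, here is a minimal pure-Python sketch of MD4 following RFC 1320, wrapped in a hypothetical `nt_hash` helper. It is for illustration only and is not what Windows or hashcat run internally.

```python
import struct

def md4(data: bytes) -> bytes:
    """Minimal MD4 (RFC 1320) for illustration; not optimised or hardened."""
    mask = 0xFFFFFFFF

    def rotl(x: int, n: int) -> int:
        return ((x << n) | (x >> (32 - n))) & mask

    state = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476]
    # Pad: 0x80, zeros up to 56 mod 64, then the bit length as 64-bit little-endian
    msg = data + b"\x80" + b"\x00" * ((55 - len(data)) % 64)
    msg += struct.pack("<Q", (len(data) * 8) & 0xFFFFFFFFFFFFFFFF)

    # Message word order for rounds 2 and 3 (round 1 uses 0..15 in order)
    order2 = [0, 4, 8, 12, 1, 5, 9, 13, 2, 6, 10, 14, 3, 7, 11, 15]
    order3 = [0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15]

    for off in range(0, len(msg), 64):
        x = struct.unpack("<16I", msg[off:off + 64])
        a, b, c, d = state
        for i in range(16):  # round 1
            f = (b & c) | (~b & d)
            a = rotl((a + f + x[i]) & mask, (3, 7, 11, 19)[i % 4])
            a, b, c, d = d, a, b, c
        for i in range(16):  # round 2
            g = (b & c) | (b & d) | (c & d)
            a = rotl((a + g + x[order2[i]] + 0x5A827999) & mask, (3, 5, 9, 13)[i % 4])
            a, b, c, d = d, a, b, c
        for i in range(16):  # round 3
            h = b ^ c ^ d
            a = rotl((a + h + x[order3[i]] + 0x6ED9EBA1) & mask, (3, 9, 11, 15)[i % 4])
            a, b, c, d = d, a, b, c
        state = [(s + v) & mask for s, v in zip(state, (a, b, c, d))]
    return struct.pack("<4I", *state)

def nt_hash(password: str) -> str:
    """NT hash = MD4 over the UTF-16LE encoding of the password."""
    return md4(password.encode("utf-16le")).hex()

# The password is built from its UTF-8 hex to avoid copy/paste encoding problems
password = bytes.fromhex("d8acd985d98ad9842031").decode("utf-8")
print(nt_hash(password))
```

If the sketch is right, the printed value should be the same hash Mimikatz reported above.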

And it did, the output matched the Mimikatz hash! Next up, I simply tried cracking that hash with a wordlist containing the cleartext. The result? hashcat failed to crack it, whereas john succeeded. That’s pretty useful to know!

However, I was unsatisfied with being forced to use john only, and wanted to know why hashcat wasn’t cracking it.

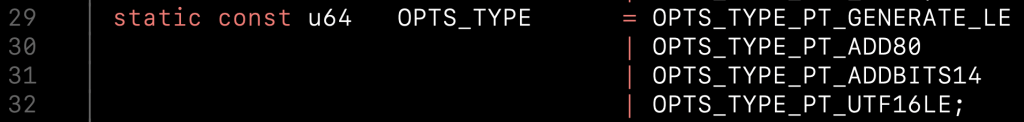

As the Python above shows, an NT hash is made by encoding the password as UTF16LE and then MD4 hashing it. So next I had a look at what hashcat does in the NT hash module, hashcat/src/modules/module_01000.c, and in it you see:

OK, so it’s doing the UTF16LE encoding for us, which means that if you send it a UTF8 or CP1256 cleartext, it’ll convert it to mostly the same UTF16LE. I double-checked: whether you start with the CP1256 or the UTF8 bytes for Jameel’s password, a proper conversion gives the same UTF16LE result, which is:

2c06 4506 4a06 4406 2000 3100
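That double check is easy to repeat. A small sketch, again assuming Python's "cp1256" codec matches the Windows code page:

```python
# The same password, starting from two different source encodings
from_utf8 = bytes.fromhex("d8acd985d98ad9842031").decode("utf-8")
from_cp1256 = bytes.fromhex("cce3ede12031").decode("cp1256")

# Once decoded to text, the source encoding is gone
print("same text:", from_utf8 == from_cp1256)

# ...so the UTF-16LE bytes that get MD4 hashed are identical too
utf16le = from_utf8.encode("utf-16le")
print(utf16le.hex())
```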

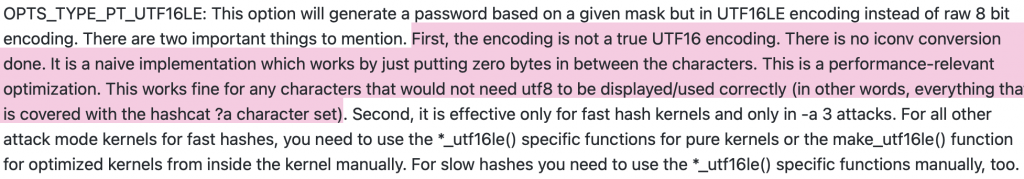

The reason why this can’t handle non-ASCII is explained in the hashcat plugin development guide:

OK, so if we want to crack this specific password using raw bytes, we need to crack MD4 (mode 900) rather than NT hash (mode 1000), and we need to pass those UTF16LE bytes in exactly, using --hex-charset. And voilà, it cracks!

63123766334a1bf784d4f123e0f4ab71:$HEX[2c0645064a06440620003100]
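To see why feeding in the exact bytes helps, compare a proper UTF16LE encoding with a naive conversion that just puts a zero byte after every input byte. The `naive_widen` function below is my own assumption about what a simplistic converter might do; it is not code taken from hashcat.

```python
def naive_widen(raw: bytes) -> bytes:
    """Hypothetical naive 'UTF-16LE' conversion: append 0x00 to every input byte."""
    return b"".join(bytes([b, 0]) for b in raw)

utf8_clear = bytes.fromhex("d8acd985d98ad9842031")
proper = utf8_clear.decode("utf-8").encode("utf-16le")
naive = naive_widen(utf8_clear)

print("proper:", proper.hex())
print("naive: ", naive.hex())

# For plain ASCII both approaches agree, which is why the problem hides so well
print("ASCII agrees: ", naive_widen(b"1") == "1".encode("utf-16le"))
print("Arabic agrees:", naive == proper)
```

Under that assumption the bytes being MD4 hashed differ from what Windows hashed, so a wordlist entry can never match. Handing over the UTF16LE bytes directly sidesteps the conversion.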

So, we have a way to crack Arabic. The source encoding doesn’t really matter, for two reasons: hashcat doesn’t UTF16LE encode these characters properly in NT hash mode (for a reason I haven’t fully figured out yet), and we can skip that step entirely by assuming we’re dealing with UTF16LE in a brute force. If you look into UTF16 encoding, you’ll see the Arabic characters are mostly in the 0600 - 06FF range, and the Latin digits are in the range 0030 - 0039. We’re looking at little endian UTF16, so the two bytes of each character are swapped, e.g. 0600 becomes 0006. So if we want to crack Jameel’s password more generically, we could do this:

hashcat -m900 hash -a3 --hex-charset -1 06 -2 00 -3 2030313233343536373839 ?b?1?b?1?b?1?b?1?3?2?3?2

This uses two kinds of byte pair. The first is ?b?1, which brute forces the entire keyspace of 0006 - ff06, i.e. the Arabic characters. The second is ?3?2, which brute forces 3000 - 3900 as well as 2000, i.e. the Latin digits and a space.
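As a sanity check on the mask above, this sketch decodes one ?b?1 pair back to a character and counts how many candidates the whole mask generates. The counts come from reading the mask, not from hashcat's own output.

```python
# A ?b?1 pair is (any byte, 0x06): the little-endian form of U+06xx
pair = bytes([0x2C, 0x06])
print(f"U+{ord(pair.decode('utf-16le')):04X}")  # first letter of the password

# Candidates per position
arabic_pairs = 256        # ?b covers 0x00-0xff, ?1 is fixed at 0x06
digit_or_space_pairs = 11  # ?3 is a space plus the digits 0-9, ?2 is fixed at 0x00

# The mask has four ?b?1 pairs followed by two ?3?2 pairs
keyspace = arabic_pairs ** 4 * digit_or_space_pairs ** 2
print(keyspace)
```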

This is obviously very slow: attempting just six Arabic characters on my laptop, cracking at 2GH/s, hashcat estimates 1 day, 14 hours.
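That estimate is easy to sanity check by hand. The sketch below assumes six ?b?1 pairs (256 candidates each) and a flat 2GH/s, so it only lands in the same ballpark as hashcat's figure, which depends on the real measured speed.

```python
# Assumption: six Arabic positions, each a full ?b sweep (256 values)
keyspace = 256 ** 6
rate = 2e9  # hashes per second, i.e. 2GH/s

seconds = keyspace / rate
days, remainder = divmod(seconds, 86400)
print(f"{keyspace} candidates")
print(f"about {int(days)} day(s) and {remainder / 3600:.1f} hours at 2GH/s")
```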

I learned a bit going down this rabbit hole and hope it’s useful for someone else.